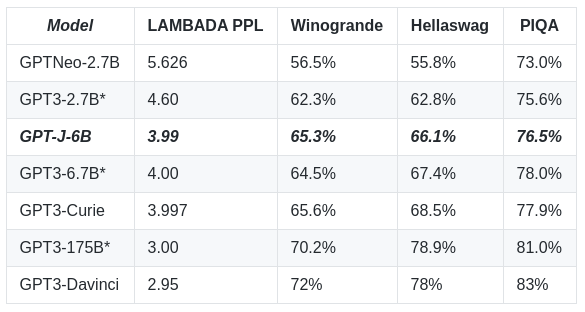

Ben and I have released GPT-J, a 6B-parameter JAX-based Transformer LM 🥳

- Performs on par with 6.7B GPT-3

- Performs better and decodes faster than GPT-Neo

- repo + colab + free web demo

article: bit.ly/2TH8yl0

repo: bit.ly/3eszQ6C

Colab: bit.ly/3w0fB6n

demo: bit.ly/3psRCdM

- Trained on 400B tokens with TPU v3-256 for five weeks

- GPT-J performs much closer to GPT-3 of similar size than GPT-Neo does
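For a rough sense of scale, the training figures above imply an average throughput that can be worked out in a few lines. This is only a back-of-the-envelope sketch: it assumes the five weeks were uninterrupted wall-clock time and that "v3-256" means 256 TPU cores, neither of which is stated in the thread.

```python
# Back-of-the-envelope average throughput implied by the thread's figures.
TOKENS = 400e9   # "400B tokens" (from the thread)
WEEKS = 5        # "five weeks" (from the thread)
CORES = 256      # assumption: "v3-256" denotes 256 TPU cores

# Assumption: training ran continuously, with no downtime or restarts.
seconds = WEEKS * 7 * 24 * 3600

tokens_per_second = TOKENS / seconds
tokens_per_core_second = tokens_per_second / CORES

print(f"{tokens_per_second:,.0f} tokens/s overall")
print(f"{tokens_per_core_second:,.0f} tokens/s per core")
```

Under those assumptions this comes to roughly 130k tokens per second overall, or about 500 tokens per second per core. Any real downtime would make the actual rate while running higher.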

Also big thanks to EleutherAI, @jekbradbury, Janko Prester, Laurence Golding and @nabla_theta for their valuable assistance!

Feel free to ask any questions about GPT-J at EleutherAI 😉

You can also join the discussion and find some interesting use cases and results here!

eleuther.ai
