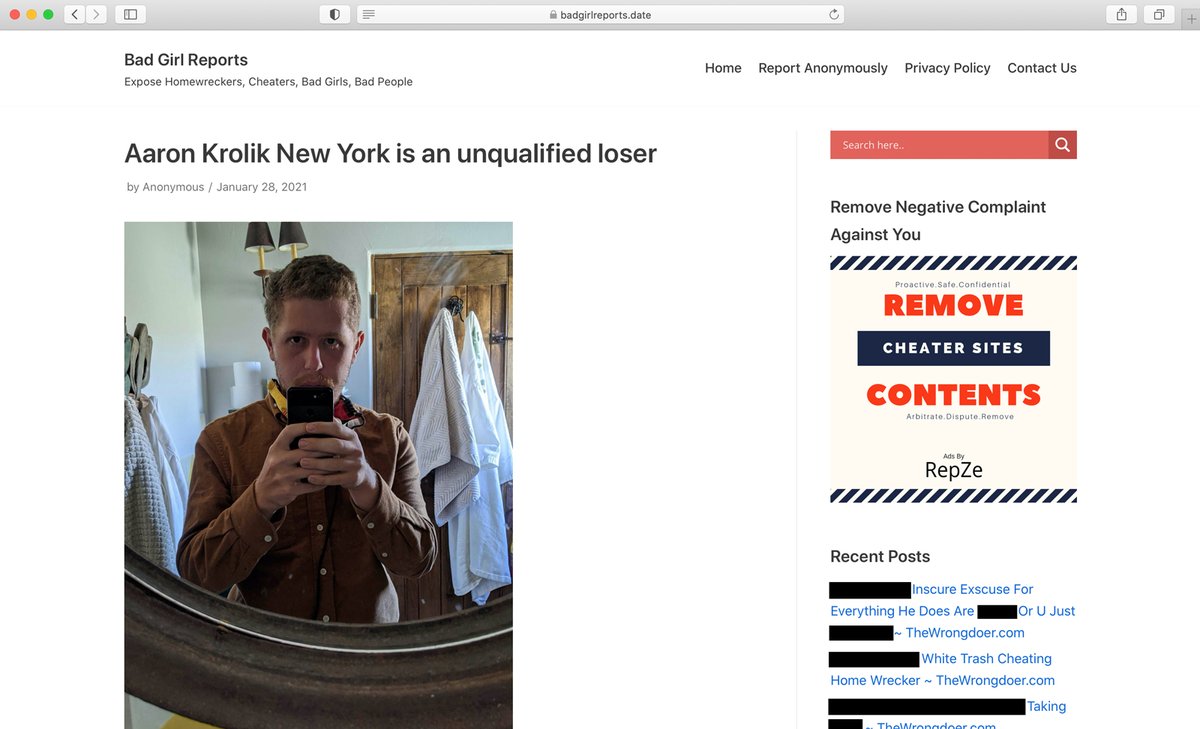

Google has a new concept called "known victims" for victims of revenge porn & people serially attacked on slander sites. Once a person requests removal of these results from a search of their name, Google will automatically suppress similar content from resurfacing. nytimes.com/2021/06/10/tec…

One of the surprising things about working on the slander series is how few people in the field, even experts, know that Google voluntarily removes some search results. (No court order needed!) You have to visit this generic URL: support.google.com/websearch/trou…

Because so few people know about it, "reputation managers" are charging people like $500 a pop to "remove damaging information from Google results." And ALL THEY DO is fill out that form, which is free. Someone tried to hawk this service to my husband after he came under attack.

The reason Google's changes are meaningful (assuming they work) is that when someone comes after you online, they tend to do it repeatedly. Plus, sites scrape each other, compounding the damage, so you end up filling out these forms again & again & again. "Known victims" theoretically fixes that.

Google blog post on the changes: "Improving Search to better protect people from harassment" blog.google/products/searc…

It is WILD how much the internet has changed in a decade.