📺 How to train state-of-the-art sentence embeddings? 📺

Just uploaded my 3-part video series on the theory of how to train state-of-the-art sentence embedding models:

📺 Part 1 - Applications & Definition

- Why do we need dense representations?

- Definition of dense representations

- What does "semantically similar" mean?

- Applications: Clustering, Search, Zero- & Few-Shot-Classification...
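To make "semantically similar" concrete, here is a minimal sketch of comparing dense representations with cosine similarity. The 4-dimensional vectors are hypothetical values picked by hand for illustration; a real model produces vectors with hundreds of dimensions. The helper names `cosine_similarity` and `most_similar` are also just illustrative.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical embeddings, hand-picked for illustration only.
embeddings = {
    "A man is eating pizza": [0.9, 0.1, 0.0, 0.2],
    "Someone eats a slice of pizza": [0.8, 0.2, 0.1, 0.3],
    "The stock market fell today": [0.0, 0.9, 0.7, 0.1],
}


def most_similar(query, corpus):
    """Return the corpus sentence whose embedding is closest to the query's."""
    candidates = (s for s in corpus if s != query)
    return max(candidates, key=lambda s: cosine_similarity(corpus[query], corpus[s]))


print(most_similar("A man is eating pizza", embeddings))
```

The same nearest-neighbor lookup is the building block behind search and clustering; only the source of the vectors changes.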

📺 Part 2 - Training & Loss Functions

- Basic Training Setup

- Loss functions: Contrastive Loss, Triplet Loss, Batch Hard Triplet Loss, Multiple Negatives Ranking Loss

- Training with hard negatives for semantic search

- Mining of hard negatives
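As a rough sketch of one of the losses listed above, Multiple Negatives Ranking Loss can be written as a cross-entropy over in-batch similarity scores: for each anchor, its own positive should score higher than the positives of all other pairs in the batch. This is plain Python for illustration, not the sbert.net implementation; the function names, the toy vectors, and the scale of 20 are assumptions.

```python
import math


def cos_sim(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def multiple_negatives_ranking_loss(anchors, positives, scale=20.0):
    """Mean cross-entropy where positives[i] is the correct match for anchors[i].

    All other positives in the batch act as negatives for anchor i.
    The scale factor is an assumed value for this sketch.
    """
    losses = []
    for i, anchor in enumerate(anchors):
        scores = [scale * cos_sim(anchor, p) for p in positives]
        # Numerically stable log-sum-exp over the scores for this anchor.
        top = max(scores)
        log_sum_exp = top + math.log(sum(math.exp(s - top) for s in scores))
        # Negative log-softmax of the score of the true pair.
        losses.append(log_sum_exp - scores[i])
    return sum(losses) / len(losses)


# Toy batch: each anchor matches the positive at the same index.
anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[1.0, 0.0], [0.0, 1.0]]
shuffled = [[0.0, 1.0], [1.0, 0.0]]

print(multiple_negatives_ranking_loss(anchors, aligned))
print(multiple_negatives_ranking_loss(anchors, shuffled))
```

The loss is close to zero when every anchor is nearest to its own positive and grows when another pair's positive scores higher. A mined hard negative would simply be appended to the candidate list as an extra competitor.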

📺 Part 3 - Advanced Training

- Multilingual Text Embeddings

- Data Augmentation with Cross-Encoders

- Unsupervised Text Embedding Learning

- Pre-Training Methods for dense representations

- Neural Search

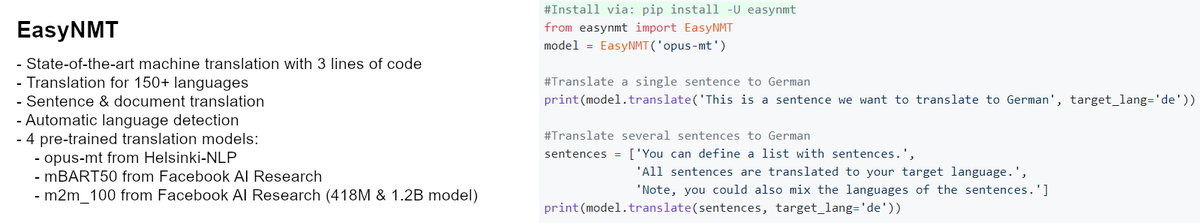

Interested in actual code examples? Check the docs at sbert.net

The videos were recorded for the great "Deep Learning for NLP" lecture by Ivan Habernal and @MohsenMes

github.com/dl4nlp-tuda202…
