NEW BLOG POST: Machine learning in a hurry: what I've learned from the #SLICED ML competition

varianceexplained.org/r/sliced-ml/ #rstats

You can vote for me to go to the playoffs of #SLICED here! forms.gle/1JNC31EAbxTAaf…

To summarize what I've learned so far from #SLICED 🧵

1. The tidymodels #rstats package is a really powerful "pit of success" for machine learning 🔥 tidymodels.org

Perhaps the most important innovation is the combination of recipes (feature engineering) and models into an encapsulated workflow. (Continued)

We know we have to separate train/test data for model fitting. But feature engineering steps like

* normalization

* imputing missing values

* dimensionality reduction

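*also* have to be trained! Fitting them on the training and test data together can cause data leakage, because statistics from the test set (like its mean or variance) end up baked into the training features! 😱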
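
To make the leakage concrete, here's a toy sketch in plain Python. It is not tidymodels code, and the helper names and numbers are made up for illustration. It "trains" a normalization step on the training rows only, then shows how pooling train and test changes the statistics used to scale the training features. In recipes, the analogous step would be `step_normalize()`.

```python
from statistics import mean, pstdev


def fit_normalizer(values):
    """'Train' the step: learn the center and scale from these values only."""
    return mean(values), pstdev(values)


def apply_normalizer(params, values):
    """Apply previously learned parameters; nothing is re-estimated here."""
    center, scale = params
    return [(v - center) / scale for v in values]


train = [1.0, 2.0, 3.0, 4.0, 5.0]
test = [50.0, 60.0]  # made-up test rows on a very different range

# Correct: statistics come from the training rows only
params = fit_normalizer(train)
train_scaled = apply_normalizer(params, train)
test_scaled = apply_normalizer(params, test)

# Leaky: statistics come from training + test rows pooled together,
# so the test set changes how the training features look
leaky_params = fit_normalizer(train + test)
train_scaled_leaky = apply_normalizer(leaky_params, train)

print("train-only params:", params)
print("pooled params:    ", leaky_params)
```

The key design point is that `apply_normalizer` never computes statistics from the rows it transforms. That is what lets the same learned parameters be applied unchanged to new data.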

The recipes package solves this.

Instead of preprocessing the data yourself, you specify a recipe for cleaning, so that it can be prepared on any training set & applied to any test set.

That makes cross-validation easy, since the recipe is simply re-prepared on each fold's training data!

(Source: tidymodels.org/start/recipes/) #tidymodels #rstats

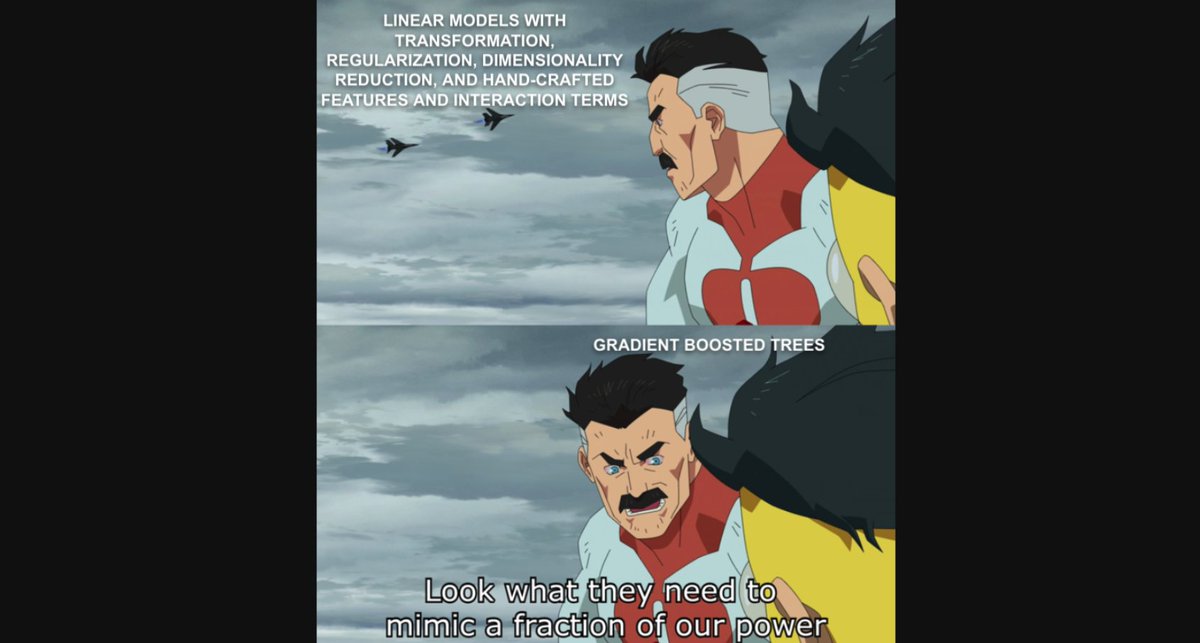
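
The "specify once, prepare on any training set" pattern can be sketched in plain Python as below. This is a toy illustration, not the recipes API. The classes `Recipe` and `TrainedRecipe` and the helpers `k_fold_indices` and `cross_validate` are hypothetical; only the verbs `prep`/`bake` are borrowed from recipes. In recipes itself, this spec would be something like `step_impute_mean()` followed by `step_normalize()`.

```python
from statistics import mean, pstdev
from typing import List, Optional, Tuple

Column = List[Optional[float]]  # one numeric feature; None marks a missing value


class TrainedRecipe:
    """Holds the statistics learned by prep(); bake() only applies them."""

    def __init__(self, center: float, scale: float):
        self.center = center
        self.scale = scale

    def bake(self, values: Column) -> List[float]:
        # Impute with the *training* mean, then normalize with training statistics
        filled = [self.center if v is None else v for v in values]
        return [(v - self.center) / self.scale for v in filled]


class Recipe:
    """Untrained spec: mean-impute, then center and scale (hypothetical class)."""

    def prep(self, train: Column) -> TrainedRecipe:
        observed = [v for v in train if v is not None]
        # Fall back to a scale of 1.0 if the training values have zero spread
        return TrainedRecipe(center=mean(observed), scale=pstdev(observed) or 1.0)


def k_fold_indices(n: int, k: int) -> List[List[int]]:
    """Split row indices 0..n-1 into k contiguous folds of near-equal size."""
    folds, start = [], 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cross_validate(values: Column, recipe: Recipe, k: int) -> List[Tuple[TrainedRecipe, List[float]]]:
    """Re-prep the recipe on each fold's training rows, then bake the held-out rows."""
    results = []
    for held_out in k_fold_indices(len(values), k):
        held = set(held_out)
        analysis = [v for i, v in enumerate(values) if i not in held]
        trained = recipe.prep(analysis)  # never sees the held-out rows
        results.append((trained, trained.bake([values[i] for i in held_out])))
    return results


values = [1.0, None, 3.0, 5.0, None, 7.0]  # made-up toy column
for trained, baked in cross_validate(values, Recipe(), k=3):
    print(trained.center, baked)
```

Because `prep` returns a new trained object instead of mutating the spec, the same untrained `Recipe` can be reused safely across every fold.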

2. Gradient boosted trees are absolutely OP for Kaggle competitions on tabular data.

My background is statistics, so I really do like interpretable models where I know the generative process, understand the coefficients, and can calculate p-values

But xgboost PLAYS TO WIN

I've noticed xgboost suffers when the data has many low-value features (e.g. a sparse categorical variable), at least for modest values of learning rate / number of trees.

I think weak features are basically "diluting" the strong ones.

Anyone know of research on this problem?

3. You can often improve on xgboost by stacking it with another model!

Every model has some error. But if 2 models have *different* errors, then averaging them can sometimes make an even better model.

The stacks 📦 is the tidymodels way to do this: github.com/tidymodels/sta…
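
Here's a minimal sketch of the averaging idea in plain Python. It uses made-up predictions from two hypothetical models whose errors tend to point in opposite directions, and it picks a single blending weight by grid search. This is only the simplest version of the idea, not how the stacks package fits its weights. Choosing the weight on the same rows you score it on is also optimistic; in practice you'd estimate it on out-of-sample predictions.

```python
def mse(truth, pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(truth, pred)) / len(truth)


def blend(pred_a, pred_b, w):
    """Weighted average: w * model A + (1 - w) * model B."""
    return [w * a + (1 - w) * b for a, b in zip(pred_a, pred_b)]


def best_weight(truth, pred_a, pred_b, steps=100):
    """Grid-search the blending weight w in [0, 1] that minimizes MSE."""
    grid = [i / steps for i in range(steps + 1)]
    return min(grid, key=lambda w: mse(truth, blend(pred_a, pred_b, w)))


# Made-up data: model A tends to overshoot where model B undershoots
truth = [10.0, 20.0, 30.0, 40.0]
pred_a = [12.0, 18.0, 33.0, 38.0]  # errors: +2, -2, +3, -2
pred_b = [8.0, 22.0, 28.0, 41.0]   # errors: -2, +2, -2, +1

w = best_weight(truth, pred_a, pred_b)
print("model A MSE:", mse(truth, pred_a))
print("model B MSE:", mse(truth, pred_b))
print("simple average MSE:", mse(truth, blend(pred_a, pred_b, 0.5)))
print("best weight:", w, "blended MSE:", mse(truth, blend(pred_a, pred_b, w)))
```

Even the plain 50/50 average beats both models here, because their errors partly cancel. If the two models made the *same* mistakes, averaging would gain nothing.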

My screencast on #SLICED ep5, predicting Airbnb prices, is an example of model stacking. I stacked:

* an xgboost model on a few numeric features

* a LASSO model on thousands of text features

to make a model that easily beat either by itself #tidymodels

4. If I make it to the #SLICED playoffs, I need to learn how to meme.

@kierisi, @StatsInTheWild, and others have been crushing me in the chat voting portion of the competition, just because my screencasts didn't include "memes" or a "sense of humor" or "any personality at all"

I like to think it's never too late to learn.

So if you want to see me struggle to be relatable in the #SLICED playoffs, don't forget to vote! forms.gle/1JNC31EAbxTAaf…