So I wrote this previous thread in a hurry and didn’t take time to spell out what it means, and what the background is. So let me try again.

https://twitter.com/matthew_d_green/status/1423071186616000513

For the past decade, providers like Apple, WhatsApp/Facebook, Snapchat, and others have been adding end-to-end encryption to their text messaging and video services. This has been a huge boon for privacy. But governments have been opposed to it.

Encryption is great for privacy, but also makes (lawful) surveillance hard. For years national security agencies and law enforcement have been asking for “back doors” so that police can wiretap specific users. This hasn’t been very successful.

In 2019, Facebook announced plans to greatly expand their use of end-to-end encryption. A number of governments were very upset about this, and wrote an open letter urging them to stop. justice.gov/opa/pr/attorne…

The signers, led by US AG William Barr, presented an entirely new concern that had not been a major part of the conversation until then.

By adding encryption, Facebook would make it harder to scan messages for prohibited content like child porn and terrorist recruitment media.

These are bad things. I don’t particularly want to be on the side of child porn and I’m not a terrorist. But the problem is that encryption is a powerful tool that provides privacy, and you can’t really have strong privacy while also surveilling every image anyone sends.

A number of people pointed out that these scanning technologies are effectively (somewhat limited) mass surveillance tools. Not dissimilar to the tools that repressive regimes have deployed — just turned to different purposes.

In any case, the pressure by various governments (and in particular, the UK) has led many technologists to try to develop a “compromise”. Since files are encrypted on the server, let’s scan them on the endpoint (your phone) before they get encrypted.

This decentralizes the surveillance system. Your phone will do it for you! And then we can have encryption and our cake too.

There are a lot of problems with this idea. Yes — client side scanning (and encrypted images on server) is better than plaintext images and server-side scanning. But to some extent, the functionality is the same. And subject to abuse…

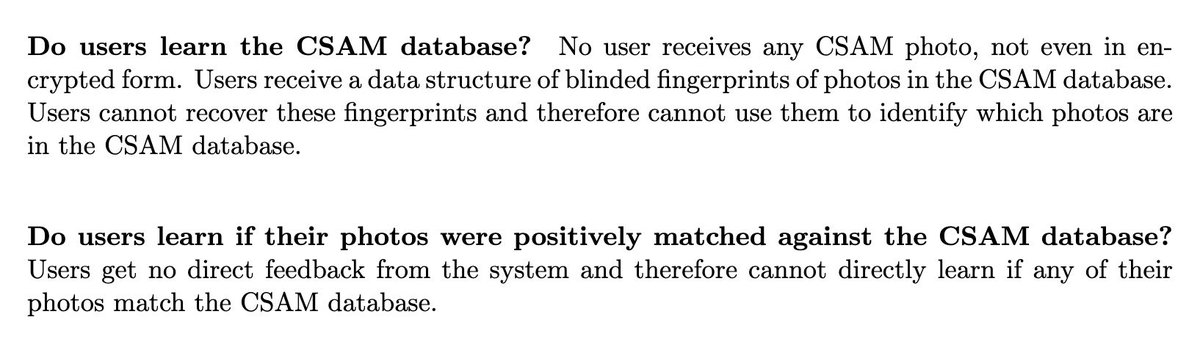

The way this will work is that your phone will download a database of “fingerprints” for all of the bad images (child porn, terrorist recruitment videos etc.) It will check each image on your phone for matches. The fingerprints are ‘imprecise’ so they can catch close matches.
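To make the matching step concrete, here is a minimal sketch of what “checking for close matches” could look like. Everything in it is an assumption for illustration: the 16-bit fingerprints, the `find_match` helper, and the `max_distance` threshold are made up, and this is not Apple’s actual design. It only shows the general idea of comparing fingerprints by how many bits differ.

```python
from typing import List, Optional


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


def find_match(fingerprint: int, database: List[int],
               max_distance: int = 4) -> Optional[int]:
    """Return the first database entry within max_distance bits, else None.

    max_distance is a hypothetical tolerance: 0 would demand an exact
    match, larger values catch more distant "close matches".
    """
    for entry in database:
        if hamming_distance(fingerprint, entry) <= max_distance:
            return entry
    return None


# Hypothetical 16-bit fingerprints standing in for the downloaded list.
database = [0b1011001011110000, 0b0000111100001111]

# A photo whose fingerprint differs from the first entry by a single bit.
photo_fingerprint = 0b1011001011110001

# A photo whose fingerprint is far from every entry.
unrelated_fingerprint = 0b0101010101010101

print(find_match(photo_fingerprint, database) is not None)      # close match
print(find_match(unrelated_fingerprint, database) is not None)  # no match
```

Note that the phone in this sketch never needs to know what the database entries depict. It just compares numbers.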

Whoever controls this list can search for whatever content they want on your phone, and you don’t really have any way to know what’s on that list because it’s invisible to you (and just a bunch of opaque numbers, even if you hack into your phone to get the list.)

The theory is that you will trust Apple to only include really bad images. Say, images curated by the National Center for Missing and Exploited Children (NCMEC). You’d better trust them, because trust is all you have.

(There are some other fancier approaches that split the database so your phone doesn’t even see it — the databases are actually trade secrets in some cases. This doesn’t change the functionality but makes the system even harder to check up on. Apple may use something like this.)

But there are worse things than worrying about Apple being malicious. I mentioned that these perceptual hash functions were “imprecise”. This is on purpose. They’re designed to find images that look like the bad images, even if they’ve been resized, compressed, etc.
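Here is a toy illustration of why these functions tolerate small changes. It uses a very simple “average hash” (one bit per pixel, set if the pixel is brighter than the image’s mean). That is my stand-in for the sketch, not the function Apple or anyone else actually deploys, and the tiny 4x4 grayscale “images” are invented for the example.

```python
def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, 1 if brighter than the mean.

    pixels is a 2D list of grayscale values (0-255).
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a, b):
    """Count the bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


# Invented 4x4 grayscale image.
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [220, 230, 30, 40],
    [225, 235, 35, 45],
]

# The same image, uniformly brightened (a crude stand-in for re-encoding).
brightened = [[min(255, p + 12) for p in row] for row in original]

# A visually different image (checkerboard-like pattern).
different = [
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
]

h_orig = average_hash(original)
print(hamming_distance(h_orig, average_hash(brightened)))  # small distance
print(hamming_distance(h_orig, average_hash(different)))   # large distance
```

The hash only captures coarse structure, so the brightened copy lands on the same fingerprint while the different image lands far away. That coarseness is the feature, and it is also what leaves room for unrelated images to land close together.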

This means that, depending on how they work, it might be possible for someone to make problematic images that “match” entirely harmless images. Like political images shared by persecuted groups. These harmless images would be reported to the provider.

I can’t imagine anyone would do this just for the lulz (cough, 8chan). Just to have fun with someone they don’t like.

But there are some really bad people in the world who would do it on purpose.

And the problem is that none of this technology was designed to stop this sort of malicious behavior. In the past it was always used to scan *unencrypted* content. If deployed in encrypted systems (and that is the goal) then it opens up an entirely new class of attacks.

Ok I’m almost done, I promise. But first I want to talk about how Apple is going to spin this and why it matters so much.

Initially Apple is not going to deploy this system on your encrypted images. They’re going to use it on your phone’s photo library, and only if you have iCloud Backup turned on.

So in that sense, “phew”: it will only scan data that Apple’s servers already have. No problem right?

But ask yourself: why would Apple spend so much time and effort designing a system that is *specifically* designed to scan images that exist (in plaintext) only on your phone — if they didn’t eventually plan to use it for data that you don’t share in plaintext with Apple?

Regardless of what Apple’s long term plans are, they’ve sent a very clear signal. In their (very influential) opinion, it is safe to build systems that scan users’ phones for prohibited content.

That’s the message they’re sending to governments, competing services, China, you.

Whether they turn out to be right or wrong on that point hardly matters. This will break the dam — governments will demand it from everyone.

And by the time we find out it was a mistake, it will be way too late.
