Grasping Kubernetes Networking (Mega Thread)

- What is Kubernetes Service?

- When to use ClusterIP, NodePort, or LoadBalancer?

- How does multi-cluster service work?

- Why both Ingress and Ingress Controller?

The answers become clear when things are explained bottom-up! 🔽

1. Low-level Kubernetes Networking Guarantees

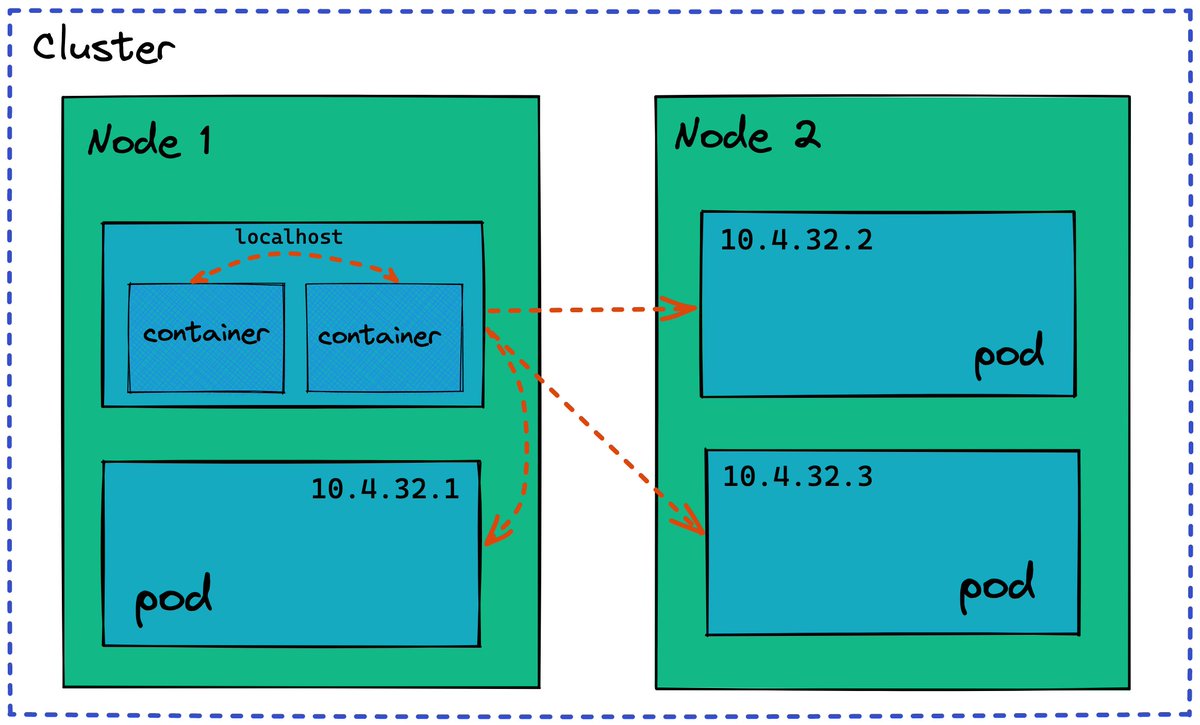

To make Pods mimic traditional VMs, Kubernetes defines its networking model as follows:

- Every Pod gets its own IP address

- Pods talk to other Pods directly (no visible source NAT)

- Containers in a pod communicate via localhost
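
A rough sketch of what "every Pod gets its own IP" can look like in practice. The cluster Pod CIDR, the per-node `/24` slices, and the helper names are all assumptions for illustration; real allocation is done by the networking plugin and may differ.

```python
import ipaddress

# Hypothetical cluster-wide Pod network and per-node slice size.
CLUSTER_POD_CIDR = ipaddress.ip_network("10.244.0.0/16")
NODE_PREFIX_LEN = 24


def node_pod_cidrs(node_names):
    """Give every node its own non-overlapping slice of the Pod network."""
    subnets = CLUSTER_POD_CIDR.subnets(new_prefix=NODE_PREFIX_LEN)
    return {name: next(subnets) for name in node_names}


def allocate_pod_ips(node_cidr, pod_names):
    """Hand out one address per Pod from the node's slice."""
    hosts = node_cidr.hosts()
    return {pod: next(hosts) for pod in pod_names}


cidrs = node_pod_cidrs(["node-a", "node-b"])
pods_a = allocate_pod_ips(cidrs["node-a"], ["web-1", "web-2"])
pods_b = allocate_pod_ips(cidrs["node-b"], ["db-1"])
print(pods_a)
print(pods_b)
```

Because the slices never overlap, a Pod IP is unique cluster-wide, so Pods can address each other directly without translation.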

2. Kubernetes does nothing for low-level networking!

It delegates the implementation to Container Runtimes and networking plugins.

A typical example: CRI-O (the container runtime) connects the pods on a node to a shared Linux bridge; flannel (the networking plugin) puts the nodes into an overlay network.
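
To make the bridge-plus-overlay idea concrete, here is a toy forwarding decision. The subnet-to-node table, the addresses, and `next_hop` are hypothetical; this is not flannel's actual code, only the general shape of the lookup.

```python
import ipaddress

# Hypothetical state an overlay plugin could keep on every node:
# which Pod subnet lives behind which node address.
POD_SUBNET_TO_NODE = {
    ipaddress.ip_network("10.244.0.0/24"): "192.168.1.10",
    ipaddress.ip_network("10.244.1.0/24"): "192.168.1.11",
}


def next_hop(local_node_ip, dst_pod_ip):
    """Decide how a packet to dst_pod_ip leaves a Pod on local_node_ip."""
    dst = ipaddress.ip_address(dst_pod_ip)
    for subnet, node_ip in POD_SUBNET_TO_NODE.items():
        if dst in subnet:
            if node_ip == local_node_ip:
                # Same node: the shared Linux bridge delivers the packet.
                return "deliver via local bridge"
            # Other node: wrap the packet and send it over the overlay.
            return f"encapsulate and send to node {node_ip}"
    return "not a Pod address; use the default route"


print(next_hop("192.168.1.10", "10.244.0.7"))
print(next_hop("192.168.1.10", "10.244.1.7"))
```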

3. Kubernetes Service Resource and ClusterIP

Web services normally have human-readable names. A typical web service also consists of multiple endpoints (VMs or pods).

A Kubernetes Service resource puts ephemeral pods into a named group and assigns a ClusterIP to that group.
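
The grouping is driven by a label selector. A minimal sketch of the matching logic, assuming a simplified pod record (`labels`, `ip`, `ready`) and a hypothetical `select_endpoints` helper:

```python
# Simplified, made-up pod records; real Pod objects carry far more fields.
PODS = [
    {"name": "web-1", "ip": "10.244.0.5", "ready": True,
     "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "ip": "10.244.1.7", "ready": True,
     "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-3", "ip": "10.244.1.8", "ready": False,
     "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "ip": "10.244.1.9", "ready": True,
     "labels": {"app": "db"}},
]


def select_endpoints(pods, selector):
    """Return IPs of ready pods whose labels include every selector pair."""
    return sorted(
        pod["ip"]
        for pod in pods
        if pod["ready"] and selector.items() <= pod["labels"].items()
    )


print(select_endpoints(PODS, {"app": "web"}))
```

Pods come and go, but the selector stays the same, so the group behind the service name keeps itself up to date.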

4. Kubernetes comes with its own Service Discovery

When you call a service by its domain name, the cluster DNS (KubeDNS) resolves the name to the service's ClusterIP. But the ClusterIP is virtual! Egress traffic to this IP is intercepted on the source node and redirected to one of the service's pods.
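
Both steps can be modeled in a few lines. The addresses, the lookup tables, and the function names below are made up for illustration; on a real node the rewrite is typically done in the kernel (for example by rules that kube-proxy programs), not in Python.

```python
import random


def service_fqdn(name, namespace="default", cluster_domain="cluster.local"):
    """Build the conventional in-cluster DNS name of a service."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


# Hypothetical DNS records and ClusterIP -> backend table.
DNS_RECORDS = {service_fqdn("web"): "10.96.0.15"}
SERVICE_BACKENDS = {
    ("10.96.0.15", 80): [("10.244.0.5", 8080), ("10.244.1.7", 8080)],
}


def resolve(fqdn):
    """Step 1: the cluster DNS turns the name into a ClusterIP."""
    return DNS_RECORDS[fqdn]


def rewrite_destination(dst_ip, dst_port, rng=random):
    """Step 2: the source node swaps the virtual IP for a real pod address."""
    backends = SERVICE_BACKENDS.get((dst_ip, dst_port))
    if backends is None:
        return dst_ip, dst_port  # not a service address, leave untouched
    return rng.choice(backends)


cluster_ip = resolve(service_fqdn("web"))
print(cluster_ip, "->", rewrite_destination(cluster_ip, 80))
```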

5. Exposing Kubernetes Service (I)

Pod-to-Pod and Pod-to-Service networking work only within a cluster. From outside the cluster, it's rarely possible to access a pod by its IP.

But it's possible to map a ClusterIP to a port on every node using a Service with type NodePort.
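
A toy model of that mapping. The node IPs, the chosen port, and `handle_external` are assumptions for the sketch; the 30000-32767 range is the Kubernetes default for NodePort allocation.

```python
# Hypothetical node addresses reachable from outside the cluster.
NODE_IPS = {"192.168.1.10", "192.168.1.11"}

# Default NodePort allocation range (30000-32767 inclusive).
NODE_PORT_RANGE = range(30000, 32768)

# Hypothetical mapping: node port -> (ClusterIP, service port).
NODE_PORTS = {30080: ("10.96.0.15", 80)}


def handle_external(dst_ip, dst_port):
    """Map traffic hitting <any node IP>:<node port> to a service."""
    if dst_ip not in NODE_IPS or dst_port not in NODE_PORT_RANGE:
        return None
    return NODE_PORTS.get(dst_port)


# The same port works on every node, whether or not a service pod runs there.
print(handle_external("192.168.1.10", 30080))
print(handle_external("192.168.1.11", 30080))
```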

6. Exposing Kubernetes Service (II)

No one likes services hanging on random ports. Service type LoadBalancer allows assigning a public IP address to an in-cluster service. But it must be implemented by the platform provider.

The load balancer can send traffic to a NodePort or directly to Pod IPs.
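
On the manifest level, the three service types discussed so far differ only in `spec.type`. A small sketch that builds such a manifest as JSON (which `kubectl apply -f` accepts alongside YAML); the service name, labels, and ports are placeholders, and `service_manifest` is a hypothetical helper:

```python
import json

SUPPORTED_TYPES = {"ClusterIP", "NodePort", "LoadBalancer"}


def service_manifest(name, selector, port, target_port, service_type="ClusterIP"):
    """Build a minimal v1 Service manifest of the given type."""
    if service_type not in SUPPORTED_TYPES:
        raise ValueError(f"unsupported service type: {service_type}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": selector,
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


manifest = service_manifest("web", {"app": "web"}, 80, 8080, "LoadBalancer")
print(json.dumps(manifest, indent=2))
```

With `LoadBalancer`, the external address is filled in by the platform, so the manifest itself stays this small.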

7. Exposing HTTP(S) Services

NodePort and LoadBalancer services work on L4. Thus, they don't understand HTTP routing, cannot do SSL termination, etc.

An Ingress Resource describes a public L7 load balancer forwarding HTTP traffic to one or more in-cluster Kubernetes Services.
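
What "understanding HTTP routing" means can be sketched as a host-plus-path lookup. The hostnames, service names, and the `route` helper are invented for the example, and the matching below is a simplified take on prefix matching by path element with longest-prefix-wins.

```python
# Hypothetical L7 rules: (host, path prefix, backend service, service port).
RULES = [
    ("shop.example.com", "/api", "api-svc", 8080),
    ("shop.example.com", "/", "frontend-svc", 80),
]


def path_matches(prefix, path):
    """Prefix match on whole path elements ('/api' does not match '/apiary')."""
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def route(host, path):
    """Pick the backend for an HTTP request; the longest matching prefix wins."""
    candidates = [
        (prefix, service, port)
        for rule_host, prefix, service, port in RULES
        if rule_host == host and path_matches(prefix, path)
    ]
    if not candidates:
        return None
    _, service, port = max(candidates, key=lambda c: len(c[0]))
    return service, port


print(route("shop.example.com", "/api/v1/items"))
print(route("shop.example.com", "/cart"))
```

An L4 load balancer only sees IPs and ports, so it could not tell these two requests apart.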

8. Kubernetes Ingress Controllers

As usual, Kubernetes doesn't come with its own implementation of Ingress.

Kubernetes defines the Ingress Resource and expects platform providers to implement a corresponding controller to do the actual request handling.
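
The split between resource and controller can be illustrated with a toy reconcile loop. The manifest follows the `networking.k8s.io/v1` Ingress shape, but the hostnames, service names, and the `ToyIngressController` class are hypothetical; real controllers watch the API server and reconfigure an actual proxy.

```python
def build_routes(ingresses):
    """Flatten Ingress objects into {(host, path): (service, port)}."""
    routes = {}
    for ingress in ingresses:
        for rule in ingress["spec"].get("rules", []):
            host = rule.get("host", "*")
            for p in rule["http"]["paths"]:
                svc = p["backend"]["service"]
                routes[(host, p["path"])] = (svc["name"], svc["port"]["number"])
    return routes


class ToyIngressController:
    """Keeps a proxy routing table in sync with the Ingress objects it sees."""

    def __init__(self):
        self.ingresses = {}
        self.routes = {}

    def on_event(self, event_type, ingress):
        name = ingress["metadata"]["name"]
        if event_type == "DELETED":
            self.ingresses.pop(name, None)
        else:  # "ADDED" or "MODIFIED"
            self.ingresses[name] = ingress
        # Rebuild the whole table; a real controller would reload its proxy here.
        self.routes = build_routes(self.ingresses.values())


SHOP_INGRESS = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "shop"},
    "spec": {"rules": [{
        "host": "shop.example.com",
        "http": {"paths": [{
            "path": "/api",
            "pathType": "Prefix",
            "backend": {"service": {"name": "api-svc", "port": {"number": 8080}}},
        }]},
    }]},
}

controller = ToyIngressController()
controller.on_event("ADDED", SHOP_INGRESS)
print(controller.routes)
```

Without a controller running, the Ingress object is just stored data: nothing listens for requests.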

9. Kubernetes and Service Mesh

A Service Mesh (SM) transparently expands Kubernetes capabilities:

- Relies on Service resources

- Doesn't require a change on the app side

- Replaces kube-proxy for Service Discovery

- Brings multi-cluster services 🔥

- Provides Ingress Controller and/or Gateway

That's probably it for Kubernetes networking!

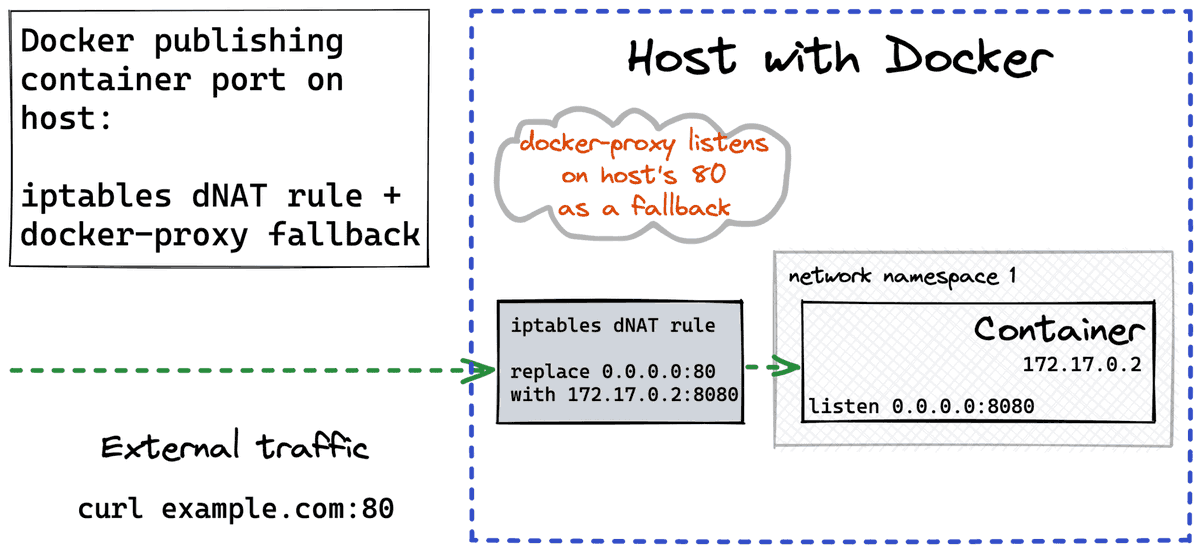

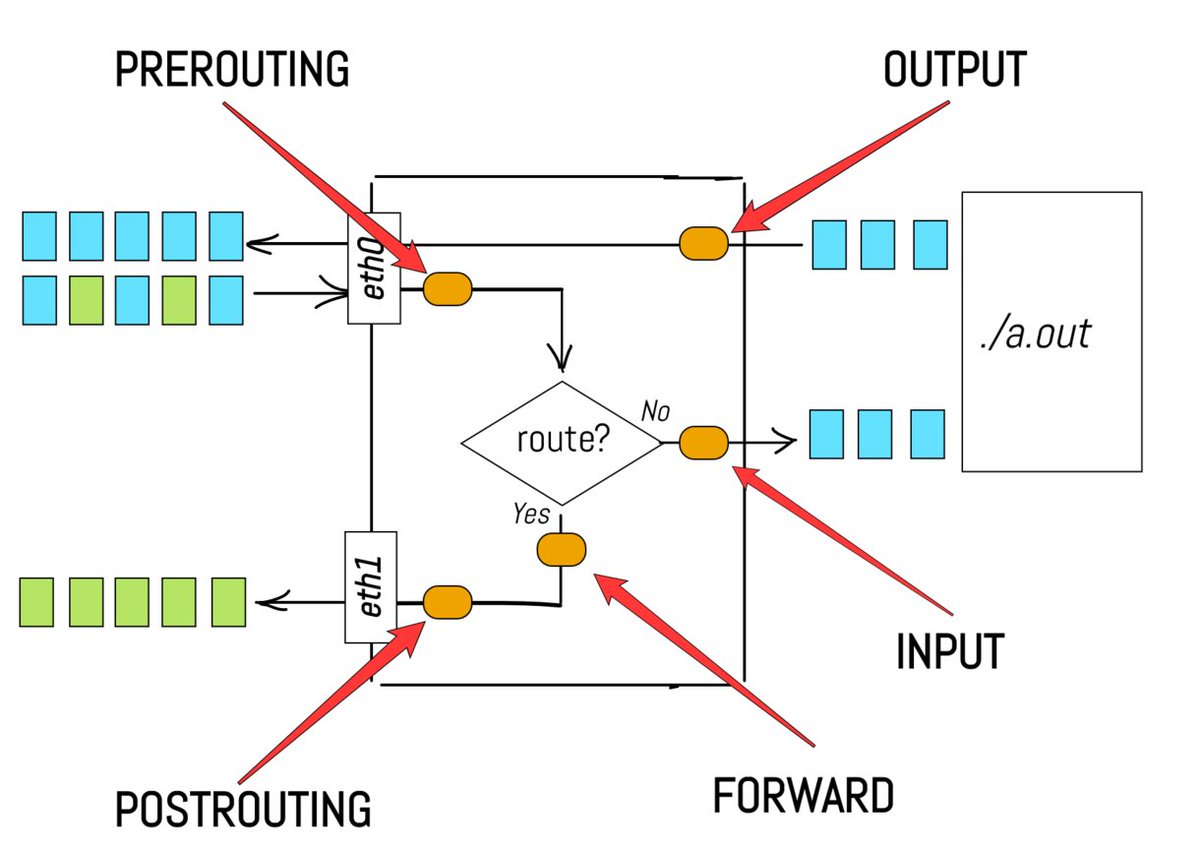

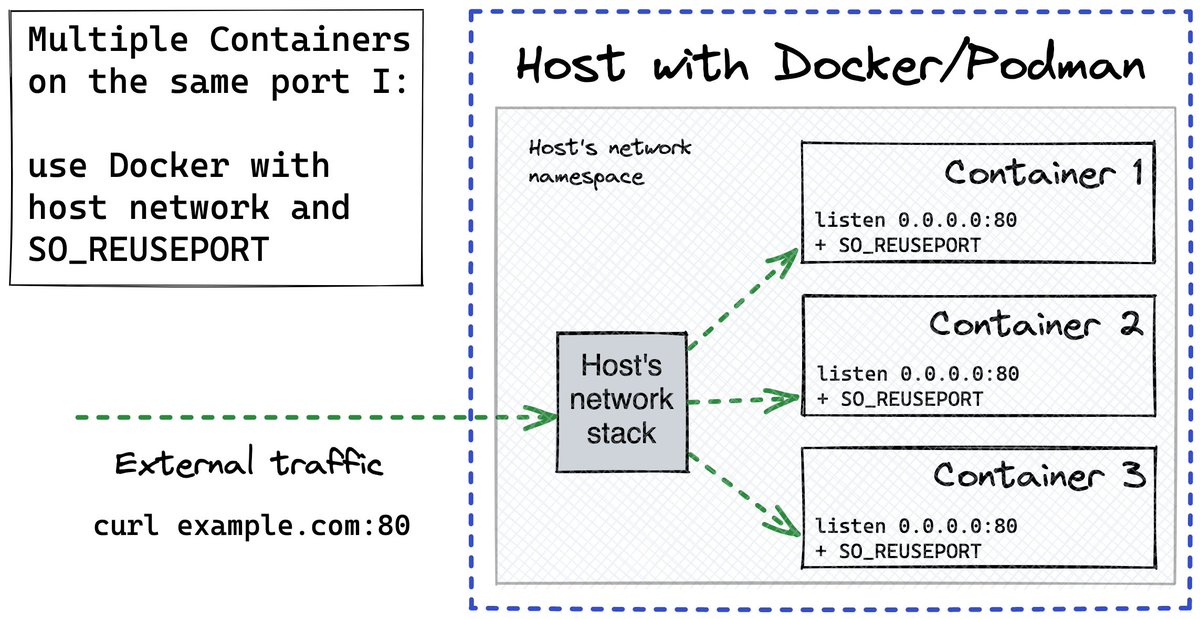

For more details on single-node container networking, check out this article:

iximiuz.com/en/posts/conta…

For the details of Service Discovery implementation in Kubernetes, check out this article.

iximiuz.com/en/posts/servi…

For the Service Mesh basics - the Proxy Sidecar Pattern, check out this article.

iximiuz.com/en/posts/servi…
