I'm happy to announce that our paper "The Devil is in the Detail: Simple Tricks Improve Systematic Generalization of Transformers" has been accepted to #EMNLP2021!

paper: arxiv.org/abs/2108.12284

code: github.com/robertcsordas/…

1/4

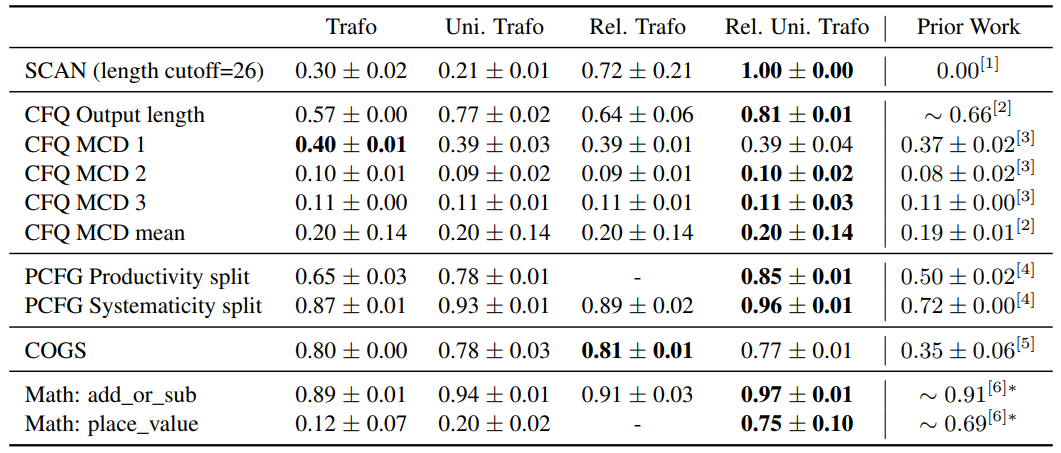

We improve the systematic generalization of Transformers on SCAN (0 -> 100% with length cutoff=26), CFQ (66 -> 81% on output length split), PCFG (50 -> 85% on productivity split, 72 -> 96% on systematicity split), COGS (35 -> 81%), and the Mathematics dataset.

2/4

We achieve these large improvements by revisiting model configurations as basic as the scaling of embeddings, early stopping, relative positional embeddings, and weight sharing (Universal Transformers).

3/4
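To illustrate why something as basic as embedding scaling can matter, here is a minimal Python sketch (not the authors' code; all function names are hypothetical). It measures how large a token embedding is relative to a standard sinusoidal positional encoding under a few initialization and scaling choices. The init distributions and scale factors are assumptions chosen for illustration. N(0, 1) is, for example, PyTorch's default `nn.Embedding` init, and multiplying token embeddings by sqrt(d_model) follows the original Transformer. The configurations the paper actually compares are described in the paper.

```python
import math
import random


def sinusoidal_encoding(pos: int, d_model: int) -> list[float]:
    """Sinusoidal positional encoding (Vaswani et al., 2017) for a single position."""
    enc = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 share the frequency 1 / 10000^(2k / d_model):
        # even dimensions use sin, odd dimensions use cos.
        angle = pos / (10000 ** (2 * (i // 2) / d_model))
        enc.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return enc


def l2(v: list[float]) -> float:
    """Euclidean norm of a vector."""
    return math.sqrt(sum(x * x for x in v))


def embed(token_vec: list[float], pos: int,
          token_scale: float = 1.0, pos_scale: float = 1.0) -> list[float]:
    """Transformer input: token_scale * token embedding + pos_scale * positional encoding."""
    pe = sinusoidal_encoding(pos, len(token_vec))
    return [token_scale * t + pos_scale * p for t, p in zip(token_vec, pe)]


def token_to_position_ratio(d_model: int, init_std: float,
                            token_scale: float = 1.0, pos_scale: float = 1.0,
                            pos: int = 7, seed: int = 0) -> float:
    """Norm of the (scaled) token part divided by the norm of the (scaled) positional part."""
    rng = random.Random(seed)
    # Hypothetical freshly initialized token embedding drawn from N(0, init_std^2).
    tok = [rng.gauss(0.0, init_std) for _ in range(d_model)]
    pe = sinusoidal_encoding(pos, d_model)
    return (token_scale * l2(tok)) / (pos_scale * l2(pe))


if __name__ == "__main__":
    d = 256
    small = 1 / math.sqrt(d)
    # Illustrative init/scaling combinations (assumptions, not the paper's exact settings).
    configs = {
        "N(0, 1) init, no scaling": dict(init_std=1.0),
        "N(0, 1/d) init, no scaling": dict(init_std=small),
        "N(0, 1/d) init, tokens * sqrt(d)": dict(init_std=small, token_scale=math.sqrt(d)),
        "N(0, 1/d) init, positions / sqrt(d)": dict(init_std=small, pos_scale=small),
    }
    for name, cfg in configs.items():
        ratio = token_to_position_ratio(d, **cfg)
        print(f"{name:38s} token/position norm ratio = {ratio:.2f}")
```

The sinusoidal encoding always has norm sqrt(d_model / 2), about 11.3 for d_model = 256. With small-variance init and no rescaling, the positional signal is therefore roughly ten times larger than the token signal. Either rescaling restores the ratio of the N(0, 1) case (about sqrt(2)). The two rescaling variants give the same ratio but differ by an overall factor of sqrt(d_model) in input magnitude, which can interact with normalization and optimization.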

We also show that relative positional embeddings largely mitigate the EOS decision problem.

Importantly, differences between these models are typically invisible on the IID data split. This calls for proper validation sets that test generalization.

4/4
