After you train a machine learning model, the BEST way to showcase it to the world is to make a demo for others to try your model!

Here is a quick thread 🧵 on two of the easiest ways to make a demo for your machine learning model:

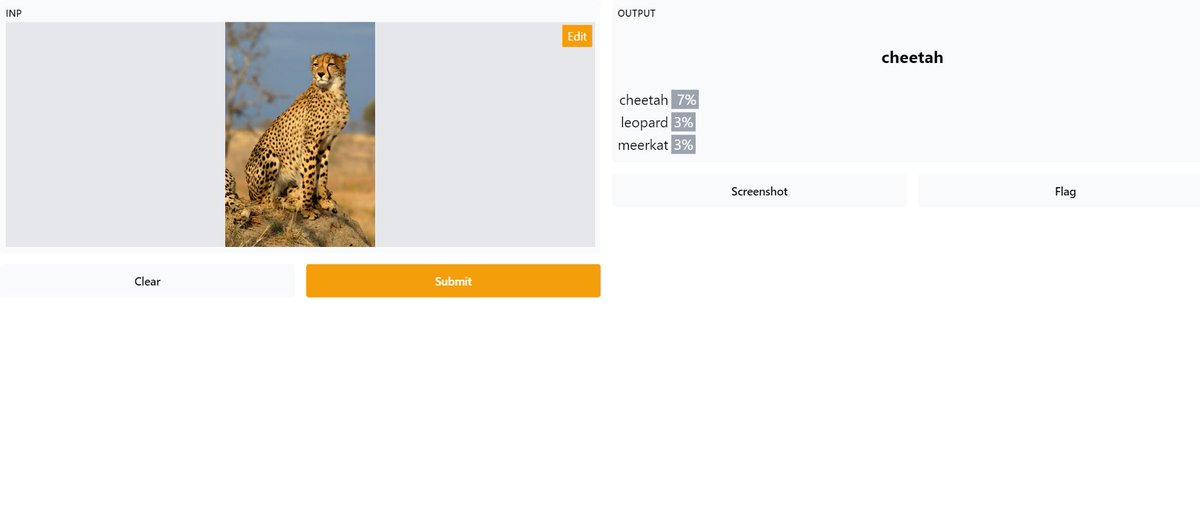

Currently, Gradio is probably the fastest way to set up a machine learning demo ⚡

A couple of lines of code are enough to wrap your inference code in a beautiful demo that you can share with the world.

Learn more here → gradio.app
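
To give a feel for how little code that is, here is a minimal sketch. The `predict_sentiment` function is a hypothetical stand-in for your own inference code (a toy keyword rule, not a real model), and `build_demo` is just a helper name chosen for this example:

```python
def predict_sentiment(text: str) -> str:
    """Hypothetical stand-in for your model's inference code."""
    positive_words = {"good", "great", "love", "amazing"}
    # Normalize each word: strip basic punctuation and lowercase it.
    words = {w.strip(".,!?").lower() for w in text.split()}
    return "positive" if words & positive_words else "negative"


def build_demo():
    """Wrap the inference function in a Gradio interface."""
    import gradio as gr  # requires `pip install gradio`

    # "text" is Gradio's string shortcut for a textbox component.
    return gr.Interface(fn=predict_sentiment, inputs="text", outputs="text")


# To start the app locally: build_demo().launch()
# Passing share=True to launch() requests a temporary public link.
```

Swapping in a real model should only mean replacing the body of `predict_sentiment` and picking input/output components that match your data (for example, images instead of text).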

Using Gradio, I was able to quickly make this demo of my CycleGAN package (screenshot was taken using Gradio's built-in functionality!):

upit-cyclegan.herokuapp.com

An alternative and more general tool for making web apps for data science and machine learning scripts/tools is Streamlit.

Learn more here → streamlit.io

When is Gradio better than Streamlit?

Gradio is designed specifically for creating interfaces for machine learning models, and it takes ~5 min to get an app running!

It also includes useful features like interpretability and flagging of unexpected model behavior, which makes it quite useful for gaining more insight into your models.

That said, Streamlit is a highly flexible and customizable Python-based UI framework that also supports third-party components, which extend what's possible with Streamlit.

Additionally, Streamlit includes useful features like caching that allow you to develop performant apps!
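
As a rough illustration of the caching point, here is a small sketch. It assumes a recent Streamlit release that provides `st.cache_data`; `load_predictions` is a hypothetical slow step standing in for loading a model or dataset, and the widget labels are made up for the example:

```python
import time


def load_predictions(n_rows: int) -> list[dict]:
    """Hypothetical slow step, standing in for loading a model or dataset."""
    time.sleep(0.1)  # simulate expensive work
    return [{"row": i, "score": round(i / n_rows, 2)} for i in range(n_rows)]


def main():
    import streamlit as st  # requires `pip install streamlit`

    # st.cache_data stores results per argument value, so a rerun with an
    # n_rows value that was already computed skips the slow call.
    cached_load = st.cache_data(load_predictions)

    st.title("Model demo")
    n_rows = st.slider("Rows to score", min_value=1, max_value=50, value=10)
    st.dataframe(cached_load(n_rows))


# Save as app.py, add a final line calling main(), then run: streamlit run app.py
```

Streamlit reruns the whole script on every widget interaction, which is why caching the expensive step matters so much for responsiveness.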

So, in my opinion, it's worth learning both tools so you can create amazing demos for your machine learning projects.

If you found this thread useful, consider following me for more ML- and STEM-related content!
