Last week I trained a machine learning model using 100% of the data.

Then I used the model to predict the labels on the same dataset I used to train it.

I'm not kidding. Hear me out: ↓

Does this sound crazy?

Yes.

Would I be losing my shit if I heard that somebody did this?

Yes.

So what's going on?

I have a dataset with a single numerical feature and a binary target.

I need to know the threshold that best separates the positive samples from the negative ones.

I don't want a model to make predictions; I just need to know the threshold.

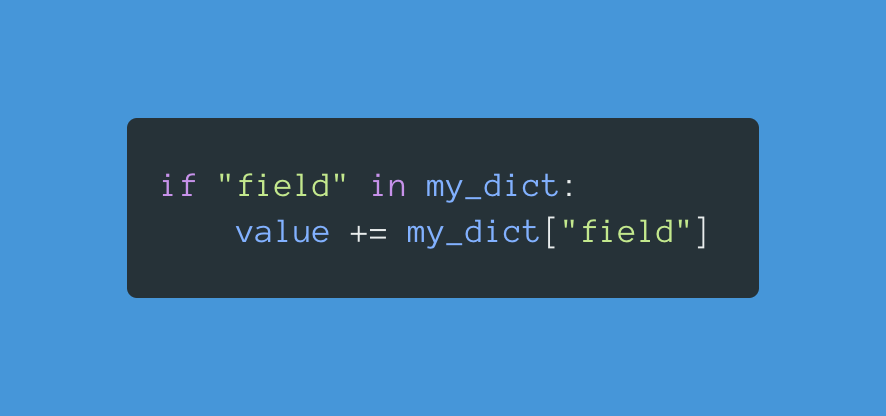

There are a bazillion ways to find this threshold. Attached you can see one of them.

Fit a DecisionTreeClassifier on the data and print the threshold.

That's it.
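This is not the code in the screenshot. It's a minimal, dependency-free sketch of what a depth-1 `DecisionTreeClassifier` (a decision stump with the default Gini criterion) does when it picks its single threshold: sort the values, try the midpoint between each pair of consecutive distinct values, and keep the one with the lowest weighted Gini impurity. The names `gini` and `best_threshold` are made up for illustration.

```python
def gini(pos: int, total: int) -> float:
    """Gini impurity of a node holding `pos` positives out of `total` samples."""
    if total == 0:
        return 0.0
    p = pos / total
    return 2.0 * p * (1.0 - p)


def best_threshold(values: list[float], labels: list[int]) -> float:
    """Return the split a depth-1 tree would pick using Gini impurity.

    Candidate thresholds are midpoints between consecutive distinct sorted
    values; samples with value <= threshold go to the left child.
    """
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    total_pos = sum(label for _, label in pairs)
    best_score, best_t = float("inf"), None
    left_pos = 0  # positives among pairs[0..i]
    for i in range(n - 1):
        left_pos += pairs[i][1]
        # A threshold can only fall between two distinct values.
        if pairs[i][0] == pairs[i + 1][0]:
            continue
        left_n = i + 1
        right_n = n - left_n
        right_pos = total_pos - left_pos
        # Weighted impurity of the two children; lower is a cleaner split.
        score = (left_n * gini(left_pos, left_n) + right_n * gini(right_pos, right_n)) / n
        if score < best_score:
            best_score = score
            best_t = (pairs[i][0] + pairs[i + 1][0]) / 2.0
    if best_t is None:
        raise ValueError("need at least two distinct values to split")
    return best_t


values = [1.0, 2.0, 3.0, 7.0, 8.0, 9.0]
labels = [0, 0, 0, 1, 1, 1]
print(best_threshold(values, labels))  # 5.0
```

With scikit-learn installed, the equivalent would be something like `DecisionTreeClassifier(max_depth=1).fit(X, y).tree_.threshold[0]`, with `X` shaped `(n_samples, 1)`. It should land on the same split, although tie-breaking and scikit-learn's internal float32 conversion can make the printed number differ slightly.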

Remember, I don't need this model to do anything else. I don't care about validating it or anything like that.

Of course, I used 100% of the data for this.

And then I had to answer an important question:

Once I had the threshold, I needed to determine its precision and recall on the data.

How good was that threshold at separating the positive from the negative examples?

Let's compute that.
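Computing those two numbers on the same data takes only a few lines. Here's a sketch, assuming positives sit above the threshold (flip the comparison if your data is the other way around); `precision_recall` is a hypothetical helper name. The goal is to describe how well one number splits this particular dataset, not to estimate how a model generalizes, which is why reusing the training data is acceptable here.

```python
def precision_recall(values, labels, threshold):
    """Precision and recall of the rule `value > threshold -> positive`.

    Assumes positives sit above the threshold.
    """
    tp = fp = fn = 0
    for v, y in zip(values, labels):
        predicted = 1 if v > threshold else 0
        if predicted == 1 and y == 1:
            tp += 1  # correctly flagged positive
        elif predicted == 1 and y == 0:
            fp += 1  # negative wrongly flagged
        elif predicted == 0 and y == 1:
            fn += 1  # positive we missed
    # Guard against division by zero when a side is empty.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


values = [1, 2, 3, 4, 5, 6]
labels = [0, 0, 1, 0, 1, 1]
print(precision_recall(values, labels, 2.5))  # (0.75, 1.0)
```

If scikit-learn is available, `sklearn.metrics.precision_score` and `recall_score` applied to the thresholded predictions give the same numbers.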

So yeah, after training with 100% of the data, I used the model to predict the targets of the same data.

Sounds egregious, but it isn't.

This experience taught me something important:

Whatever preconceptions I have, the best approach is always to put them aside, be ready to be wrong, and be open to learning something new.

I had many numerical values. Some of them corresponded to positive examples, some of them to negative examples.

I wanted to find the value (threshold) that best separated the positive values from the negative ones.

That was the problem.

https://twitter.com/edkesuma/status/1447538233848463365?s=20

Here is the process that should give a little bit more context around this application:

1. Bootstrap the threshold ← This is what I explained in this thread.

2. Collect more data

3. Label some of that data (~20%)

4. Recompute the threshold using new labeled data

5. Repeat
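The loop above can be sketched as a small simulation. Everything in it is hypothetical: `collect_batch` stands in for whatever produces new values (here two Gaussians, negatives around 0 and positives around 3), `label_some` stands in for the manual labeling step, and `gini_split_threshold` is a compact pure-Python stand-in for fitting a depth-1 tree with Gini impurity.

```python
import random


def gini_split_threshold(values, labels):
    """Depth-1 tree style split: midpoint minimizing weighted Gini impurity."""
    pairs = sorted(zip(values, labels))
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    n = len(xs)

    def gini(group):
        if not group:
            return 0.0
        p = sum(group) / len(group)
        return 2.0 * p * (1.0 - p)

    best = None
    for i in range(1, n):
        if xs[i - 1] == xs[i]:
            continue  # no threshold fits between equal values
        score = (i * gini(ys[:i]) + (n - i) * gini(ys[i:])) / n
        if best is None or score < best[0]:
            best = (score, (xs[i - 1] + xs[i]) / 2.0)
    if best is None:
        raise ValueError("need at least two distinct values to split")
    return best[1]


def collect_batch(rng, size):
    """Hypothetical data source yielding (value, true_label) pairs.

    True labels ride along only to simulate the labeler; in practice
    they are unknown until someone labels the sample.
    """
    batch = []
    for _ in range(size):
        label = rng.random() < 0.5
        value = rng.gauss(3.0 if label else 0.0, 1.0)
        batch.append((value, int(label)))
    return batch


def label_some(batch, fraction, rng):
    """Hypothetical labeling step: only a random `fraction` gets labeled."""
    k = max(1, round(len(batch) * fraction))
    return rng.sample(batch, k)


def run_loop(rounds=5, batch_size=200, fraction=0.2, seed=0):
    rng = random.Random(seed)
    # 1. Bootstrap the threshold from an initial labeled set.
    labeled = collect_batch(rng, batch_size)
    threshold = gini_split_threshold(*zip(*labeled))
    history = [threshold]
    for _ in range(rounds):
        batch = collect_batch(rng, batch_size)       # 2. Collect more data
        labeled += label_some(batch, fraction, rng)  # 3. Label ~20% of it
        threshold = gini_split_threshold(*zip(*labeled))  # 4. Recompute
        history.append(threshold)                    # 5. Repeat
    return history


print(run_loop())
```

`run_loop()` returns the threshold after the bootstrap step and after each round. This sketch recomputes on everything labeled so far; recomputing on only the newest labels is the other reasonable reading of step 4.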
