A big part of my work is to build computer vision models to recognize things.

It's usually ordinary stuff: An antenna, a fire extinguisher, a bag, a ladder.

Here is a trick I use to solve some of these problems.

The good news about having to recognize everyday objects:

There are a ton of pre-trained models that help with that. You can start with one of these models and get decent results out of the box.

This is important. I'll come back to it in a second.

Many of the use cases I tackle are about using machine learning to "augment" the people doing the work.

Let's say you have a team looking at drone footage to find squirrels. Eight hours every day looking at images.

This sucks. I can help with that.

(By the way, I haven't done anything with "squirrels." It's just a silly example I'm using on this thread, so bear with me.)

One thing many of these use cases have in common: You almost always prioritize recall.

Why is that?

The goal is to cut down the amount of work that people have to do. You want to make them more efficient, so you want to find interesting images for them to review.

Out of the bazillion images that a drone takes, we only care about those with squirrels in them.

We want high recall when finding squirrels. We don't care if that means sacrificing precision.

Here is a dream scenario:

We build a model that cuts down the amount of work people have to do by throwing away 90% of the images, all of which are squirrel-free.

People can now focus on only 10% of the images!

As long as we are including anything that may be a squirrel in that 10%, we are good.

(The 90%/10% split is just a hypothetical figure. Sometimes, even cutting 1% of the work is valuable.)
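
To make the recall-first goal concrete, here is a minimal Python sketch that scores a filtering model on labeled images. It reports recall (how many squirrels survive the filter), precision (how many flagged images really contain a squirrel), and the share of images people still have to review. The function name `triage_metrics` and the labels and predictions are hypothetical toy values, not results from a real model.

```python
def triage_metrics(labels, flagged):
    """Score a filter that decides which images people still review.

    labels:  True if the image really contains a squirrel.
    flagged: True if the model sends the image to a human.
    """
    tp = sum(1 for y, f in zip(labels, flagged) if y and f)
    fp = sum(1 for y, f in zip(labels, flagged) if not y and f)
    fn = sum(1 for y, f in zip(labels, flagged) if y and not f)

    recall = tp / (tp + fn) if tp + fn else 1.0     # squirrels we kept
    precision = tp / (tp + fp) if tp + fp else 1.0  # flagged images that are squirrels
    workload = sum(flagged) / len(flagged)          # share of images people still review
    return recall, precision, workload


# Toy data: 40 images, 2 of them contain a squirrel.
labels = [True, True] + [False] * 38
# The filter keeps both squirrels plus two false alarms and discards the rest.
flagged = [True] * 4 + [False] * 36

recall, precision, workload = triage_metrics(labels, flagged)
print(f"recall={recall:.2f} precision={precision:.2f} workload={workload:.0%}")
```

In this toy run the filter misses no squirrels and shrinks the review pile to 10% of the images, even though half of what it flags is a false alarm. That is the trade-off described above: precision suffers, but people still do a fraction of the work.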

Remember I mentioned that there are a ton of pre-trained models good at recognizing everyday objects?

This is how I use them:

First, any good solution will use a specialized model (trained explicitly to recognize squirrels). You can build this using transfer learning from a pre-trained model.

This is table stakes.

But there's another excellent way to use pre-trained models:

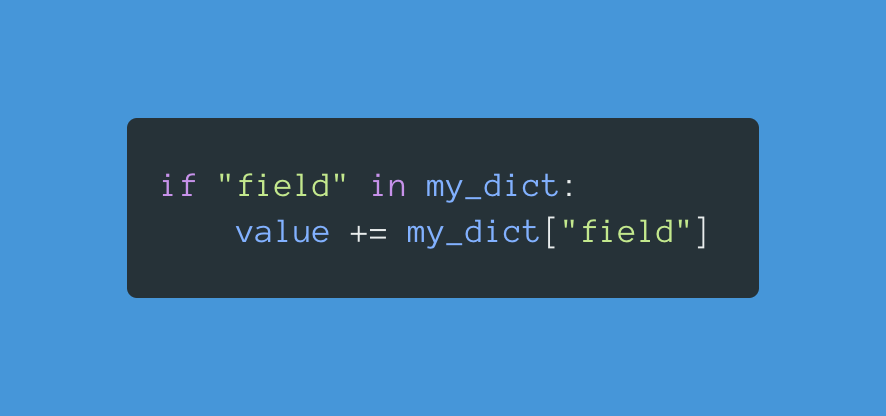

You can use a pre-trained model out of the box to regulate the precision and recall of your solution.

In other words: an ensemble of models that votes for the final answer.

Here is how this works:

Let's imagine we use 2 models:

• Model A is the classifier you trained to recognize squirrels.

• Model B is a pre-trained ResNet50 model that knows some stuff about squirrels. It's not as good as Model A, but it's not dumb either.

Now you run both models on your images.

Do you want to increase recall?

If at least one model sees a squirrel, you report the image as being a positive sample.

Do you want to increase precision?

You only report a positive sample if every model sees a squirrel.
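
The two voting rules fit in a few lines of Python. This sketch assumes each model's output has already been turned into a yes/no decision by thresholding its squirrel score; the image names, scores, and the 0.5 threshold are hypothetical values chosen for illustration.

```python
def vote_any(votes):
    """Recall-oriented rule: positive if at least one model sees a squirrel."""
    return any(votes)


def vote_all(votes):
    """Precision-oriented rule: positive only if every model sees a squirrel."""
    return all(votes)


# Hypothetical squirrel scores from Model A (your classifier) and
# Model B (the pre-trained model) for three images.
scores = {
    "img_001.jpg": (0.91, 0.72),
    "img_002.jpg": (0.35, 0.64),
    "img_003.jpg": (0.12, 0.08),
}
THRESHOLD = 0.5  # assumed per-model decision threshold

for name, (score_a, score_b) in scores.items():
    votes = (score_a >= THRESHOLD, score_b >= THRESHOLD)
    print(name, "any:", vote_any(votes), "all:", vote_all(votes))
```

`img_002.jpg` shows the difference: only Model B flags it, so the recall rule sends it to a human while the precision rule drops it.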

You can add more models to control your precision and recall better.

(In my experience, I've never gone beyond three models. There are diminishing returns with this.)
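
With more than two models, the "at least one" and "every model" rules become the two ends of a k-of-n vote: report a squirrel when at least k of the n models see one. Here is a minimal sketch, assuming three hypothetical models that each already produce a yes/no vote. With three models, k=2 is a simple majority that sits between the two extremes.

```python
def k_of_n(votes, k):
    """Positive if at least k models vote 'squirrel'.

    k=1 reproduces the 'at least one model' rule (favors recall);
    k=len(votes) reproduces the 'every model' rule (favors precision).
    """
    if not 1 <= k <= len(votes):
        raise ValueError("k must be between 1 and the number of models")
    return sum(votes) >= k


# Hypothetical votes from three models for one image.
votes = [True, False, True]
for k in (1, 2, 3):
    print(f"k={k}: {k_of_n(votes, k)}")
```

Each step up in k trades some recall for precision, so k becomes the knob you turn instead of retraining anything.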

The best part of this? You only train and worry about a single model and use everything else off the shelf.

A great candidate to recognize everyday objects? OpenAI's CLIP.

Holy shit, CLIP is good!

It's zero-shot: You don't need to spend a second training it, and it's excellent at helping your specialized model.
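
Zero-shot here means CLIP compares an image embedding with the embeddings of a few text prompts and picks the closest match, with no squirrel-specific training. The sketch below shows only that scoring step in plain Python. It assumes you already have embeddings from CLIP's image and text encoders; the 4-dimensional vectors and the prompts are made-up stand-ins (real CLIP embeddings are much larger, e.g. 512 dimensions for ViT-B/32), and the scale of 100 approximates CLIP's learned logit scale.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_probs(image_emb, text_embs, scale=100.0):
    """Softmax over scaled cosine similarities, as in CLIP zero-shot classification."""
    img = normalize(image_emb)
    logits = [scale * sum(a * b for a, b in zip(img, normalize(t))) for t in text_embs]
    m = max(logits)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Prompts you would encode with CLIP's text encoder (embeddings below are made up).
prompts = ["a drone photo of a squirrel", "a drone photo of an empty field"]
text_embs = [[0.9, 0.1, 0.3, 0.2], [0.1, 0.8, 0.2, 0.5]]
image_emb = [0.8, 0.2, 0.4, 0.1]  # made-up stand-in for an encoded drone image

probs = zero_shot_probs(image_emb, text_embs)
for prompt, p in zip(prompts, probs):
    print(f"{p:.3f}  {prompt}")

# Use CLIP as one voter: say 'squirrel' if the squirrel prompt wins.
clip_vote = probs[0] > probs[1]
```

In a real pipeline, `image_emb` and `text_embs` would come from the CLIP model itself, and `clip_vote` would be one of the votes combined with your specialized model's decision using the "at least one" or "every model" rule described above.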

Do you know what's even better than this?

You build a *really*, *really* good model that recognizes squirrels and potentially takes over all the work people are doing.

Why would you focus on doing 90% of the work when you can do 100% of the work?

That's, unfortunately, how many people are still approaching machine learning.

I get it. That's what all of us want. But money and time are finite resources.

The trick is to take it one step at a time. There's a lot of value we can reach with out-of-the-box solutions.

Now, I wish I could get the opportunity to build a squirrel-detector model.

One thing is for sure: If I do, I'll tell you about my experience building it.

This is me → @svpino. Follow me because you don't want to miss it.
