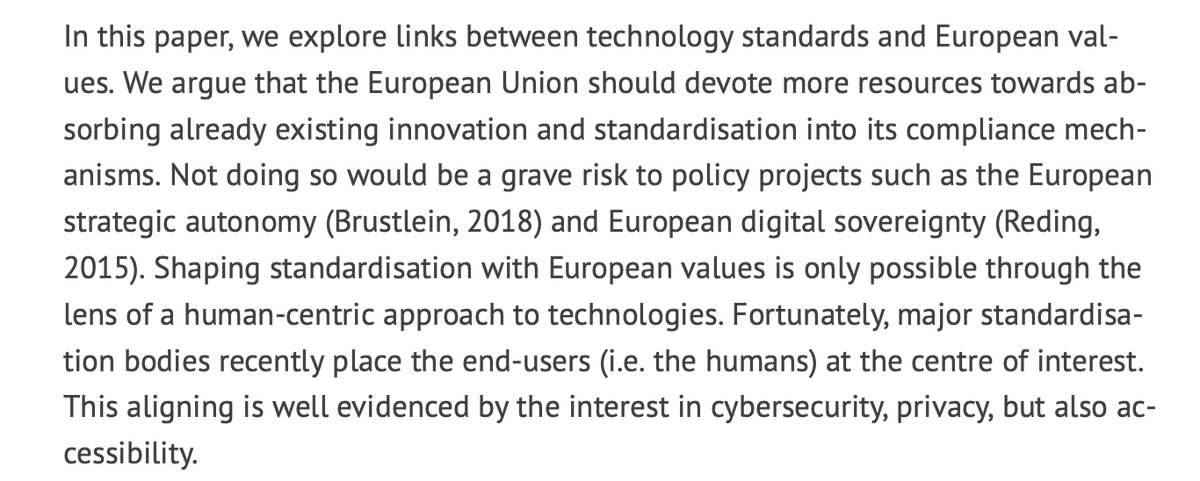

Our latest paper on technology standardisation is out in @PolicyR! Thanks @teirdes for the fabulous co-op. Some people doubt that values-inspired technology design is possible. We show that not only is it possible, but values already influence technology. policyreview.info/pdf/policyrevi…

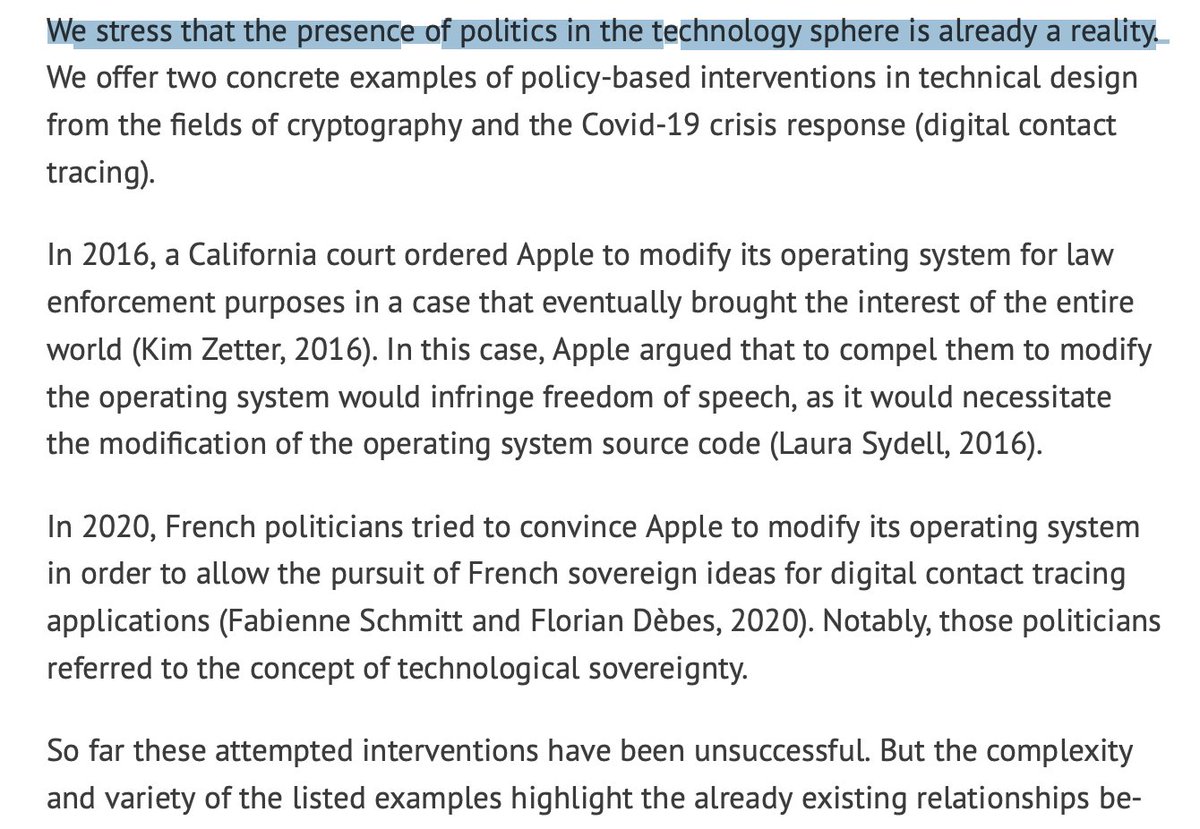

"Values" not only guide the building of technologies in aspects such as privacy or cybersecurity, accessibility, freedom of expression, or censorship. There are past examples of political-technology clashes/interventions, too. On-demand decryption or OS changes are examples.

"Human, moral, and European values are clearly linked to technology ... We stress that the presence of politics in the technology sphere is already a reality." True story! With examples from the U.S. and France.

There are also examples of failures. For example, the Do Not Track/Tracking Preferences Expression pollinated tech policy debates, but ultimately did not get backing and is now facing a crisis. But there are also many success stories. For example, Privacy by Design.

But the European Union is struggling with technology standards. Its approach is maladapted to today's world. GDPR and 2G are the well-known examples, but what else is there? There is a need for change, and a need for smart tech policy people to engage.

Europe can do better, though. We solve this puzzle. The European Union must simplify its current policy, needs a modern strategy of involvement in technology standards, and must realise how to practically structure its influence over technology standards.

I must also thank again probably my favourite former MEP, Amelia @teirdes. We both know a lot about technology standards. And about technology policy. This work is a direct outcome of our mix of fabulous experience and knowledge.

Direct link to our paper: policyreview.info/articles/analy…
