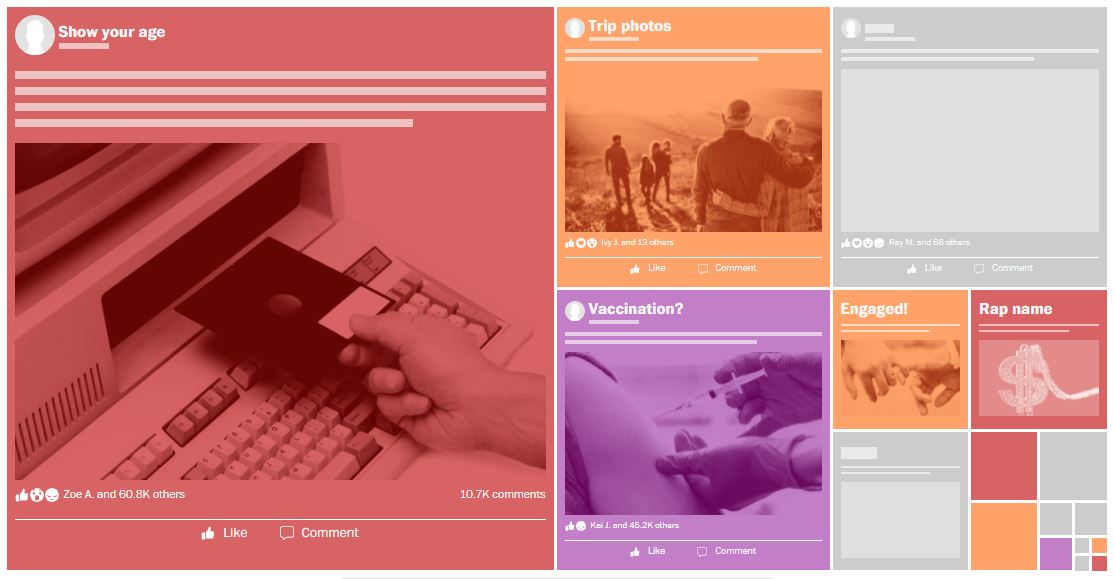

Internal documents reveal that Facebook has privately tracked real-world harms exacerbated by its platforms, ignored warnings from employees about the risks of its design decisions and exposed vulnerable communities around the world to dangerous content. wapo.st/3Et94VZ

Disclosed to the SEC by whistleblower Frances Haugen, the Facebook Papers were provided to Congress in redacted form by Haugen’s legal counsel.

Here are key takeaways from The Post’s investigation: wapo.st/3Et94VZ

Zuckerberg testified last year before Congress that the company removes 94 percent of the hate speech it finds.

But in internal documents, researchers estimated that the company was removing less than 5 percent of all hate speech on Facebook. wapo.st/3CeS6d9

During the run-up to the 2020 election, Facebook dialed up efforts to police content that promoted violence, misinformation and hate speech.

But after Nov. 6, Facebook rolled back many of the dozens of measures aimed at safeguarding U.S. users. wapo.st/3CeS6d9

According to one 2020 summary, the vast majority of Facebook's efforts against misinformation — 84 percent — went toward the United States, the documents show, with just 16 percent going to the “Rest of World,” including India, France and Italy. twitter.com/i/events/14522…

A 2019 report tracking a dummy account set up to represent a conservative mother in North Carolina found that Facebook’s recommendation algorithms led her to QAnon, an extremist ideology that the FBI has deemed a domestic terrorism threat, in five days. wapo.st/3CeS6d9

A mix of presentations, research studies, discussion threads and strategy memos, the Facebook Papers provide an unprecedented view into how executives at the social media giant weigh trade-offs between public safety and their own bottom line. washingtonpost.com/technology/202…

The internal debate over the “angry” emoji and the findings about its effects shed light on the highly subjective human judgments that underlie Facebook’s news feed algorithm. twitter.com/i/events/14529…

Facebook’s news feed algorithm has been blamed for fanning sectarian hatred, steering users toward extremism and conspiracy theories, and incentivizing politicians to take more divisive stands.

But how does it work, and what makes it so influential? twitter.com/i/events/14530…
