How to think about precision and recall:

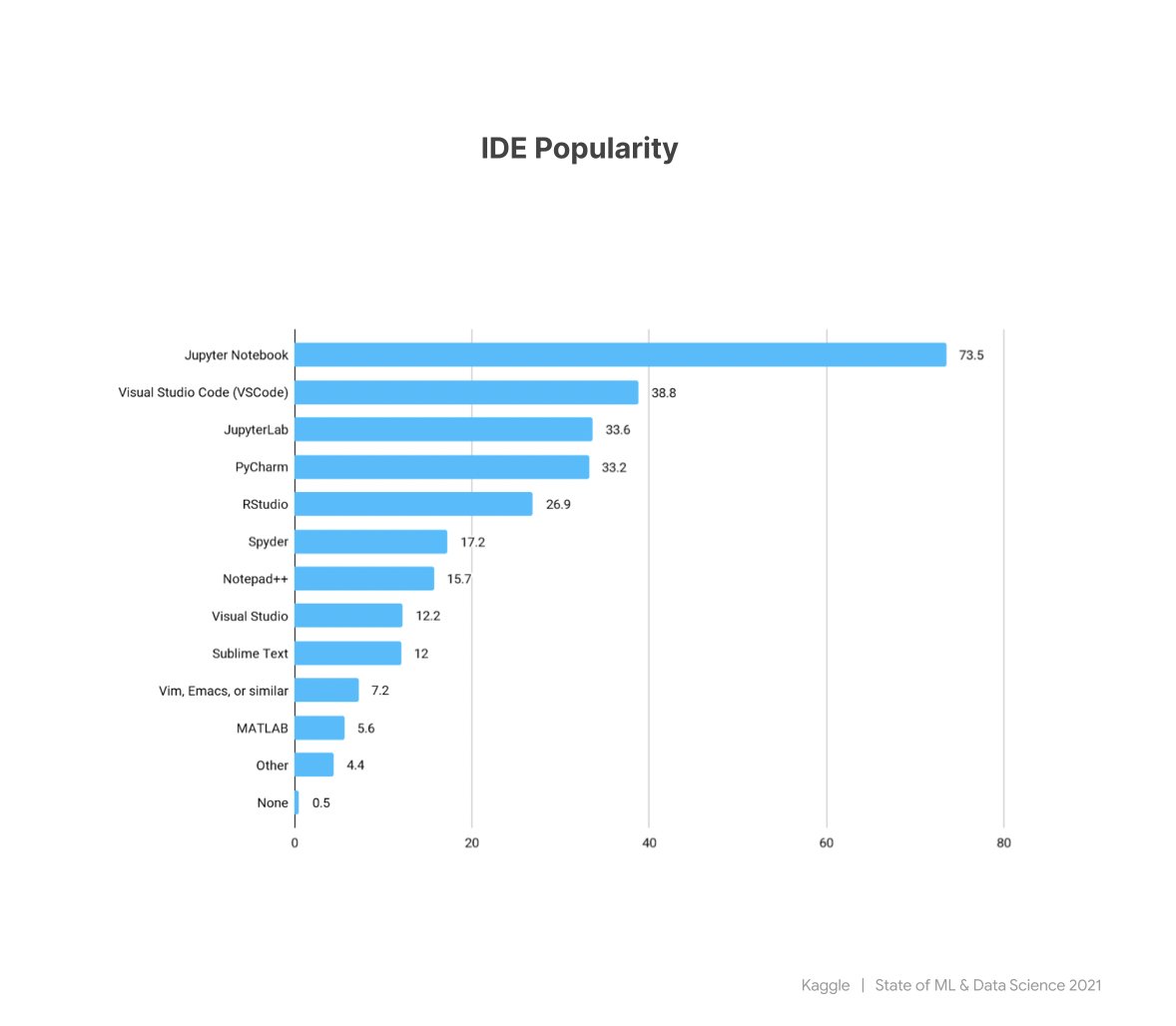

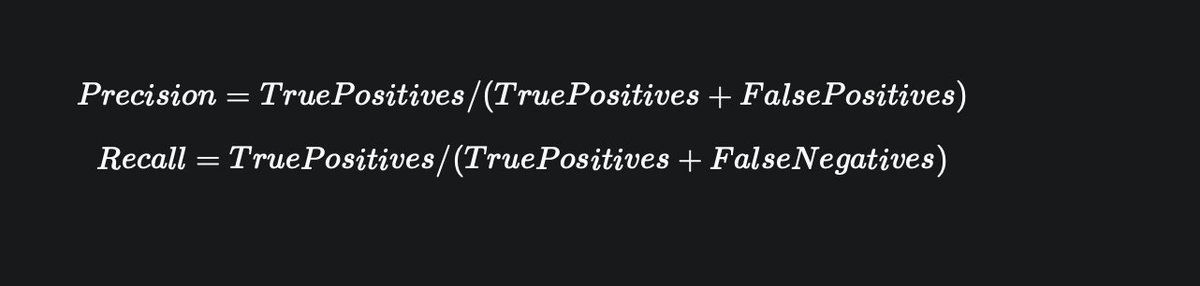

Precision: What is the percentage of positive predictions that are actually positive?

Recall: What is the percentage of actual positives that were predicted correctly?

Precision: What is the percentage of positive predictions that are actually positive?

Recall: What is the percentage of actual positives that were predicted correctly?

The fewer false positives, the higher the precision. Vice-versa.

The fewer false negatives, the higher the recall. Vice-versa.

The fewer false negatives, the higher the recall. Vice-versa.

How do you increase precision? Reduce false positives.

It can depend on the problem, but generally, that might mean fixing the labels of those negative samples(being predicted as positives) or adding more of them in the training data.

It can depend on the problem, but generally, that might mean fixing the labels of those negative samples(being predicted as positives) or adding more of them in the training data.

How do you increase recall? Reduce false negatives.

Fix the labels of positives samples that are being classified as negatives when they are not, or add more samples to the training data.

Fix the labels of positives samples that are being classified as negatives when they are not, or add more samples to the training data.

What happens when I increase precision? I will hurt recall.

There is a tradeoff between them. Increasing one can reduce the other.

There is a tradeoff between them. Increasing one can reduce the other.

What does it mean when the precision of your classifier is 1?

False positives are 0.

Your classifier is smart about not classifying negative samples as positives.

False positives are 0.

Your classifier is smart about not classifying negative samples as positives.

What's about recall being 1?

False negatives are 0.

Your classifier is smart about not classifying positive samples as negatives.

What if the precision and recall are both 1? You have a perfect classifier. This is ideal!

False negatives are 0.

Your classifier is smart about not classifying positive samples as negatives.

What if the precision and recall are both 1? You have a perfect classifier. This is ideal!

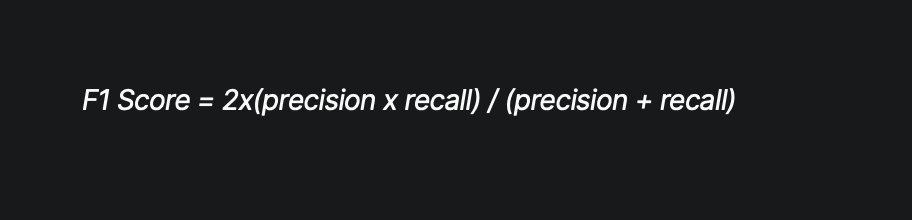

What is the better way to know the performance of the classifier without playing a battle of balancing precision and recall?

Combine them. Find their harmonic mean. If either precision or recall is low, the resulting mean will be low too.

Such harmonic mean is called the F1 Score and it is a reliable metric to use when we are dealing with imbalanced datasets.

Such harmonic mean is called the F1 Score and it is a reliable metric to use when we are dealing with imbalanced datasets.

If your dataset is balanced(positive samples are equal to negative samples in the training set), ordinary accuracy is enough.

Thanks for reading.

If you found the thread helpful, share it with your friends on Twitter. It is certainly the best way to support me.

Follow @Jeande_d for more machine learning content.

If you found the thread helpful, share it with your friends on Twitter. It is certainly the best way to support me.

Follow @Jeande_d for more machine learning content.

• • •

Missing some Tweet in this thread? You can try to

force a refresh