Delighted to announce two papers we will present at #NeurIPS2021: on XLVIN (spotlight!), and on transferable algorithmic reasoning.

Both summarised in the wonderful linked thread from @andreeadeac22!

I'd like to add a few sentiments on XLVIN specifically... thread time! 🧵1/7

Both summarised in the wonderful linked thread from @andreeadeac22!

I'd like to add a few sentiments on XLVIN specifically... thread time! 🧵1/7

https://twitter.com/andreeadeac22/status/1456636063271821314

You might have seen XLVIN before -- we'd advertised it a few times, and it also featured at great length in my recent talks.

The catch? The original version of XLVIN has been doubly-rejected, from both ICLR (in spite of all-positive scores) and ICML. 2/7

The catch? The original version of XLVIN has been doubly-rejected, from both ICLR (in spite of all-positive scores) and ICML. 2/7

https://twitter.com/PetarV_93/status/1321114783249272832

However, this is one of the cases in which the review system worked as intended! Even AC-level rejections can be a blessing in disguise.

Each review cycle allowed us to deepen our qualitative insight into why exactly does XLVIN work as intended... 3/7

Each review cycle allowed us to deepen our qualitative insight into why exactly does XLVIN work as intended... 3/7

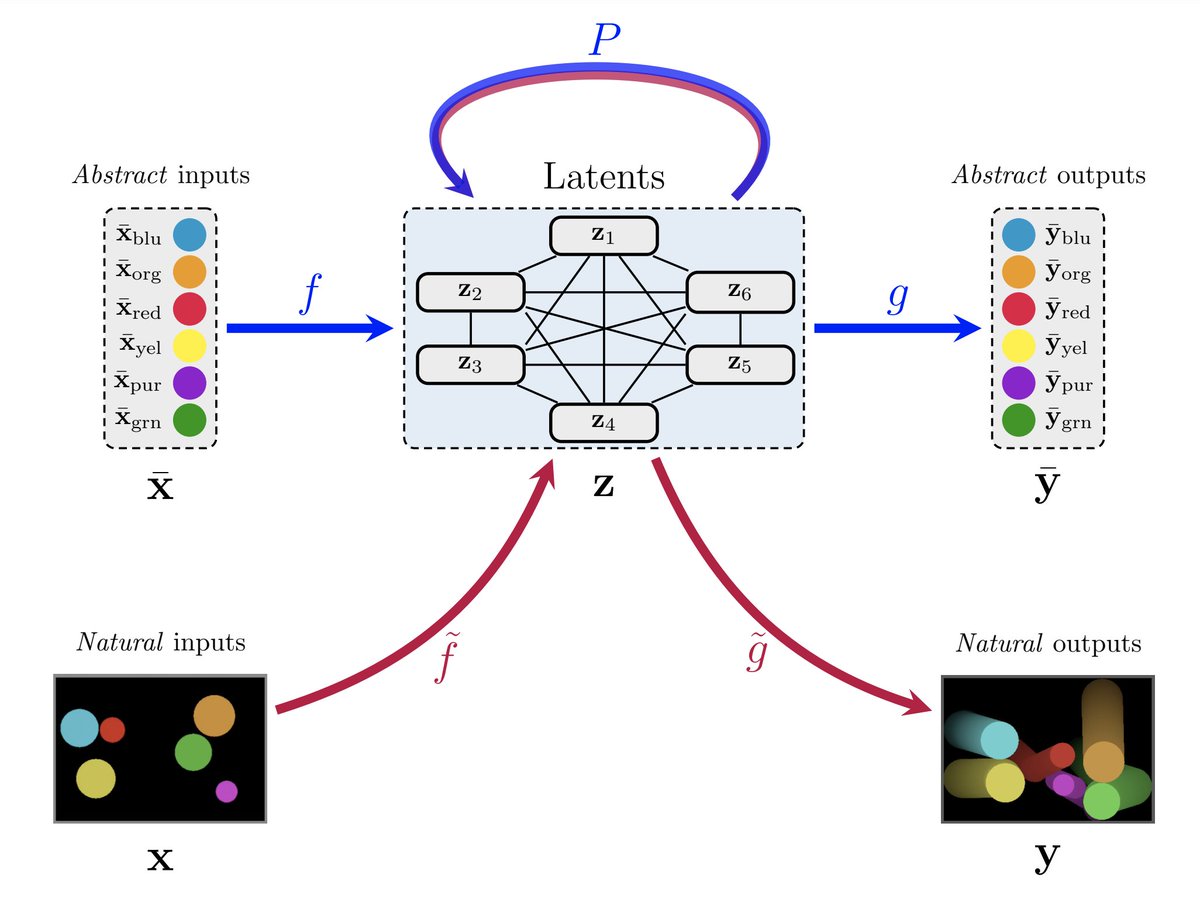

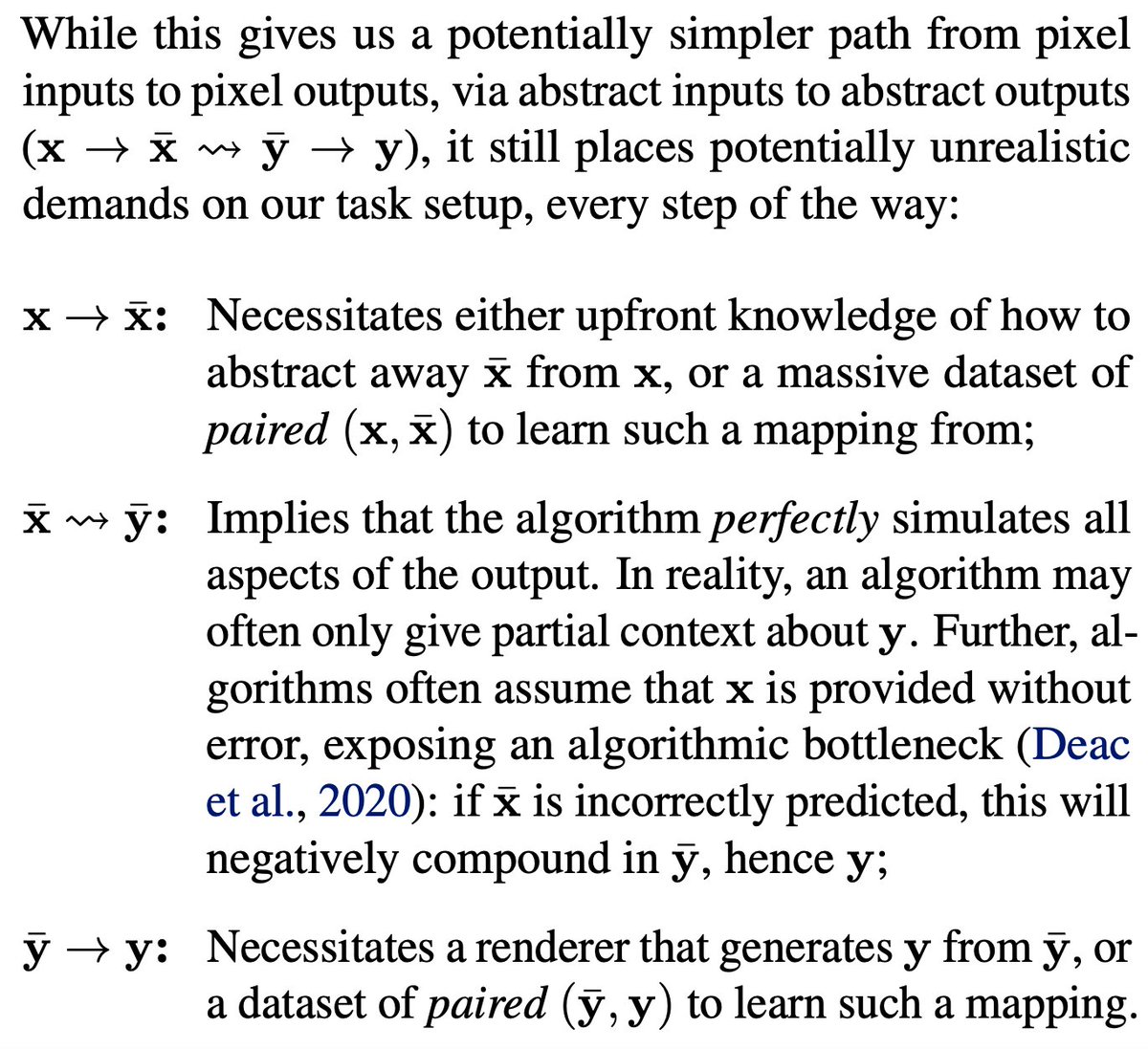

Specifically, we studied:

- What is the benefit of learning a high-dimensional algorithm such as the Bellman backup vs. running it explicitly (as in papers like TreeQN)?

- Has a general CNN encoder over noisy data actually learnt to use this algorithm? 4/7

- What is the benefit of learning a high-dimensional algorithm such as the Bellman backup vs. running it explicitly (as in papers like TreeQN)?

- Has a general CNN encoder over noisy data actually learnt to use this algorithm? 4/7

In studying this, we made the first rigorous observation of what we termed the "algorithmic bottleneck".

Ultimately, we realised that breaking the bottleneck is our main contribution, _not_ generalising Value Iteration Nets to general environments. 5/7

Ultimately, we realised that breaking the bottleneck is our main contribution, _not_ generalising Value Iteration Nets to general environments. 5/7

Accordingly, we changed the title and heavily rewrote the paper :)

Goodbye "XLVIN", hello "Neural Algorithmic Reasoners are Implicit Planners"!

The result? Spotlight talk (top 3% of all accepted papers)! 6/7

Goodbye "XLVIN", hello "Neural Algorithmic Reasoners are Implicit Planners"!

The result? Spotlight talk (top 3% of all accepted papers)! 6/7

In recognition of this, we actually wrote an explicit acknowledgement to all of our reviewers (even for the venues that rejected us!) -- all of you have directly contributed to making XLVIN's contributions what they are now, and I'm very excited to see where we can take this. 7/7

• • •

Missing some Tweet in this thread? You can try to

force a refresh