A highlight from the fascinating #EMNLP2021 keynote by @StevenBird:

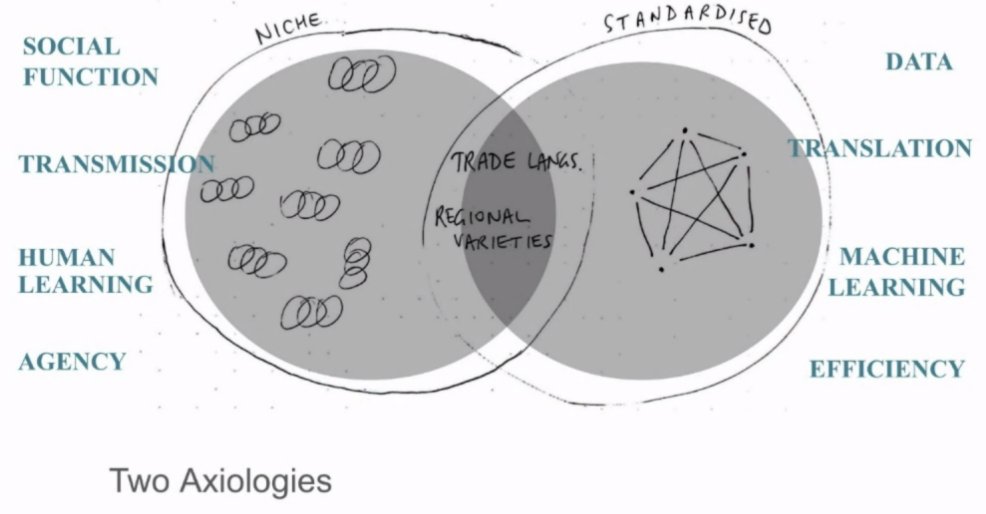

NLP often comes with a set of assumptions about the needs of communities with low-resource languages. But we need to learn what they *actually* need: they may have a completely different epistemology.

/1

AR: this is such a thought-provoking talk, pointing at the missing bridges between language tech and social sciences, esp. anthropology. As a computational linguist lucky to spend a year at @CPH_SODAS, I still don't think I even see the depth of everything we're missing.

/2

An audience question (@bonadossou from @MasakhaneNLP?): how do we increase the volume of NLP research on low-resource languages when such work is not as incentivized?

@StevenBird: keep submitting. I've had many rejections. Theme track for ACL2022 will be language diversity.

/3

On that topic: I'm deeply grateful to @WiNLPWorkshop for inviting me to the panel on the role of peer review in diversifying #NLProc. The panel will be on Thursday (Nov 11th), 1pm Punta Cana.

/4
