For the next few days, our timelines are gonna be full of cutesy images made by a new Google AI called #Imagen.

What you won't see are any pictures of Imagen's ugly side. Images that would reveal its astonishing toxicity. And yet these are the real images we need to see. 🧵

How do we know about these images? Because the team behind Imagen has acknowledged this dark side in a technical report, which you can read for yourself here. Their findings and admissions are troubling, to say the least.

gweb-research-imagen.appspot.com/paper.pdf

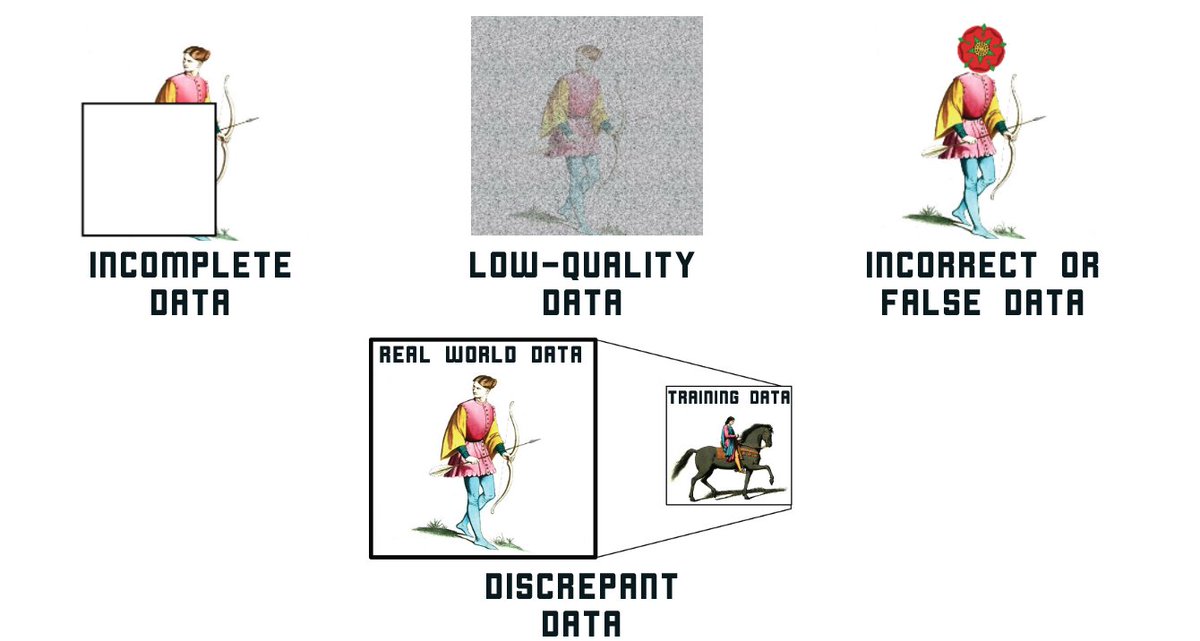

First, the researchers did not conduct a systematic study of the system's potential for harm. But even in their limited evaluations they found that it "encodes several social biases and stereotypes."

They don't list all these observed biases and stereotypes, but they admit that they include "an overall bias towards generating images of people with lighter skin tones and a tendency for images portraying different professions to align with Western gender stereotypes." 💔

The team didn't publish any examples of such images. But the pictures made by a similar system called DALL-E 2 a few weeks ago can give us an idea of what they probably look like. (Warning: they're upsetting)

https://mobile.twitter.com/WriteArthur/status/1512429306349248512

That's not all. Even when Imagen isn't depicting people, it has a tendency to "encode[] a range of social and cultural biases when generating images of activities, events, and objects." Again, they don't give examples, but you can imagine that these depictions are dismaying.

Still, some of the other images in the paper show these biases rearing their ugly heads.

For example, when prompted to make "detailed oil painting[s]" of a "royal raccoon king" and "royal raccoon queen," the system went for decidedly European symbols of royalty.

We can also safely assume that Imagen has a high capacity to generate overtly toxic content. Why? Because one of the datasets the team used to train it contains "a wide range of inappropriate content including pornographic imagery, racist slurs, and harmful social stereotypes."

Let's pause on that. They *knowingly* trained Imagen on porn, violence, racist content, and other toxic material. It wasn't an accident.

They also knowingly trained the system on "uncurated web-scale data." AKA large volumes of stuff from the internet that *they admit* "risks reproducing" things like "oppressive viewpoints, and derogatory, or otherwise harmful, associations to marginalized identity groups."

To be sure, because of all this, the team decided not to release Imagen to the public. It's also why they won't prompt it to make images of people. But the fact remains that they have knowingly created a machine with serious dangers for which they do not have any clear fixes.

This transparency and honesty is commendable. But as the authors admit, mitigating these risks is going to require serious new research. Research that they knowingly chose not to do before revealing the system.

And while they do say that they intend to address these issues in future work, it's going to take as many minds coming together as possible to fix them. A whole community of effort...

But if most folks within that community are only focused on the cute stuff—or on philosophical debates over whether this system is truly "intelligent"—it's going to be hard to generate a groundswell of effort to do anything about the nasty stuff.

Bottom line: if we want these types of AI to benefit all of humanity, we should probably look beyond all the pictures of wizard corgi dogs or cows in tuxedos. Because all that cute stuff will mean nothing if the system ends up hurting people.

Ok that’s it. That's the thread.
