Just 3 days ago, I had the pleasure of watching the #rstudioconf2022 kick off.

I've been attending since 2018 and watching even longer than that.

And, I was just a normal spectator in the audience until this happened.

I've been attending since 2018 and watching even longer than that.

And, I was just a normal spectator in the audience until this happened.

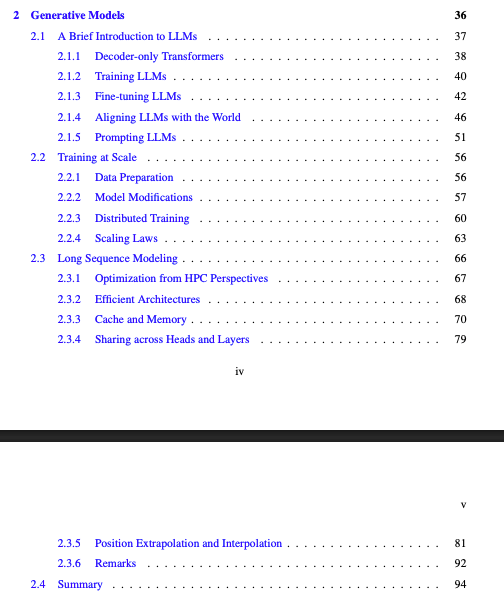

@topepos and @juliasilge's keynote showed all of the open source work their team has been working on to build the best machine learning ecosystem in R called #tidymodels.

And then they brought this slide up.

And then they brought this slide up.

Max and Julia then proceeded to talk about how the community members have been working on expanding the ecosystem.

- Text Recipes for Text

- Censored for Survival Modeling

- Stacks for Ensembles

And then they announced me and my work on Modeltime for Time Series!!!

- Text Recipes for Text

- Censored for Survival Modeling

- Stacks for Ensembles

And then they announced me and my work on Modeltime for Time Series!!!

I had no clue this was going to happen.

Just a spectator in the back.

My friends to both sides went nuts. Hugs, high-fives, and all.

My students in my slack channel went even more nuts.

Just a spectator in the back.

My friends to both sides went nuts. Hugs, high-fives, and all.

My students in my slack channel went even more nuts.

Throughout the rest of the week, I was on cloud-9.

My students that were at the conf introduced themselves.

Much of our discussions centered around Max & Julia's keynote and the exposure that modeltime got.

My students that were at the conf introduced themselves.

Much of our discussions centered around Max & Julia's keynote and the exposure that modeltime got.

And all of this wouldn't be possible without the support of this company. Rstudio / posit.

So, I'm honored to be part of something bigger than just a programming language.

And if you'd like to learn more about what I do, I'll share a few links.

So, I'm honored to be part of something bigger than just a programming language.

And if you'd like to learn more about what I do, I'll share a few links.

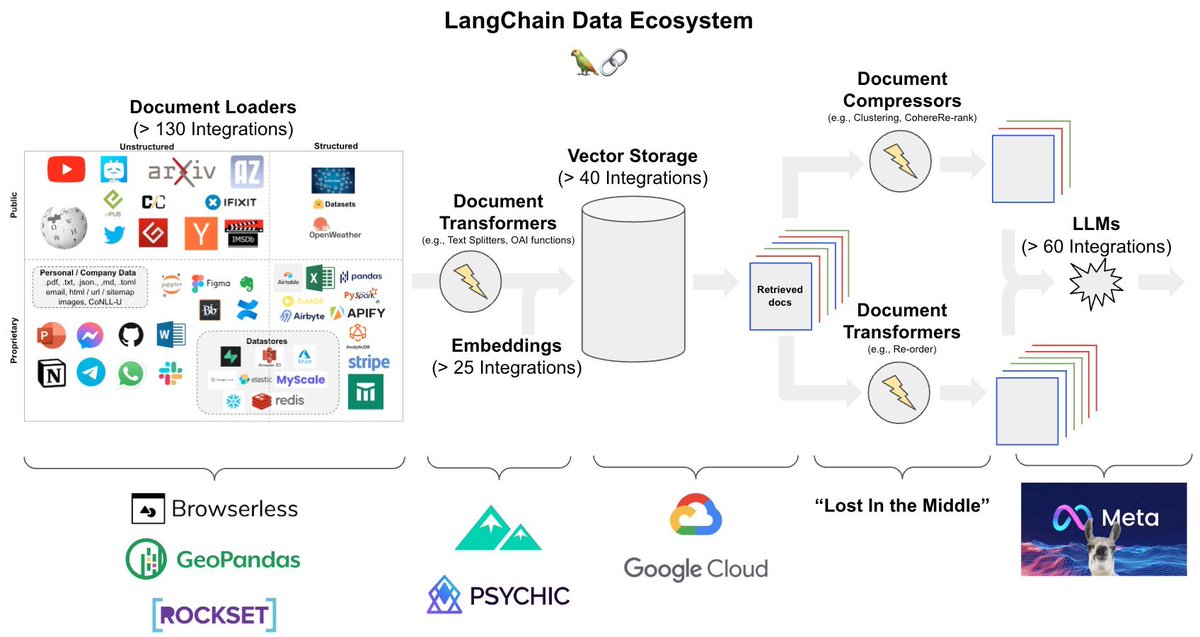

The first is my modeltime package for #timeseries.

This has been a 2-year+ passion project for building the premier time series forecasting system.

It now has multiple extensions including ensembles, resampling, deep learning, and more.

business-science.github.io/modeltime/

This has been a 2-year+ passion project for building the premier time series forecasting system.

It now has multiple extensions including ensembles, resampling, deep learning, and more.

business-science.github.io/modeltime/

The second is my company @bizScienc.

For the past 4-years I've dedicated myself to teaching students how to apply data science to business.

I have 3000+ students worldwide.

Here are some of my tribe that I met at #rstudioconf2022.

For the past 4-years I've dedicated myself to teaching students how to apply data science to business.

I have 3000+ students worldwide.

Here are some of my tribe that I met at #rstudioconf2022.

The third is my 40-minute webinar.

I put a free presentation together to help you on your journey to become a data scientist.

A few things I talk about:

Modeltime for Time Series.

Tidymodels & H2O for Machine Learning

Shiny for Web Apps

and 7 more!

learn.business-science.io/free-rtrack-ma…

I put a free presentation together to help you on your journey to become a data scientist.

A few things I talk about:

Modeltime for Time Series.

Tidymodels & H2O for Machine Learning

Shiny for Web Apps

and 7 more!

learn.business-science.io/free-rtrack-ma…

• • •

Missing some Tweet in this thread? You can try to

force a refresh