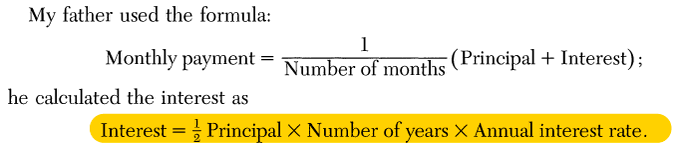

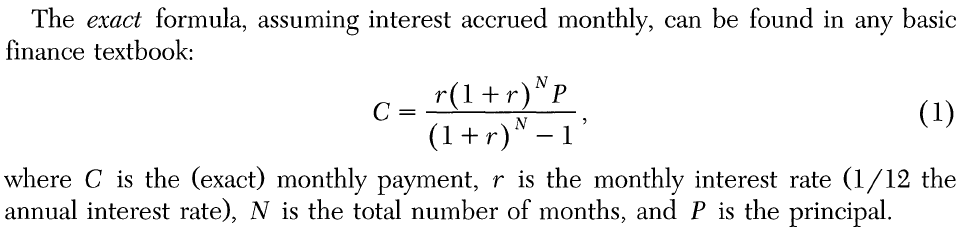

Yesterday at #MadeByGoogle, we also announced the latest on the Super Res Zoom feature on #Pixel7Pro.

It's a project my team has been involved in since '18. This year, our teams have made it so much more powerful. You can zoom up to 30x. Let me show you in a 🧵

https://twitter.com/madebygoogle/status/1578032658973540361

And finally we're at 30x hybrid optical/digital zoom, seeing the top of One World Trade Center miles away.

I hope this gives you an idea of what the zoom experience looks like on #Pixel7Pro. You can see the whole sequence in an album:

photos.app.goo.gl/SoV1s7EU6c7APb…

PC: Alex Schiffhauer
