Differentiable programming (dP) is great: train neural networks to match anything w/ gradients! ODEs? Neural ODEs. Physics? Yes. Agent-Based models? Nope, not differentiable... or are they? Check out our new paper at NeurIPS on Stochastic dP!🧵

arxiv.org/abs/2210.08572

arxiv.org/abs/2210.08572

Problem: if you flip a coin with probability p of being heads, how do you generate a code that takes the derivative with respect to that p? Of course that's not well-defined: the coin gives a 0 or 1, so it cannot have "small changes". Is there a better definition?

Its mean (or in math words, "expectation") can be differentiable! So let's change the question: is there a form of automatic differentiation that generates a program which directly calculates the derivative with respect to the mean?

There were some prior approaches to this problem, but they had exponential scaling, bias (the prediction is "off" by a little bit), and high variance in the estimation. Can we make it so you can take any code and get fast and accurate derivatives, just like "standard AD"?

To understand how to get there, let's take a look at Forward-Mode AD. One way to phrase AD is the following: instead of running a program on a one-dimensional number x, can you instead run a program on a two-dimensional number d = (x,y) such that f(d) = (f(x),f'(x)y)?

This is the dual number formulation of forward-mode AD. More details here: book.sciml.ai/notes/08/. Key takeaway: AD is moving from "the standard algebra on real numbers" to a new number type. Recompile the code for the new numbers and the second value is the derivative!

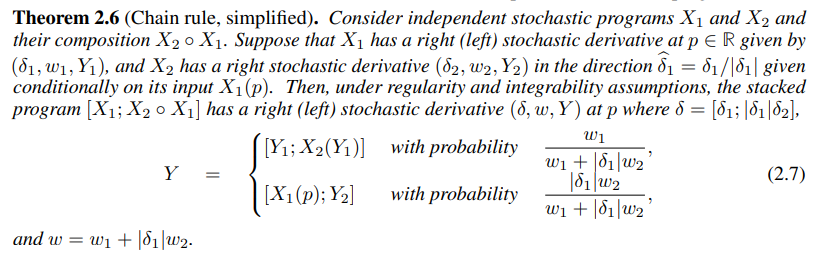

Now let's extend it to discrete stochastic! The core idea behind the paper is the following: if we treat a program as a random variable X(p), can we come up with a similar number definition such that we get two random variables, (X(p),Y(p)), such that E[Y(p)] = dE[X(p)]/dp?

Note that in this formulation, we do not "need" to relax to a continuous relationship. X(p) = {1 with probability p, 0 with probably 1-p}. Y(p) can be defined conditionally on X: if X = 1, then ?, if X = 0, then ? The (?) is then defined so that the property E[Y(p)] = dE[X(p)]/dp

With this, we can interpret "infinitesimal" differently. Instead of "small changes", if you have a discrete stochastic variable, it can be "small probability of a discrete change". Infinitesimal chance of changing +-1, 2, 3, ...

With this you can extend dual numbers to stochastic triples, which have a "continuous derivative" (like dual numbers) and a "discrete derivative", a probability of O(1) changes. This is what it "looks like" in our new AD package github.com/gaurav-arya/St…

You can interpret this as taking a stochastic program and making it run the program with two correlated paths. You run the program and suddenly X% of the way through, "something different" could have happened. Take the difference = derivative. But correlation = low variance!

By delaying "derivative smoothing" this also avoids the bias issues of "traditional" techniques like the score function method. Want derivatives of agent-based models like stochastic game of life? Just slap these numbers in and it will recompile to give fast accurate derivatives!

Now you can start differentiating crazy codes. Choose random number and choose which agent is infected? Differentiate particle filters? All of these are now differentiable!

Of course there are a lot more details. How exactly do you define the conditional random number such that you get the right distribution? Two paths of a program, what about 3 or 4? Etc. Check out the extensive appendix with all of the details.

arxiv.org/abs/2210.08572

arxiv.org/abs/2210.08572

That's enough math for now. If you want to start differentiating agent-based models today, take a look at our new #julialang package StochasticAD.jl with this method! github.com/gaurav-arya/St… Expand the #sciml universe!

Also, please like and follow if you want more content on differentiable programming and scientific machine learning! Neural networks in everything!

Also like and follow co-authors @NotGauravArya @MoritzSchauer @_Frank_Schaefer! Gaurav, an undergrad @MIT has really been the tour de force here. We had something going, but he really made it miles better. Really bright future ahead for him!

@MoritzSchauer put together a nice demo of the package in action!

https://twitter.com/MoritzSchauer/status/1582377917798965248

Sorry quick correction: the score-function method is unbiased, but it has a high variance as the plots show. Other methods in this area, like Gumbel-Softmax are biased, with a variance-bias trade-off to manage.

• • •

Missing some Tweet in this thread? You can try to

force a refresh