Interested in reconstructing computational dynamics from neural data using RNNs?

Here we review dynamical systems (DS) concepts, recent #ML/ #AI methods for recovering DS from data, evaluation, interpretation, analysis, & applic. in #Neuroscience:

biorxiv.org/content/10.110…

A 🧵...

Some important take-homes:

1) To formally constitute a true state space or a reconstructed DS, certain mathematical conditions must be met. PCA and other dim. reduction tools often won’t give you a state space in the DS sense (and may even destroy it). Just training an RNN on data may not either.

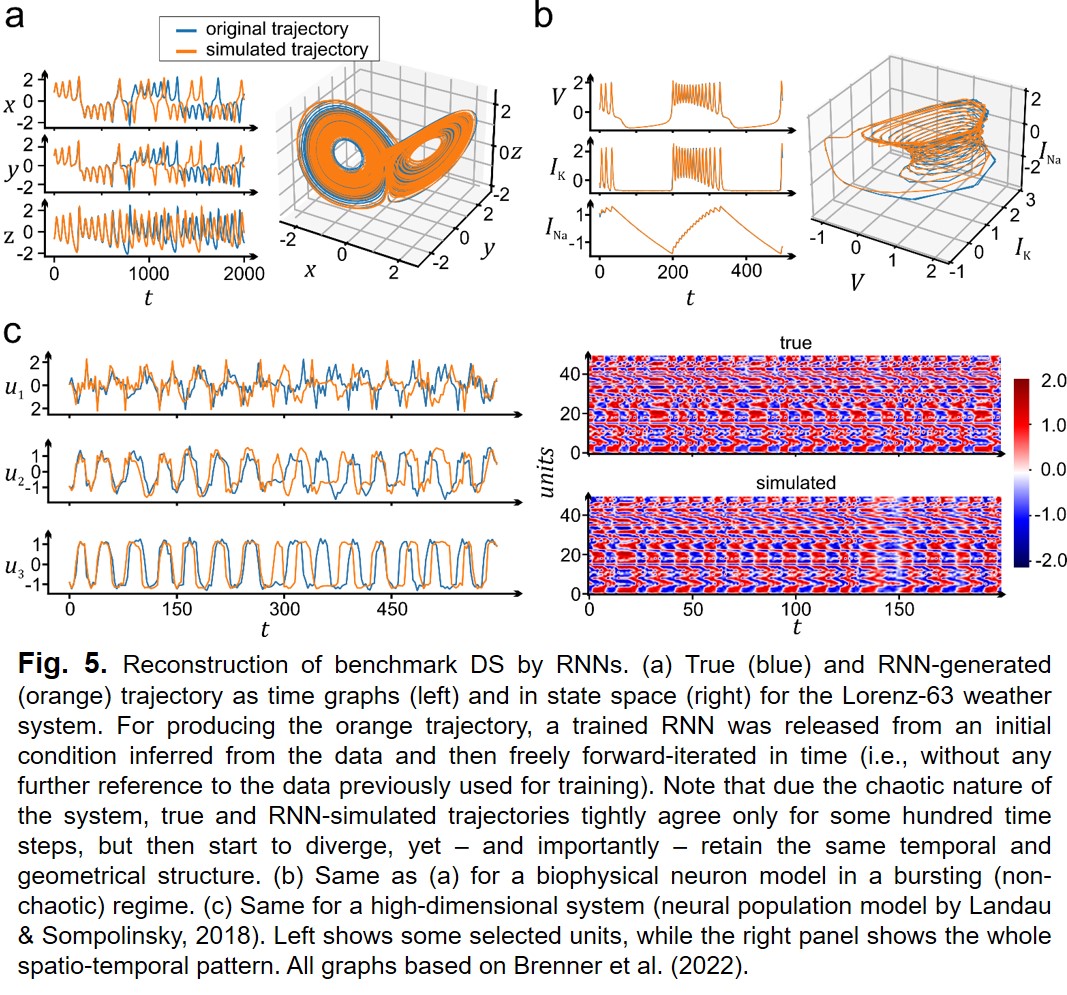
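
One classic route to a state space in the DS sense is delay-coordinate embedding (Takens' theorem and its extensions): stack time-lagged copies of an observed signal. Roughly, the embedding dimension must exceed twice the attractor's (box-counting) dimension and the observation function must be generic; PCA, by contrast, is just a linear projection and carries no such guarantee. A minimal NumPy sketch, where `delay_embed`, `dim` and `tau` are illustrative names and values, not taken from the review:

```python
import numpy as np

def delay_embed(x, dim, tau):
    """Delay-coordinate vectors [x(t), x(t - tau), ..., x(t - (dim - 1) * tau)]
    built from a scalar time series x; returns shape (len(x) - (dim - 1) * tau, dim)."""
    x = np.asarray(x, dtype=float)
    n = len(x) - (dim - 1) * tau
    if n <= 0:
        raise ValueError("time series too short for this dim and tau")
    # Column k holds the series delayed by k * tau samples, aligned to the same time t
    return np.column_stack([x[(dim - 1 - k) * tau:(dim - 1 - k) * tau + n] for k in range(dim)])

# Hypothetical example: a noise-free sine observed at unit time steps
x = np.sin(0.1 * np.arange(1000))
E = delay_embed(x, dim=2, tau=16)   # tau of roughly a quarter period
print(E.shape)  # (984, 2)
```

For the sine, the 2-D delay vectors trace out a closed curve, i.e. the limit cycle; choosing `dim` and `tau` for real neural data is much harder and is where the formal conditions bite.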

2) For DS reconstruction (DSR), a trained RNN should be able to reproduce *invariant geometrical and temporal properties* of the underlying system when run freely on its own, i.e. generating trajectories without being fed any data.
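
"Run on its own" means iterating the model's own map from an initial state, with no observations fed back in (unlike one-step-ahead prediction from data). A minimal sketch, assuming a piecewise-linear RNN (PLRNN) of the form z_t = A z_{t-1} + W relu(z_{t-1}) + h; this architecture and the function names are our illustrative choices (in the usual PLRNN formulation A is diagonal), and the review covers many other model classes:

```python
import numpy as np

def plrnn_step(z, A, W, h):
    """One step of a piecewise-linear RNN: z_t = A z_{t-1} + W relu(z_{t-1}) + h."""
    return A @ z + W @ np.maximum(z, 0.0) + h

def free_run(z0, A, W, h, T):
    """Iterate the RNN autonomously for T steps from z0, with no data fed in.
    Returns an array of shape (T + 1, M) that includes z0."""
    traj = [np.asarray(z0, dtype=float)]
    for _ in range(T):
        traj.append(plrnn_step(traj[-1], A, W, h))
    return np.stack(traj)

# Hypothetical toy parameters: a purely contracting map
A = 0.5 * np.eye(2)
W = np.zeros((2, 2))
h = np.zeros(2)
traj = free_run([1.0, -2.0], A, W, h, T=3)
print(traj[-1])  # [ 0.125 -0.25 ]
```

Invariant properties (attractor geometry, power spectra, etc.) are then computed on such freely generated trajectories and compared with the data.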

3) Correlation (R^2) with the data or the MSE may be poor measures of reconstruction quality, especially if the system is chaotic: there, nearby trajectories diverge exponentially fast even for the *very same system with the same parameters*. Geometrical & invariant measures are needed instead.

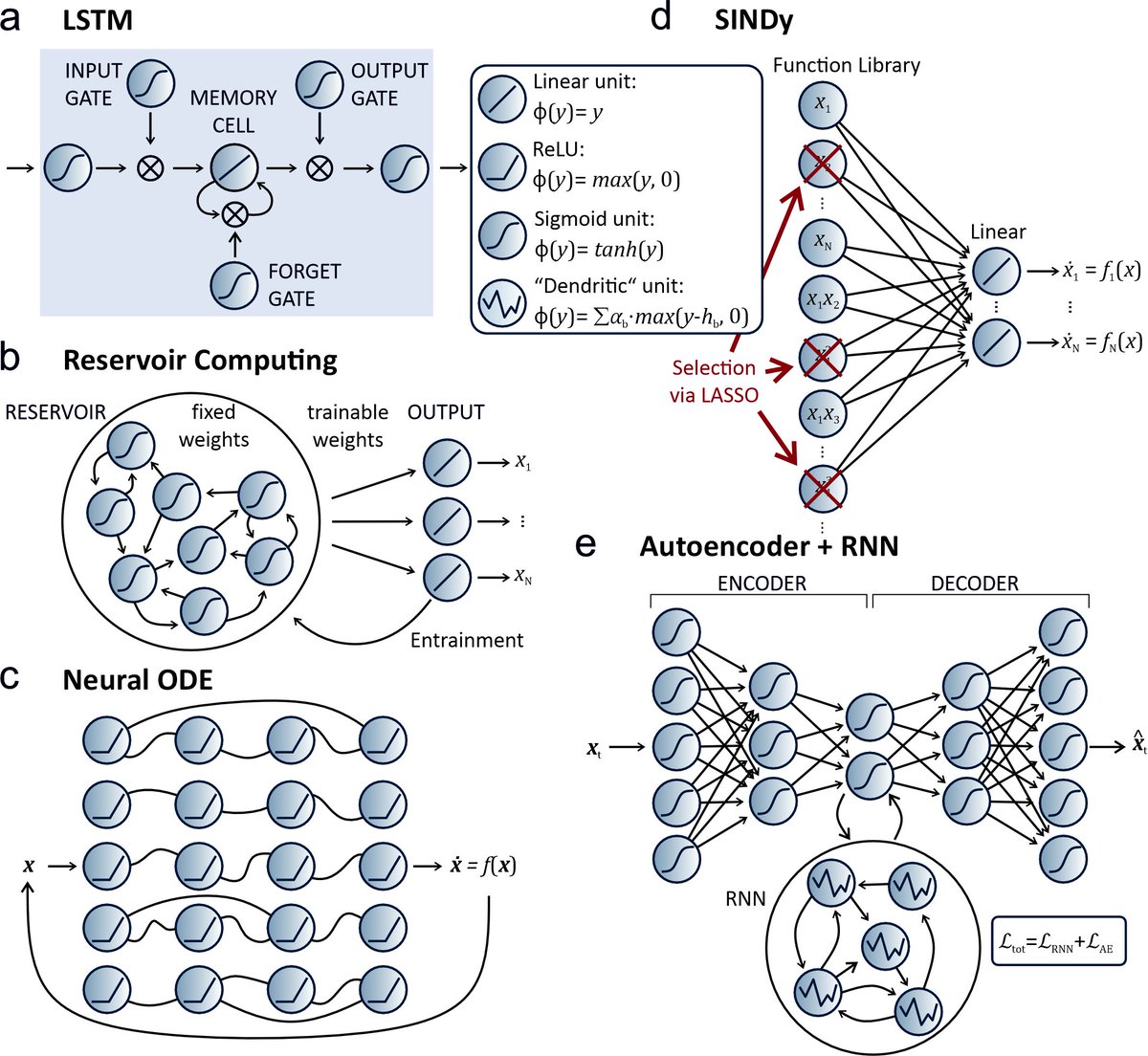
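
The point is easy to reproduce with a textbook chaotic system. The sketch below uses Lorenz-63 as a stand-in (our assumption, not a neural system): two runs of the *same* equations from initial states 1e-8 apart end up with a large MSE, while a geometric measure, here a KL divergence between binned state-space occupancies, stays small. The binning, `eps` and all names are hypothetical choices for illustration, not the review's exact measure:

```python
import numpy as np

def lorenz_rhs(s, rho=28.0, sigma=10.0, beta=8.0 / 3.0):
    """Vector field of the Lorenz-63 system."""
    x, y, z = s
    return np.array([sigma * (y - x), x * (rho - z) - y, x * y - beta * z])

def simulate(s0, rho=28.0, dt=0.01, n=20000):
    """Integrate Lorenz-63 with classic 4th-order Runge-Kutta; returns (n, 3) states."""
    traj = np.empty((n, 3))
    s = np.asarray(s0, dtype=float)
    for i in range(n):
        traj[i] = s
        k1 = lorenz_rhs(s, rho)
        k2 = lorenz_rhs(s + 0.5 * dt * k1, rho)
        k3 = lorenz_rhs(s + 0.5 * dt * k2, rho)
        k4 = lorenz_rhs(s + dt * k3, rho)
        s = s + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    return traj

def occupancy_kl(a, b, bins=10, eps=1e-10):
    """KL divergence D(p_a || p_b) between binned state-space occupancies of a and b
    on a shared grid; eps keeps empty bins from producing log(0)."""
    lo = np.minimum(a.min(axis=0), b.min(axis=0))
    hi = np.maximum(a.max(axis=0), b.max(axis=0))
    edges = [np.linspace(lo[d], hi[d], bins + 1) for d in range(a.shape[1])]
    p = np.histogramdd(a, bins=edges)[0].ravel() + eps
    q = np.histogramdd(b, bins=edges)[0].ravel() + eps
    p, q = p / p.sum(), q / q.sum()
    return float(np.sum(p * np.log(p / q)))

s0 = simulate([1.0, 1.0, 1.0], n=1000)[-1]        # a point (approximately) on the attractor
a = simulate(s0)
b = simulate(s0 + np.array([1e-8, 0.0, 0.0]))     # same system, same parameters, tiny perturbation
mse_early = np.mean((a[:100] - b[:100]) ** 2)     # first time unit: still nearly identical
mse_late = np.mean((a[-5000:] - b[-5000:]) ** 2)  # last 50 time units: fully decorrelated
kl = occupancy_kl(a, b)
print(f"MSE early {mse_early:.1e}, MSE late {mse_late:.1f}, occupancy KL {kl:.3f}")
```

A model that matched the data point-by-point for a while and then drifted would be punished by the MSE even if it had captured the attractor perfectly; the occupancy-based measure asks instead whether the trajectories live on the same geometry.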

4) DSR is a burgeoning field in #ML/ #AI & #DataScience: Everything from basis expansions/library methods, Graph NNs, Transformers, Reservoir Comp., and Neural ODEs to numerous types of RNNs has been suggested. Many of these use training algorithms purpose-built for DSR.
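
Of the families listed, library methods fit in a few lines. A minimal SINDy-style sketch (sequentially thresholded least squares over a polynomial library), assuming exact derivatives are available; with real, noisy neural data, estimating derivatives is itself a major difficulty. The library, threshold and toy system are illustrative choices, not the review's:

```python
import numpy as np

def poly_library(X):
    """Candidate functions [1, x, y, x^2, x*y, y^2] for 2-D states X of shape (N, 2)."""
    x, y = X[:, 0], X[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x**2, x * y, y**2])

def stlsq(Theta, dX, threshold=0.05, n_iter=10):
    """Sequentially thresholded least squares: fit dX ≈ Theta @ Xi, then repeatedly
    zero out small coefficients and refit each column on the surviving terms."""
    Xi = np.linalg.lstsq(Theta, dX, rcond=None)[0]
    for _ in range(n_iter):
        small = np.abs(Xi) < threshold
        Xi[small] = 0.0
        for k in range(dX.shape[1]):
            keep = ~small[:, k]
            if keep.any():
                Xi[keep, k] = np.linalg.lstsq(Theta[:, keep], dX[:, k], rcond=None)[0]
    return Xi

rng = np.random.default_rng(0)
X = rng.uniform(-2, 2, size=(200, 2))
# Hypothetical ground truth: damped linear oscillator dx/dt = -0.1x + 2y, dy/dt = -2x - 0.1y
dX = np.column_stack([-0.1 * X[:, 0] + 2 * X[:, 1], -2 * X[:, 0] - 0.1 * X[:, 1]])
Xi = stlsq(poly_library(X), dX)
print(np.round(Xi, 3))  # rows: 1, x, y, x^2, xy, y^2; columns: dx/dt, dy/dt
```

The sparse coefficient matrix is the reconstructed vector field in closed form, which is what makes library methods attractive for interpretation, and also why they struggle when the true dynamics are not well covered by the chosen library.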

5) RNNs are dynamically & comput. universal: they can approx. any DS and can implement any Turing machine. They can emulate biophysical neurons in detail, despite having a very different functional form → You don’t need a biophysical model if you’d like to simulate biophysics.

6) RNNs can learn to mimic physiological data and to behave just like the underlying DS, incl. single-unit rec. on single trials … If DSR was successful, we can analyze and simulate the RNN further as a surrogate for the system it has been trained on.
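
As one example of analyzing the surrogate, its fixed points and their stability can be computed directly. Assuming a PLRNN map z -> A z + W relu(z) + h (our illustrative choice of architecture), the map is linear within each region of fixed ReLU on/off pattern, so each region's candidate fixed point solves a linear system. Brute-force enumeration over all 2^M regions, as in this sketch with a hypothetical function name, is only feasible for a handful of units:

```python
import itertools
import numpy as np

def plrnn_fixed_points(A, W, h):
    """Enumerate all 2^M ReLU on/off patterns of the PLRNN z -> A z + W relu(z) + h
    and return (z*, is_stable) for every self-consistent fixed point."""
    M = len(h)
    I = np.eye(M)
    found = []
    for pattern in itertools.product([0.0, 1.0], repeat=M):
        D = np.diag(pattern)
        J = A + W @ D  # Jacobian of the map inside this linear subregion
        try:
            z = np.linalg.solve(I - J, h)
        except np.linalg.LinAlgError:
            continue  # singular: no isolated fixed point in this region
        # Self-consistency: the solution must lie in the region whose pattern we assumed
        if np.array_equal((z > 0).astype(float), np.array(pattern)):
            is_stable = bool(np.all(np.abs(np.linalg.eigvals(J)) < 1.0))
            found.append((z, is_stable))
    return found

# Hypothetical 1-unit example: a single stable fixed point at z* = 1 / 0.3 = 10/3
for z_star, is_stable in plrnn_fixed_points(np.array([[0.5]]), np.array([[0.2]]), np.array([1.0])):
    print(z_star, is_stable)
```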

7) By coupling the RNN to the data via specifically designed observation models, we can interpret operations in the RNN in biological terms and connect them to the biological substrate.
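
The thread doesn't say which observation models the review uses; one common choice for spike counts is a Poisson likelihood with a log-linear link, x_t ~ Poisson(exp(B z_t + b)). A minimal sketch under that assumption (B, b and the function name are hypothetical). Since each row of B ties the latent RNN state to one recorded unit, those weights are one handle for relating RNN operations to the measured neurons:

```python
import math
import numpy as np

def poisson_loglik(counts, Z, B, b):
    """Log-likelihood of spike counts (T, N) under x_t ~ Poisson(exp(B z_t + b)),
    given latent RNN states Z of shape (T, M), loadings B (N, M) and offsets b (N,)."""
    log_rate = Z @ B.T + b                            # (T, N) log firing rates
    log_fact = np.vectorize(math.lgamma)(counts + 1.0)  # log(x!) term of the Poisson pmf
    return float(np.sum(counts * log_rate - np.exp(log_rate) - log_fact))

rng = np.random.default_rng(1)
Z = rng.normal(size=(50, 3))               # hypothetical latent RNN states
B = 0.5 * rng.normal(size=(4, 3))          # hypothetical loadings for 4 recorded units
b = np.full(4, -1.0)
counts = rng.poisson(np.exp(Z @ B.T + b))  # simulated spike counts
print(poisson_loglik(counts, Z, B, b))
```

In training, this term would be maximized jointly with the RNN parameters, so the latent dynamics are shaped by the spiking data through the chosen observation model.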

8) RNNs are great for multi-modal data integration: You can couple many observed data streams for DSR to the same latent RNN through sets of data-specific decoder models.
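
The "one latent RNN, many decoders" idea can be sketched as a joint log-likelihood: each decoder scores its own data stream given the same latent trajectory Z, and, assuming the streams are conditionally independent given Z, the terms simply add. The Gaussian (e.g. imaging signals) and categorical (e.g. behavioral choices) decoders, and all names, are illustrative assumptions:

```python
import numpy as np

def gaussian_loglik(Y, Z, C, d, sigma):
    """Continuous modality: y_t ~ N(C z_t + d, sigma^2 I)."""
    resid = Y - (Z @ C.T + d)
    n = resid.size
    return float(-0.5 * np.sum(resid**2) / sigma**2 - n * np.log(sigma) - 0.5 * n * np.log(2 * np.pi))

def categorical_loglik(labels, Z, E, e):
    """Discrete modality: p(c_t = k) = softmax(E z_t + e)_k."""
    logits = Z @ E.T + e
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(np.sum(log_probs[np.arange(len(labels)), labels]))

def joint_loglik(Z, Y, labels, params):
    """Both decoders read out the SAME latent trajectory Z; their log-likelihoods add."""
    return (gaussian_loglik(Y, Z, params["C"], params["d"], params["sigma"])
            + categorical_loglik(labels, Z, params["E"], params["e"]))

# Hypothetical toy setup: 1 latent unit, 1 continuous channel, 3 possible choices
params = {"C": np.array([[1.0]]), "d": np.array([0.0]), "sigma": 1.0,
          "E": np.zeros((3, 1)), "e": np.zeros(3)}
Z = np.zeros((2, 1))
print(joint_loglik(Z, np.zeros((2, 1)), np.array([0, 2]), params))
```

Adding a modality then just means adding a decoder and its log-likelihood term, while the latent RNN and its dynamics stay shared.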

9) There are many types of dynamics and attractors, some with complex geom. structure like separatrix cycles or chaos. As we get closer to true DSR, and as neurosci. moves to more ecol. tasks, the picture of neural dynamics may become more complex than the simple point attractors that still dominate.
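
One standard way to tell such regimes apart in a trained model is the largest Lyapunov exponent of its map: a positive value signals chaos, a clearly negative one convergence onto a stable fixed point or stable cycle of the map. A minimal sketch, assuming a PLRNN map z -> A z + W relu(z) + h (our illustrative choice; function name and defaults are hypothetical), propagating a tangent vector through the piecewise-constant Jacobian:

```python
import numpy as np

def max_lyapunov(A, W, h, z0, T=10000, burn_in=1000):
    """Largest Lyapunov exponent of the PLRNN map z -> A z + W relu(z) + h, estimated by
    propagating a tangent vector with the Jacobian A + W diag(z > 0), renormalizing each step."""
    z = np.asarray(z0, dtype=float)
    for _ in range(burn_in):  # let the trajectory settle onto its attractor first
        z = A @ z + W @ np.maximum(z, 0.0) + h
    v = np.ones_like(z) / np.sqrt(len(z))
    log_growth = 0.0
    for _ in range(T):
        J = A + W * (z > 0)  # scales column j of W by the ReLU slope (0 or 1) of unit j
        z = A @ z + W @ np.maximum(z, 0.0) + h
        v = J @ v
        norm = np.linalg.norm(v)
        log_growth += np.log(norm)
        v /= norm
    return log_growth / T

# Contracting linear map: all trajectories converge to the fixed point at 0
print(max_lyapunov(0.5 * np.eye(2), np.zeros((2, 2)), np.zeros(2), [1.0, 1.0]))  # log(0.5) ≈ -0.693
# 1-D tent map in PLRNN form (slope +2 for z <= 0, -2 for z > 0), chaotic on [-1, 1]
print(max_lyapunov(np.array([[2.0]]), np.array([[-4.0]]), np.array([1.0]), [0.3]))  # log(2) ≈ 0.693
```

The exponent alone does not resolve finer geometric structure such as separatrix cycles; it is one diagnostic among several.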

10) There are many open issues in DSR, perhaps most importantly, generalization beyond the dynamical regimes seen in training.

We would love to hear your thoughts on this, any suggestions on where to send it, or pointers to important omissions from the lit. so we can integrate them. We apologize for likely having missed a lot, esp. of the #ComputationalNeuroscience lit.!
