Among all the cool things #ChatGPT can do, it is super capable of handling and manipulating data in bulk, making numerous data wrangling, scraping, and lookup tasks obsolete.

Let me show you a few cool tricks. No coding skills required!

(A thread) 👇🧵

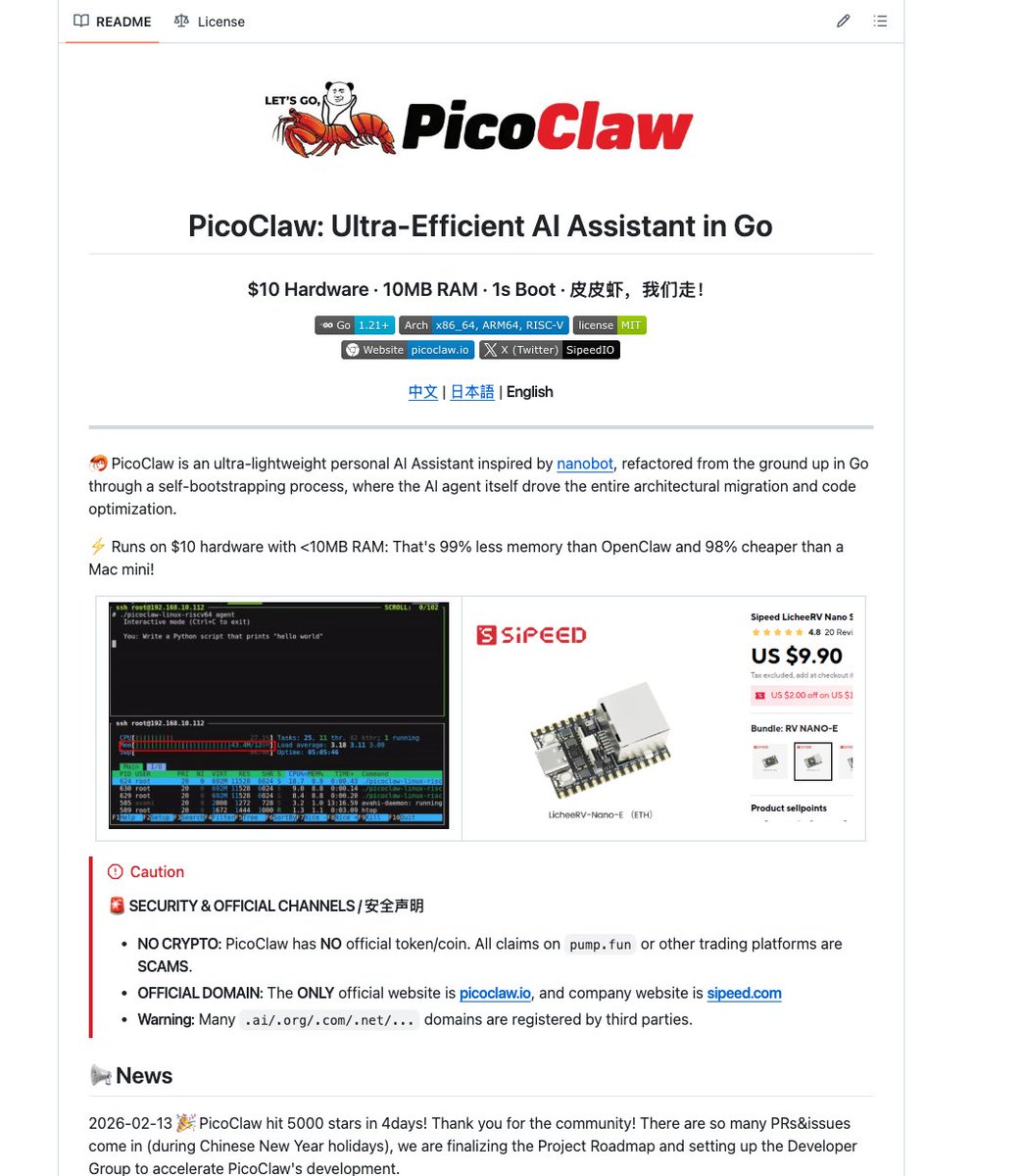

Let's start easy by heading to chat.openai.com/chat and pasting a list of 60 countries in the text field

Let's ask #ChatGPT to give us the main language, latitude, longitude, and country code for each of these countries

That was easy enough, right?

Now let's add more data to our output by asking #ChatGPT to provide the population of each of these countries

Boom! 💥

No #Python, PowerBI or code was needed!

Now let's try something a bit harder by asking #ChatGPT to add crime rates and COVID death tolls for the year 2020

No sweat!

... and it's not even limited to tabular data!

Let's ask #ChatGPT to convert our table to a JSON file

Pretty impressive, right?

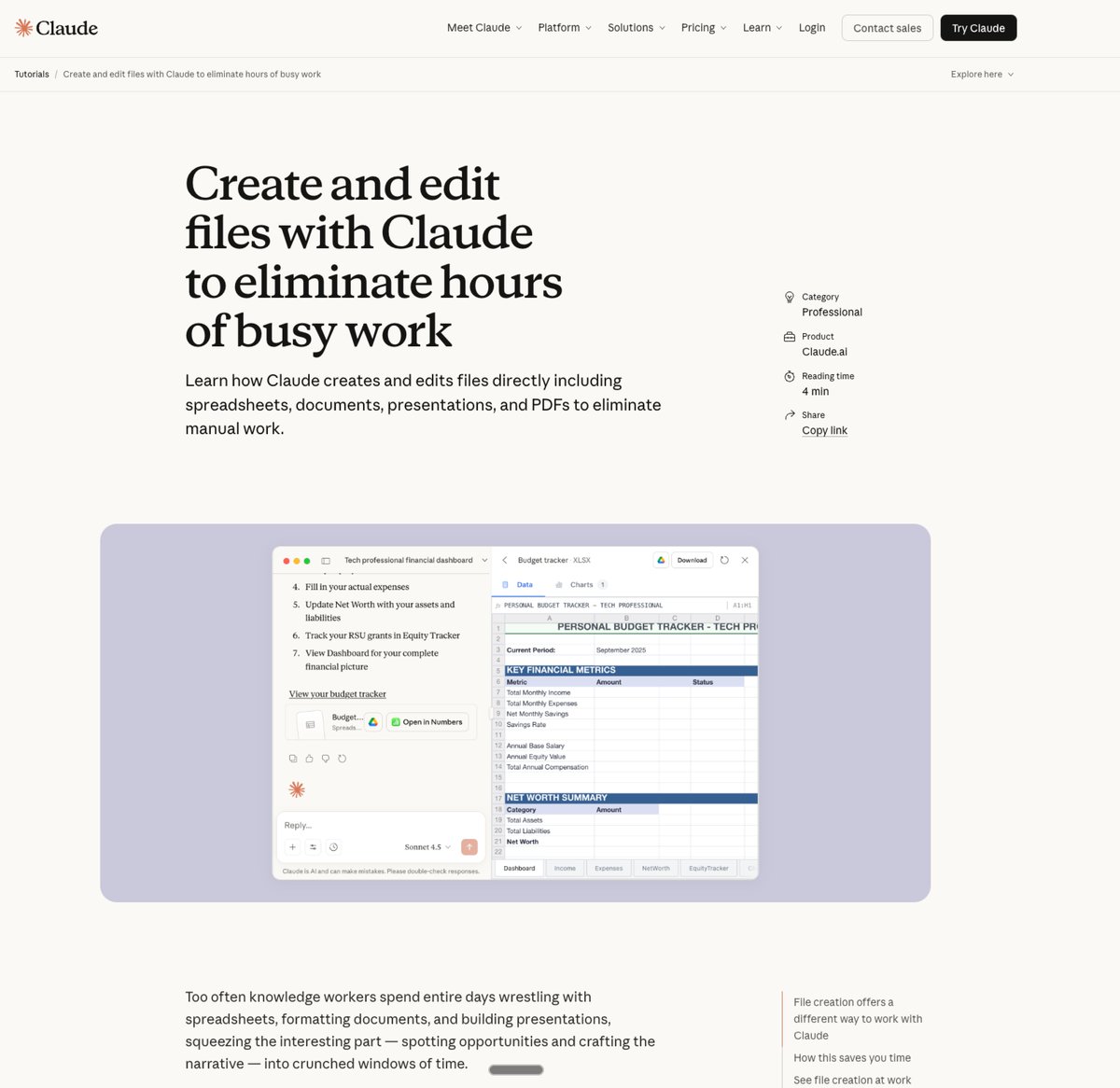
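If you'd rather do the same conversion yourself later, here is a minimal sketch using only Python's standard library. It assumes the table was exported as CSV text; the `table_to_json` helper and the two sample rows are illustrative, not the output #ChatGPT produced in this thread.

```python
import csv
import io
import json


def table_to_json(csv_text):
    """Turn CSV text with a header row into a JSON array of objects."""
    # DictReader uses the first line as the keys for every following row.
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return json.dumps(rows, indent=2)


# Illustrative sample, not the 60-country table from the thread.
sample = "country,language,country code\nFrance,French,FR\nJapan,Japanese,JP\n"
print(table_to_json(sample))
```

Every value comes out as a string, so numeric columns such as `latitude` would still need converting before use.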

Now say you want to store that data in a database (e.g. the excellent @detahq), but you're not sure how to do it.

No problem! We can ask #ChatGPT how to do that

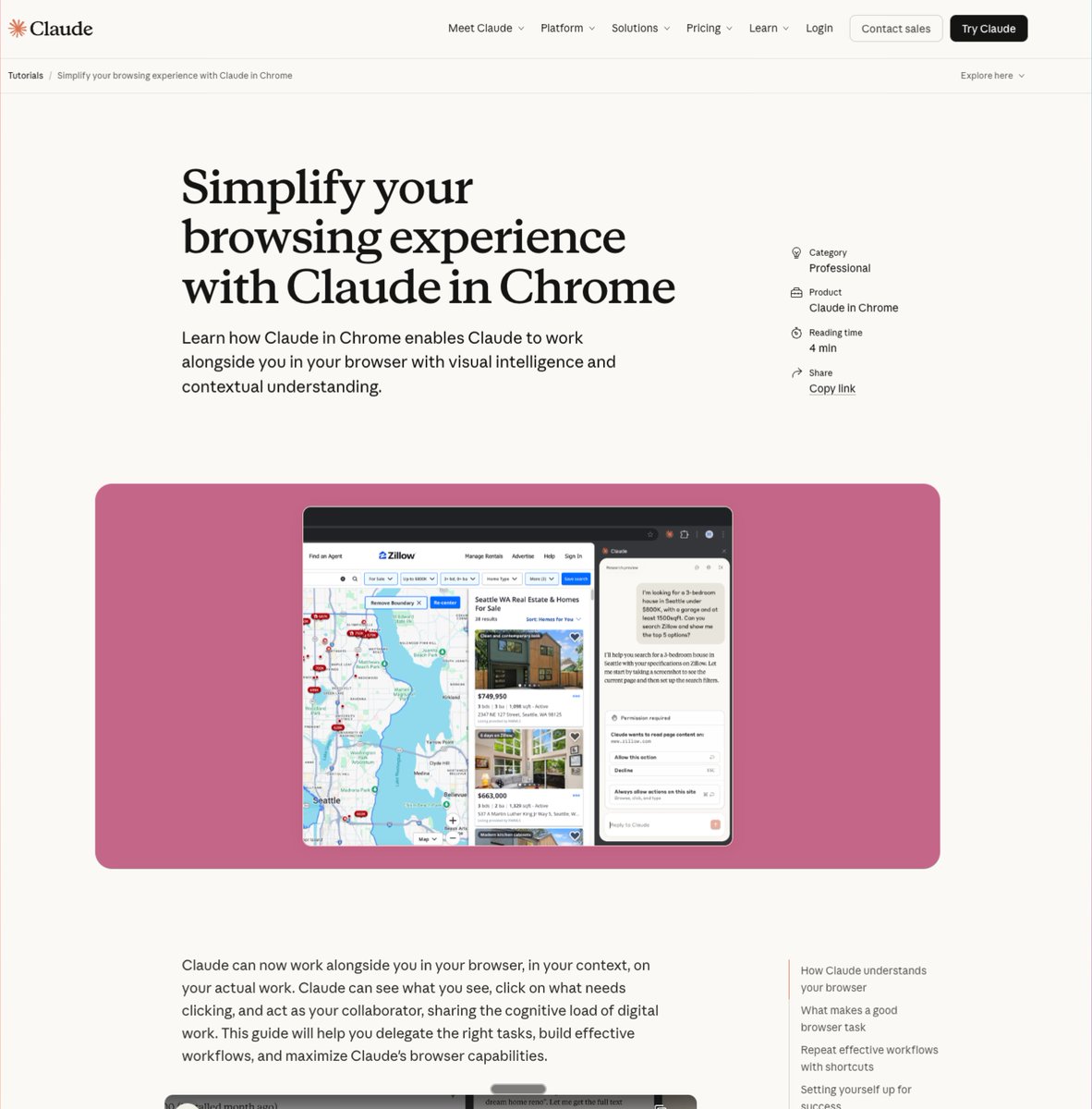

Let's conclude this thread by asking #ChatGPT to create a @streamlit app with a CSV uploader and filter boxes to filter `longitude`, `latitude`, and `country code`.

Not only does #ChatGPT display the code, but it also provides clear explanations for each step! 👏
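For a rough idea of what such an app can look like, here is a minimal sketch. It is not the code #ChatGPT generated. It assumes the uploaded CSV has `latitude`, `longitude`, and `country code` columns, and the `filter_rows` helper is a hypothetical name. The Streamlit import is wrapped in `try`/`except` only so the filtering logic can be tried without Streamlit installed.

```python
import csv
import io

try:
    import streamlit as st  # third-party; only needed for the UI part
except ImportError:
    st = None


def filter_rows(rows, lat_range, lon_range, codes):
    """Keep rows inside both coordinate ranges and, if any codes are given, matching one."""
    kept = []
    for row in rows:
        lat = float(row["latitude"])
        lon = float(row["longitude"])
        if not (lat_range[0] <= lat <= lat_range[1]):
            continue
        if not (lon_range[0] <= lon <= lon_range[1]):
            continue
        # An empty selection means "no country code filter".
        if codes and row["country code"] not in codes:
            continue
        kept.append(row)
    return kept


if st is not None:
    st.title("Country filter")
    uploaded = st.file_uploader("Upload a CSV", type="csv")
    if uploaded is not None:
        text = uploaded.getvalue().decode("utf-8")
        rows = list(csv.DictReader(io.StringIO(text)))
        lat_range = st.slider("latitude", -90.0, 90.0, (-90.0, 90.0))
        lon_range = st.slider("longitude", -180.0, 180.0, (-180.0, 180.0))
        all_codes = sorted({row["country code"] for row in rows})
        codes = st.multiselect("country code", all_codes)
        st.dataframe(filter_rows(rows, lat_range, lon_range, codes))
```

Saved as, say, `app.py`, this would be launched with `streamlit run app.py`.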

This is just a quick overview of what you can do with #ChatGPT.

I'm only scratching the surface here.

For more cool things you can do with it, check out my other thread

https://twitter.com/DataChaz/status/1599754823074598912

If you found this helpful, two requests:

1. Follow me @DataChaz to read more content like this.

2. Share it with an RT, so others can read it too! 🙌

Note that while #AI is capable of handling tasks such as sourcing and sorting, as well as some aspects of app development, it is not yet advanced enough to replace the need for human verification.

Even with its impressive capabilities, AI still requires human oversight.
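Part of that oversight can be automated as a first pass. Here is a minimal sketch that flags obviously malformed values in a generated record. The field names follow the columns requested earlier in this thread; the `find_suspect_fields` helper and the two-letter country code format are assumptions. It only catches impossible values, so a plausible but wrong number still needs checking against a trusted source.

```python
import re


def _in_range(value, low, high):
    """True if value parses as a number between low and high (inclusive)."""
    try:
        return low <= float(value) <= high
    except (TypeError, ValueError):
        return False


def find_suspect_fields(record):
    """Return the names of fields that fail basic sanity checks."""
    suspects = []
    if not _in_range(record.get("latitude"), -90, 90):
        suspects.append("latitude")
    if not _in_range(record.get("longitude"), -180, 180):
        suspects.append("longitude")
    # Assumes two-letter uppercase codes; adjust if the table uses another format.
    if not re.fullmatch(r"[A-Z]{2}", str(record.get("country code", ""))):
        suspects.append("country code")
    return suspects


print(find_suspect_fields({"latitude": "146.2", "longitude": "2.2", "country code": "FR"}))
```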
