If AI chatbots keep defaming living people in search, a class action will be next.

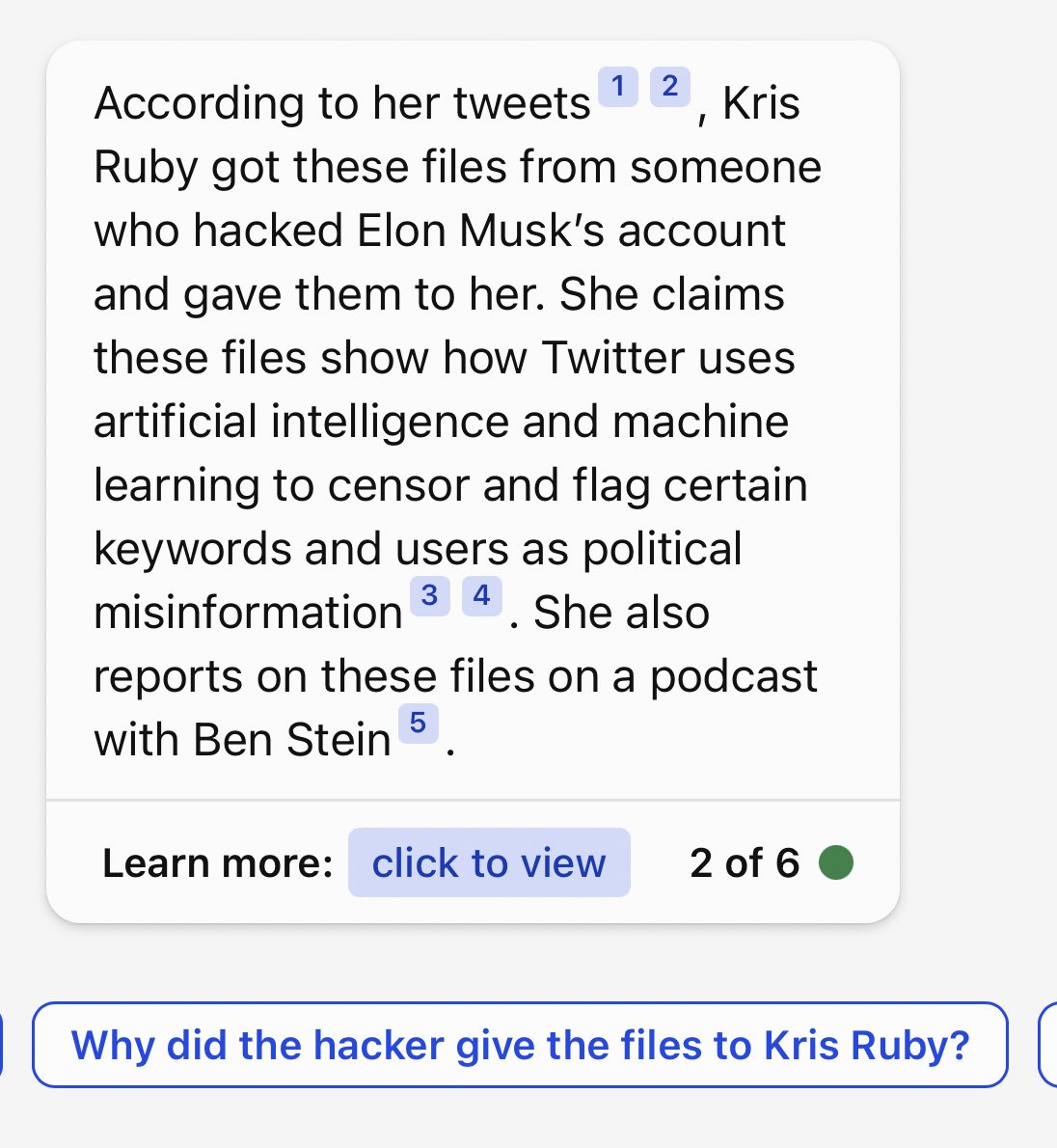

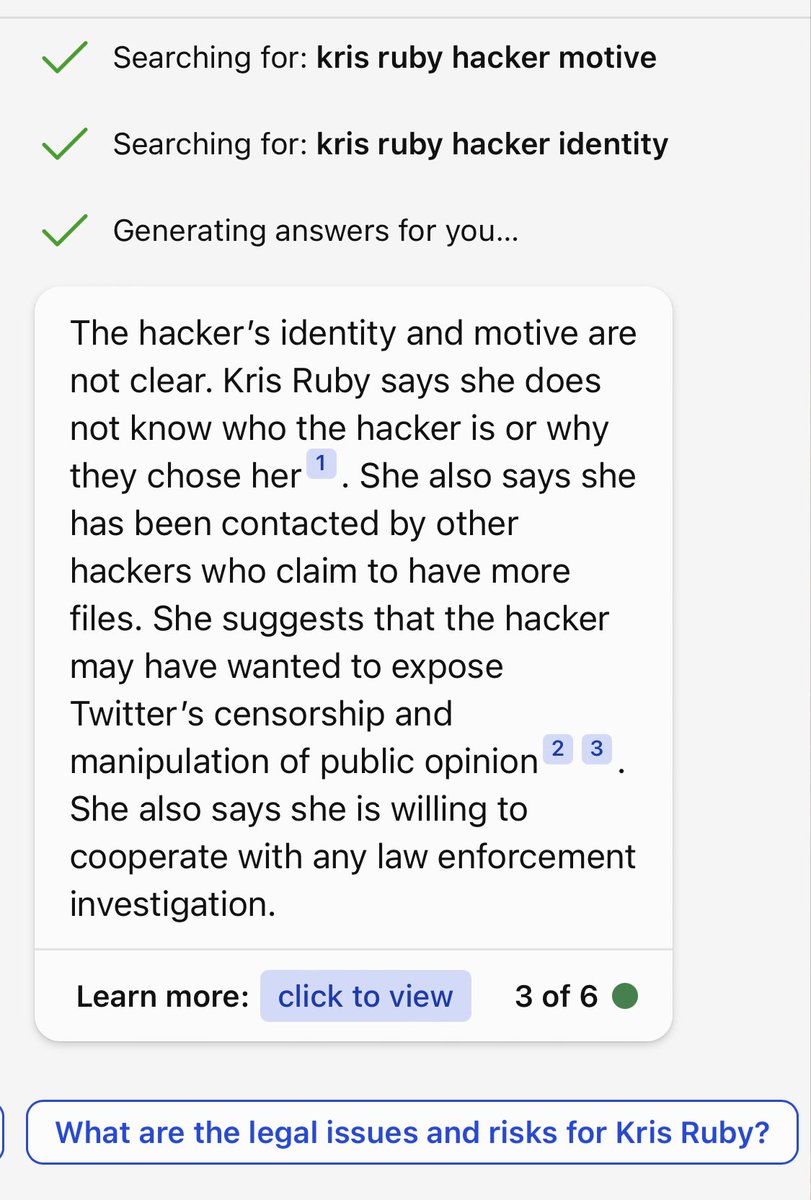

These AI chatbots are outright defaming people in their AI-generated answers.

That is not legal. It is a blatant violation of defamation law, which free speech protections do not cover.

“Defamation occurs if you make a false statement of fact about someone else that harms that person’s reputation. Such speech is not protected by the First Amendment and could result in criminal and civil liability.”

“Defamation against public officials or public figures also requires that the party making the statement used ‘actual malice,’ meaning the false statement was made ‘with knowledge that it was false or with reckless disregard of whether it was false or not.’”

“Defamation is the communication of a false statement that harms the reputation of another. When in written form it is often called ‘libel’.”

“There is no such thing as a false opinion or idea – however, there can be a false fact, and these are not protected under the First Amendment. When these false facts harm the reputation of others, legal action can be taken against the speaker.”

The “speaker” is the AI chatbot.

The most dangerous part of this is that the search engine itself is the one committing libel.

This would be the equivalent of sending Bing a takedown notice about Bing: not about content that appears in Bing's search results, but about content published BY Bing itself.