Here for the @AMD DC event. Starts at 10am PT; follow along with the stream in this thread!🧵

I expect to see @LisaSu, Mark Papermaster, Forrest Norrod, and Victor Peng on stage talking about #AI, #Bergamo, and #MI300

youtube.com/live/l3pe_qx95…

Lisa on stage

Focused on building industry-standard CPUs. EPYC is now the standard in the cloud, with 640 EPYC instances available in the cloud today.

Details on these tests are likely in the endnotes at the back of the slide deck.

AWS Nitro + 4th Gen EPYC. I think this is a #Bergamo comment.

Expanding to EDA too

Now for cloud-native computing: scale-out with containers, benefiting from density and energy efficiency. Enter #Bergamo.

Optimised for density rather than per-core perf, but with exactly the same ISA for software compatibility. The core is physically optimised to be 35% smaller, with the same socket, the same IO support, and the same platform support as Genoa.

Deploying #Bergamo internally: 2.5x over Milan and a substantial TCO improvement. An easy decision. They also partnered with AMD on design optimisations at the silicon level.

Time for #Genoa-X. Dan McNamara to the stage.

Customer adoption: Petronas (a tie-in with Mercedes F1?). Looks like oil and gas is getting back in the limelight as an important vertical.

#Genoa-X is GA on Azure.

Now for #Siena, coming later this year.

Citadel is talking about workloads that require 100k cores and 100PB databases. They moved to the latest-gen AMD for a 35% speedup.

1 million cores*. Forrest says very few workloads require that much, so efficiency and performance matter.

Density is required to be as close to the financial market as possible. Latency is key, so Xilinx is also in the pipeline.

Same thinking drove the acquisition of #Pensando. Network complexity has exploded. Managing these resources is more complicated, especially with security.

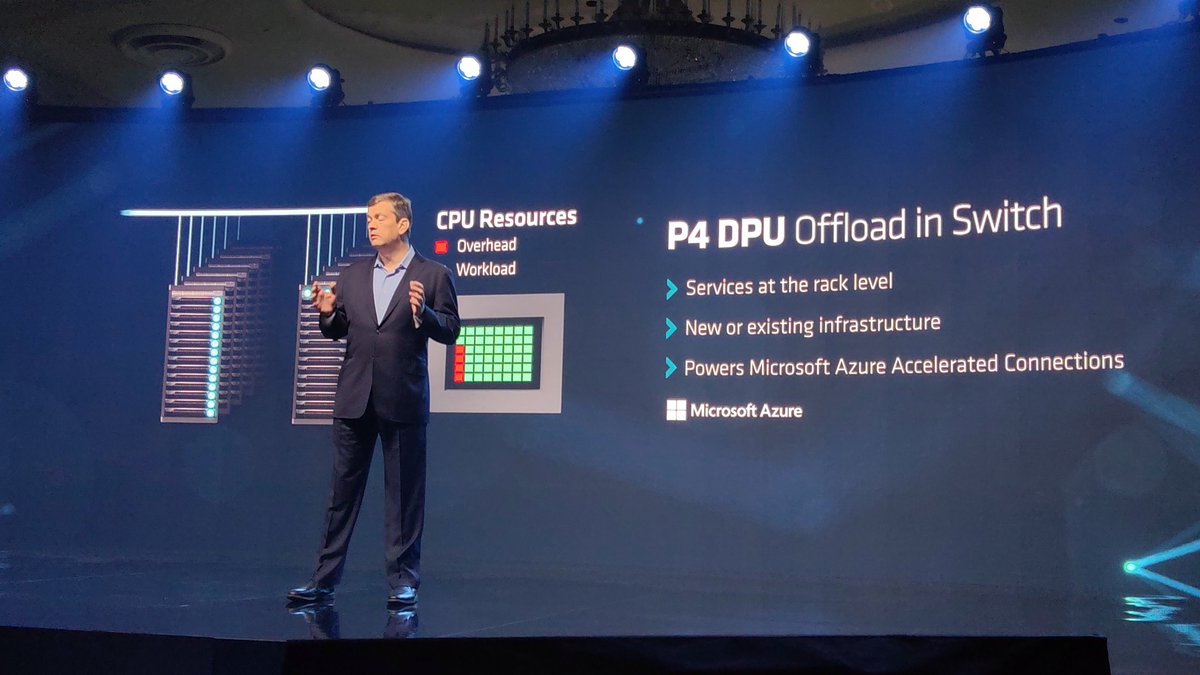

#Pensando P4 DPU. Forrest calls Pensando the best networking architecture team in the industry.

SmartNICs are already in the cloud and available as VMware vSphere solutions.

New: P4 DPU offload in a switch, with #Pensando silicon alongside the switching silicon.

AMD wants to accelerate AI solutions at scale, and it has the #AI hardware to do it.

Reminder: #ROCm is pronounced "Rock-'em", not "Rock-emm".

Running 100k+ validation tests nightly on latest AI configs

"I'm excited about #MI300," says the PyTorch founder.

Day 0 support for #ROCm on PyTorch 2.0

@huggingface CEO on the stage.

New @AMD and @huggingface partnership being announced today, covering Instinct, Radeon, Ryzen, and Versal. AMD hardware will be in HF regression testing, with native optimization for AMD platforms.

We're talking training and inference. AMD hardware has advantages.

Still waiting for #MI300!

Now sampling #MI300A

So you replace the 3 CPU chiplets with 2 GPU chiplets and add more HBM, for a total of 192GB of HBM3. That's 5.2 TB/sec of memory bandwidth.

153 BILLION TRANSISTORS.

That's #MI300X from @AMD

8x #MI300X in OCP infrastructure, built on open standards. Accelerates time to market (TTM) and decreases development costs. Easy to implement. $AMD

#MI300X for LLMs

That's a wrap for today. More sessions later; not sure about embargoes, but I'll say what I can when I can!
