LLM fine-tuning is here!

San Francisco’s top AI engineers came together to see what’s possible with fine-tuning and only 4 hours of hacking.

Here’s an exclusive look at what we saw at the “Anything But Wrappers” hackathon (🧵):

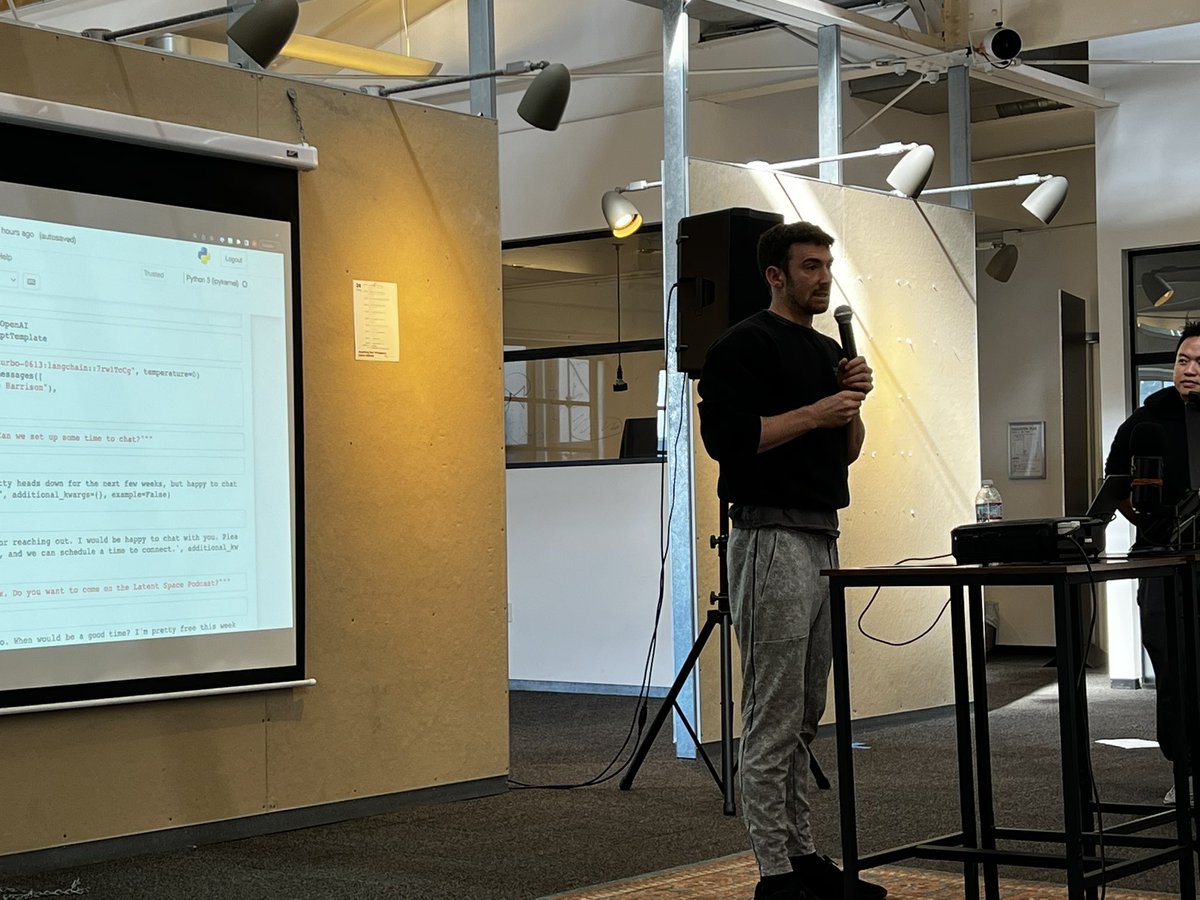

1/ Fine-tuning on emails

Harrison fine-tuned GPT-3.5 on his emails to politely reject notes from VCs and respond to cool guys like @swyx

@hwchase17 @LangChainAI

2/ “Launching a bunch of cloud resources all at once to rack up a huge bill”

Plus, a shoutout to alphachive, an internal tool used by researchers at Stanford to rate papers

🏅 Winner: Most expensive

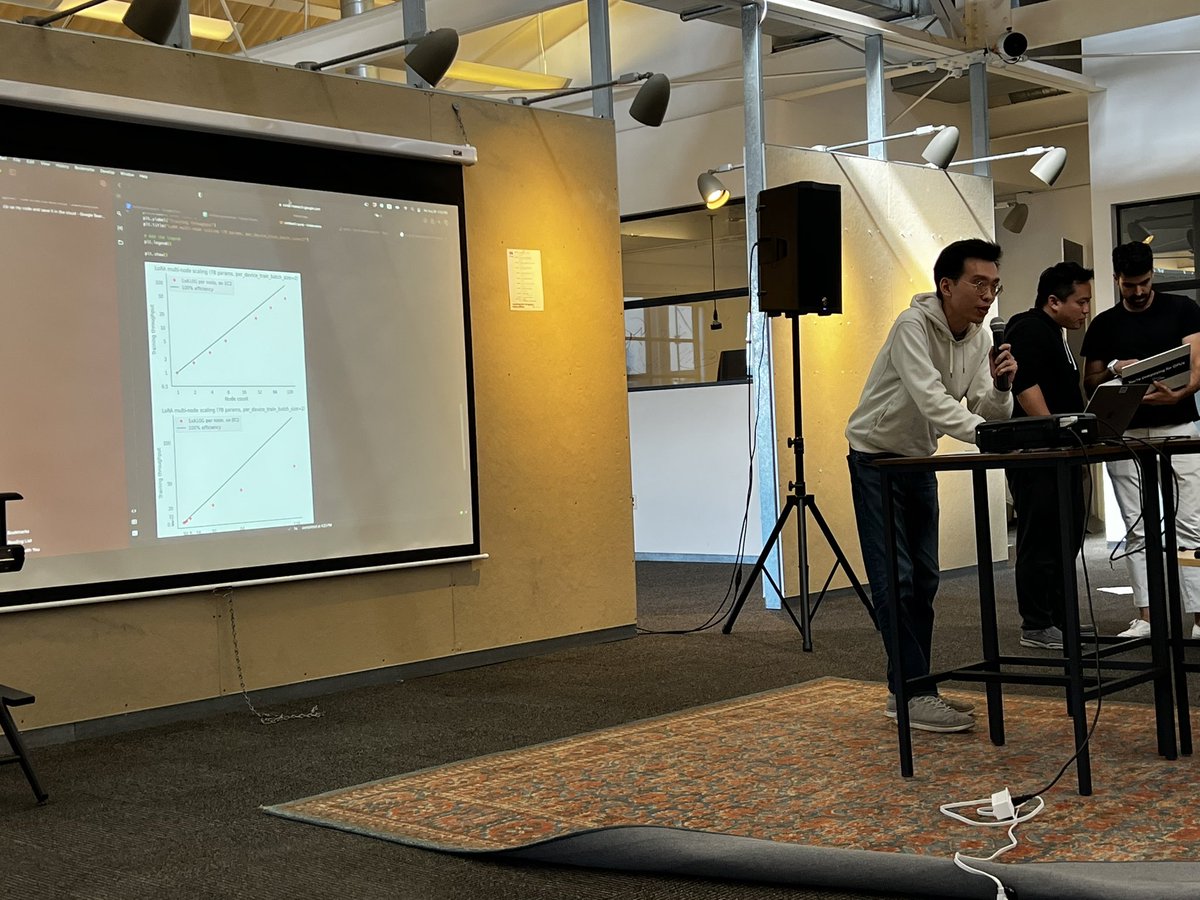

4/ “What if we distribute?”

You can train a LoRA on 128 GPUs on different machines with only 50% overhead

Cool experiment by @ericyu3_

5/ Llama Linter

A fine-tuned model that learned to lint JavaScript better than GPT-3.5 and even GPT-4

@rachpradhan @chinzonghan3101

🏅 Most practical (tie)

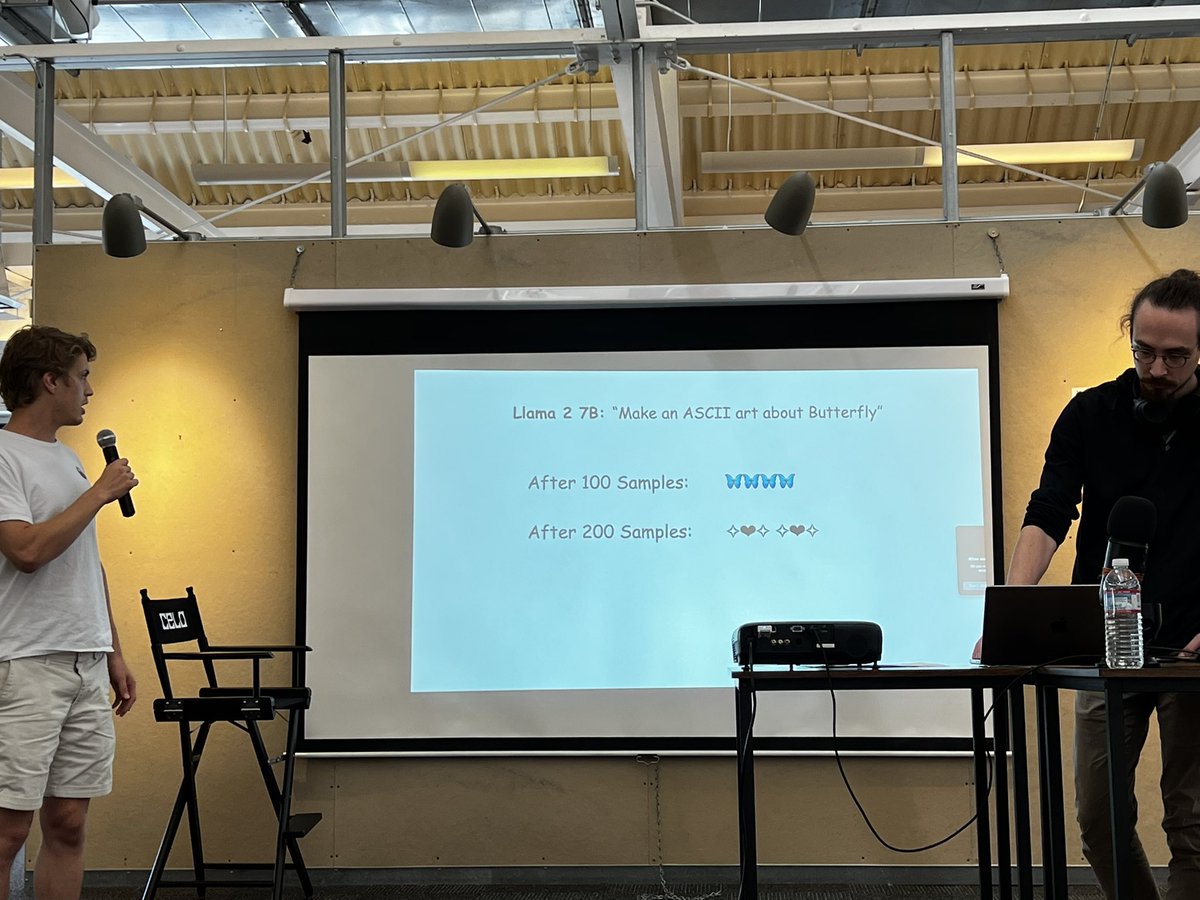

6/ Creative ASCII

A fine-tuned GPT-3 that generates ASCII art from any text prompt

@jamesmurdza

🏅 Most creative

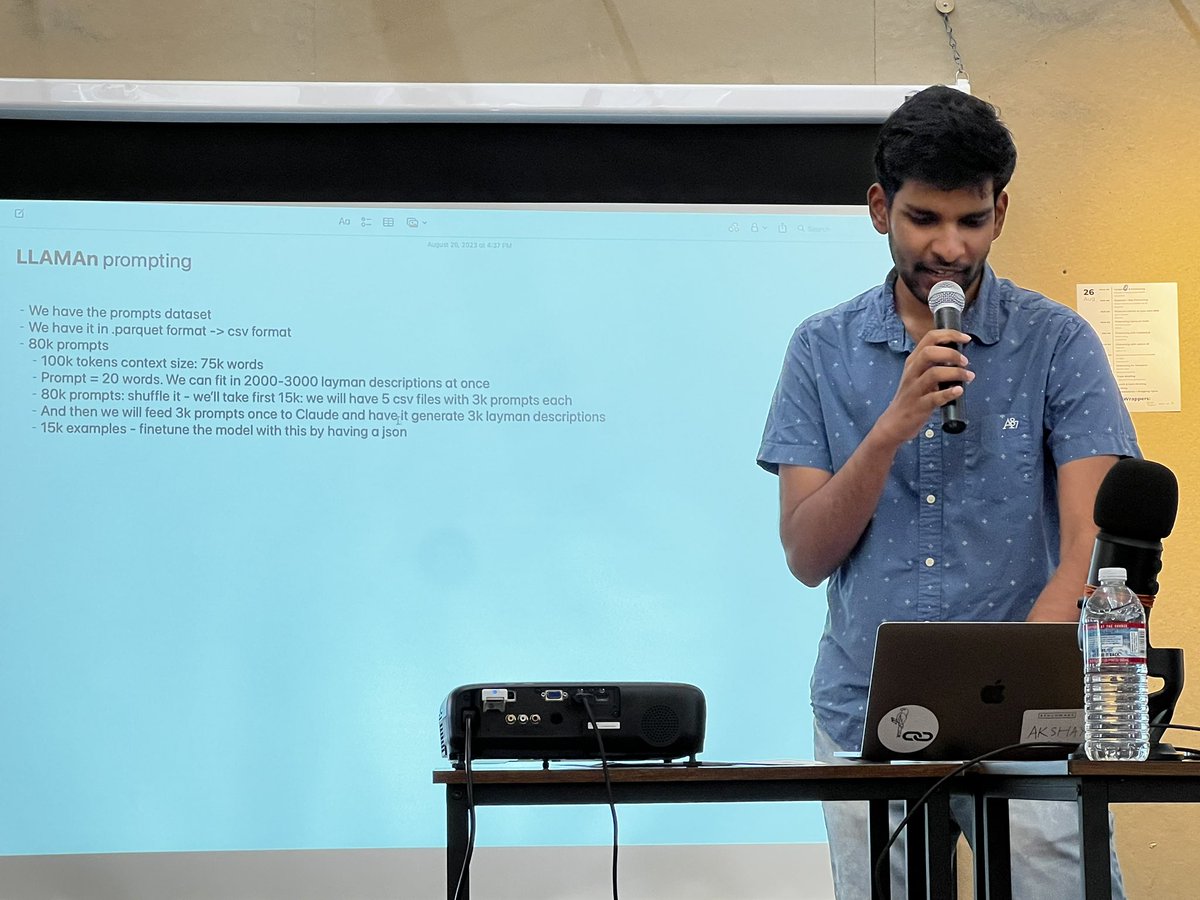

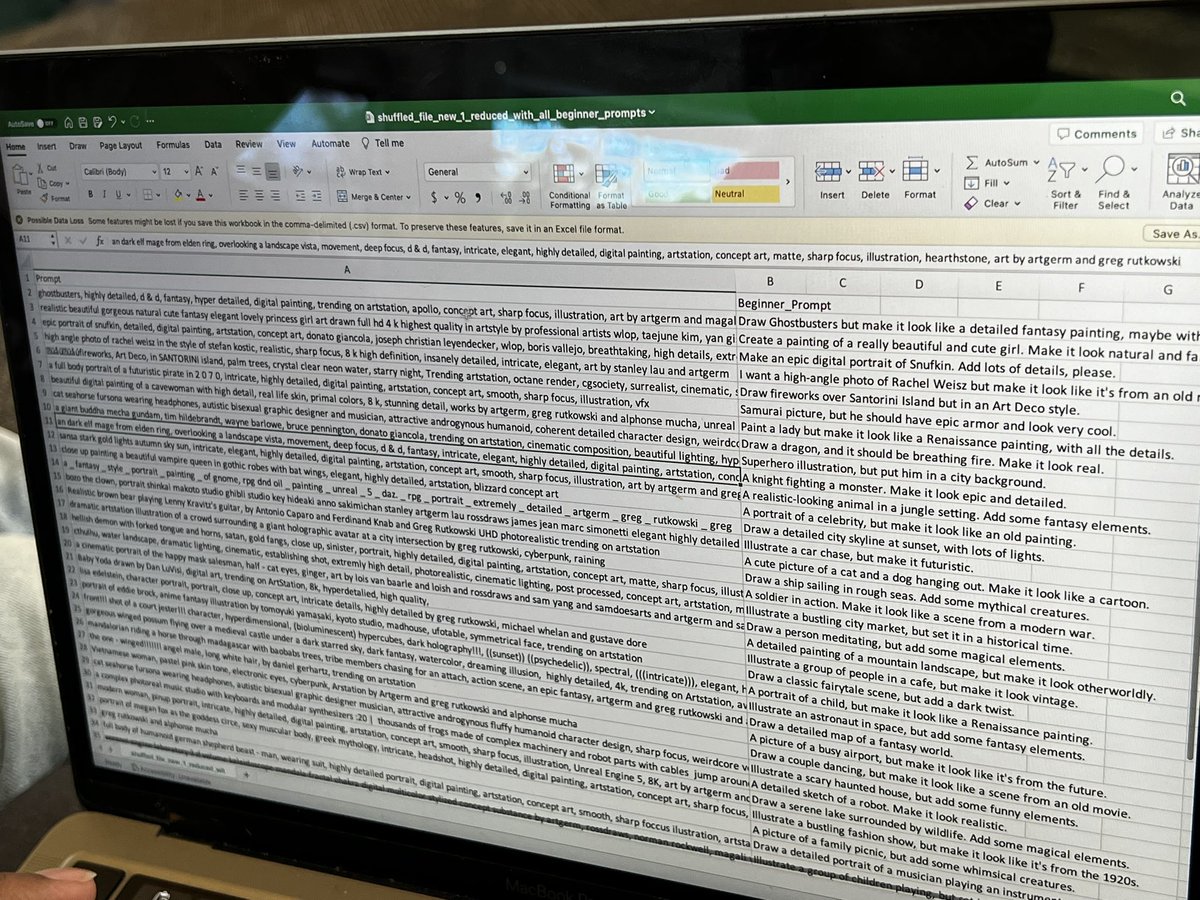

7/ Text to Prompt

Give a simple text description and have a fine-tuned model turn it into a prompt suitable for DALL-E or Midjourney

@akshayvkt @realyogeshdarji

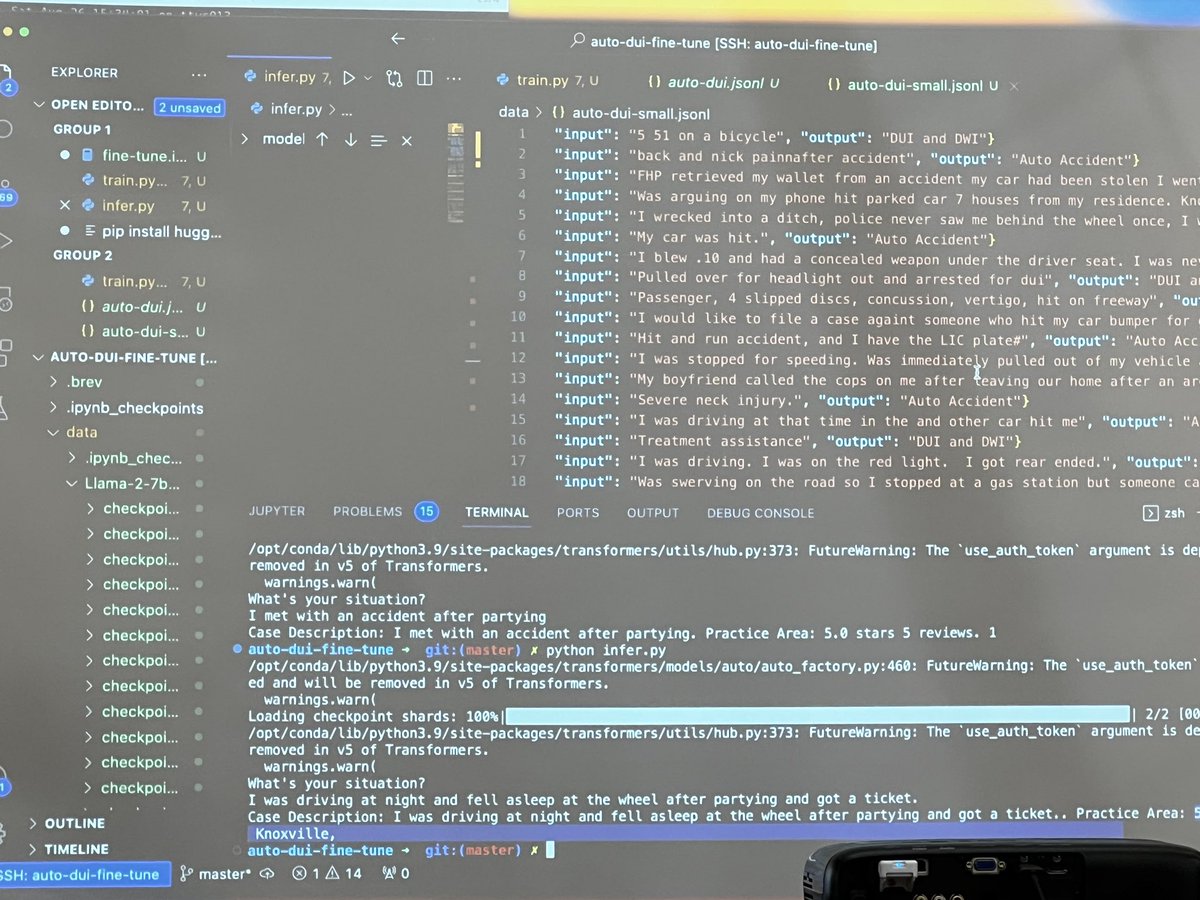

8/ Fine-tuned LLaMA as a supervised learner

Instead of training a small transformer to classify queries, just give them to LLaMA

🏅 Most practical (tie)

That’s all for this time!

Follow me @AlexReibman for more live reports on the SF hacker ecosystem

And huge thanks to the sponsors:

@AmplifyPartners @CRV @brevdev @CeloOrg @latentspacepod @LangChainAI @replicatehq @anyscalecompute @metaphorsystems @_FireworksAI @phindsearch

https://twitter.com/alexreibman/status/1692009609953993069