Developed the IAT, GNAT, and SPF. Co-founded Project Implicit, Society for the Improvement of Psychological Science, and the Center for Open Science.

https://twitter.com/BrianNosek/status/1587024198567952386

Context: You might be tempted to post new content on both platforms until it is clear that Mastodon is going mainstream. This WILL NOT work.

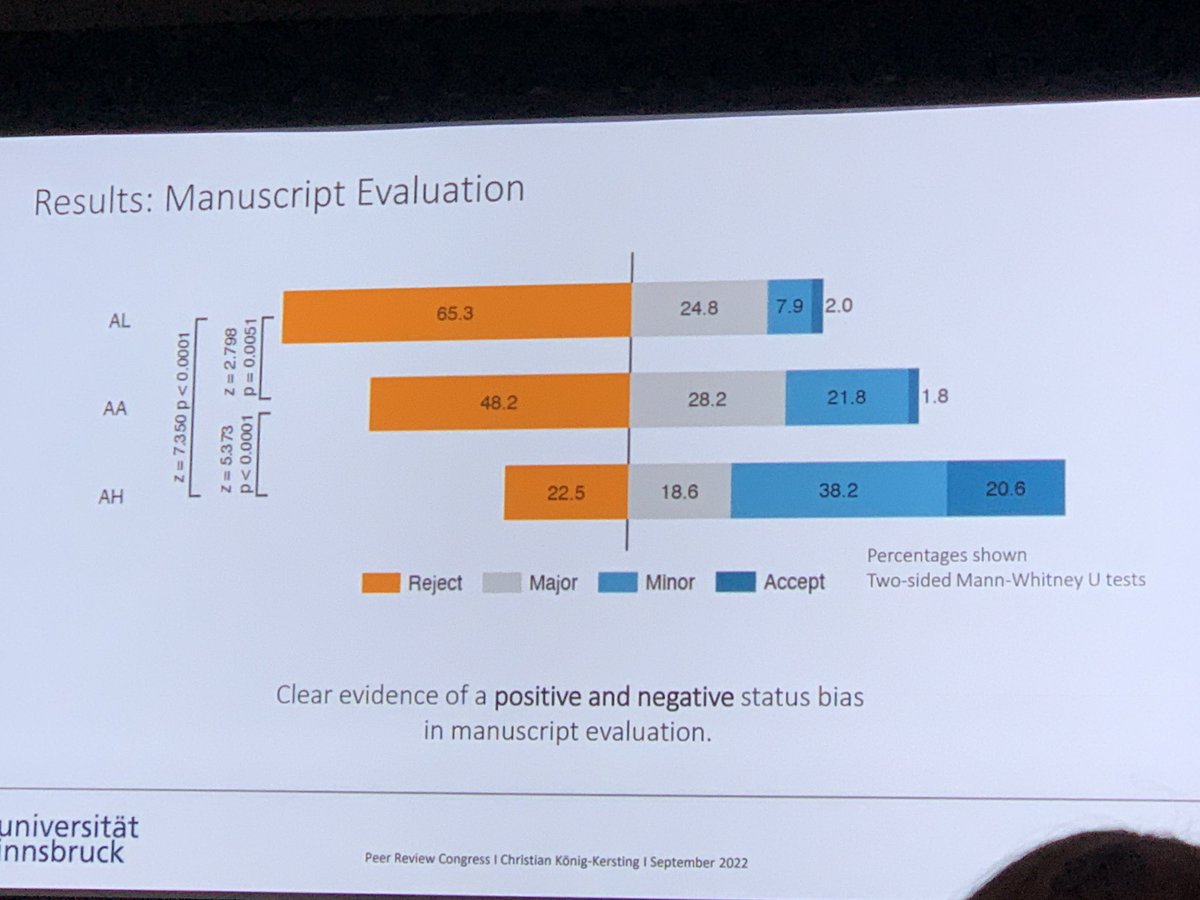

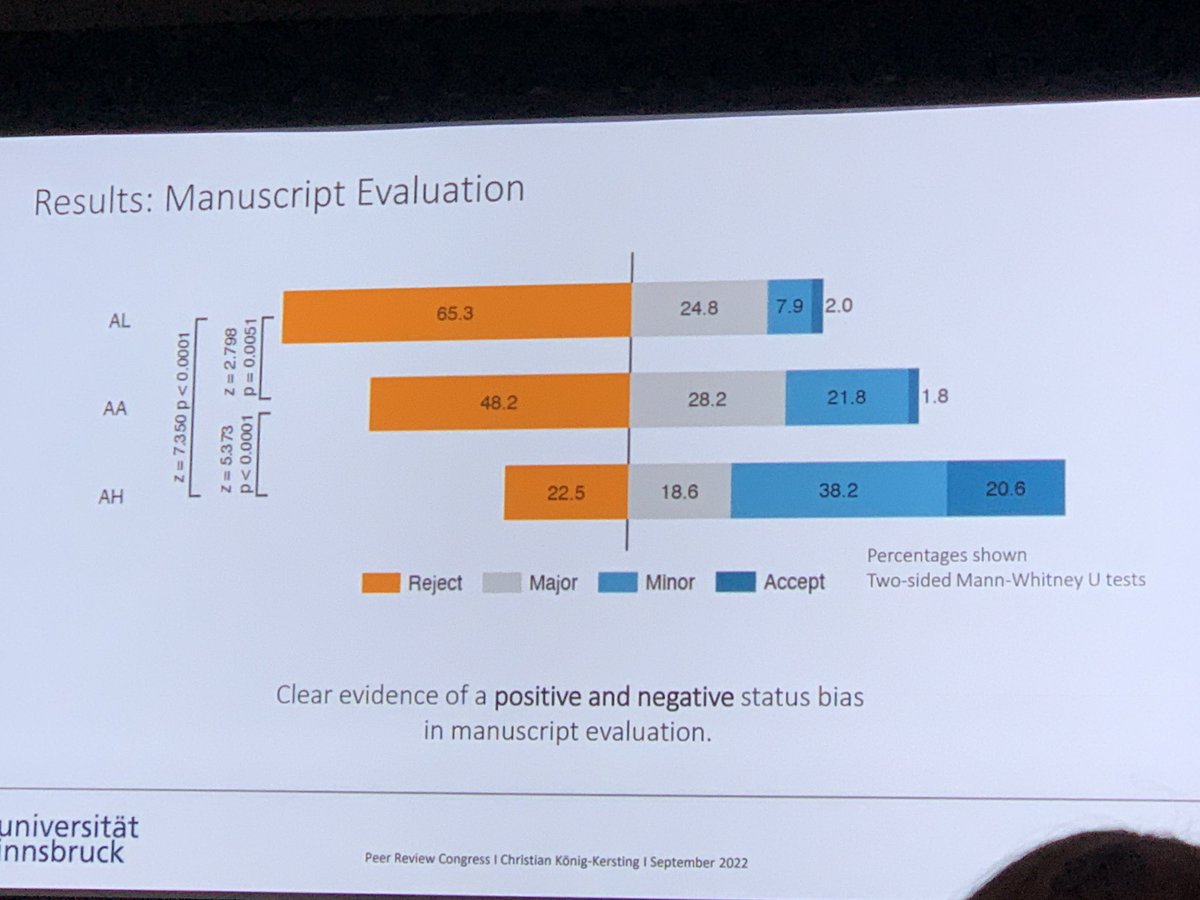

Or, look at it another way. If the reviewers knew only the low status author, just 2% said to accept without revisions. If the reviewers knew only the high status author, almost 21% said to accept without revisions.

https://twitter.com/westwoodsam1/status/1522491798949609472

Key downsides that needed managing for me: (a) dysfunctional culture that rewarded flashy findings over rigor and my core values, (b) extremely competitive job market, and (c) mysterious and seemingly life-defining "tenure"

Most of our prior taste tests were amenable to some blinding such as comparing fast food chicken and fries and testing generic vs. brand-name.

r > .50?

https://twitter.com/BrianNosek/status/1408081726044319749

@Edit0r_At_Large The journal did invite a resubmission if we wanted to try to address them. However, we ultimately decided not to resubmit because of timing. We had a grant deadline to consider.

Co-authors: @Tom_Hardwicke @hmoshontz @AllardThuriot @katiecorker Anna Dreber @fidlerfm @JoeHilgard @melissaekline @MicheleNuijten @dingding_peng Felipe Romero @annemscheel @ldscherer @nicebread303 @siminevazire 2/

Came downstairs and the tree is transformed with whoopee cushions, fake(?) poop, and cockroaches all over it.

https://twitter.com/Maxyusc/status/1132226934690029568

For example, the first time preregistering an analysis plan, many people report being shocked at how hard it is without seeing the data. It produces a recognition that our analysis decision-making (and hypothesizing) had been much more data contingent than we realized. 2/

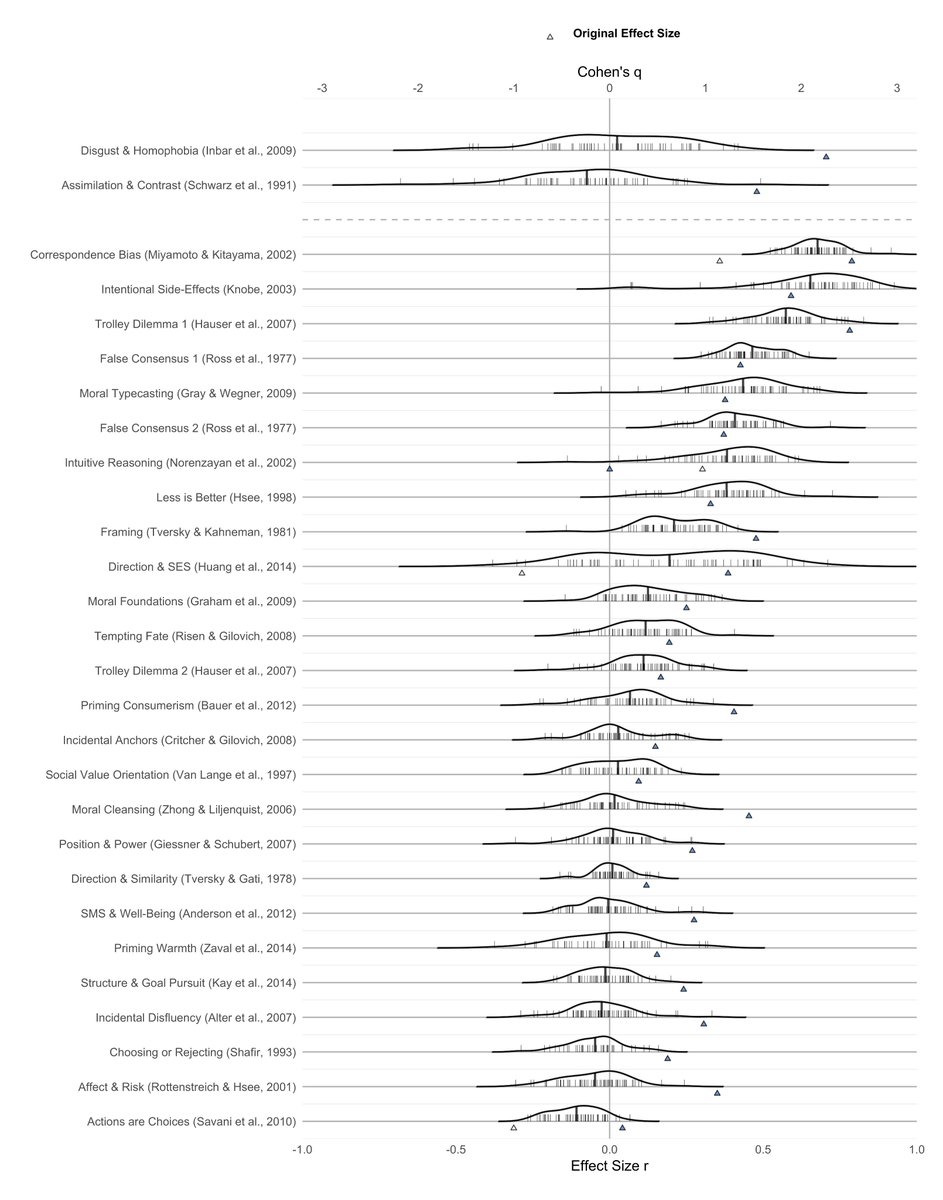

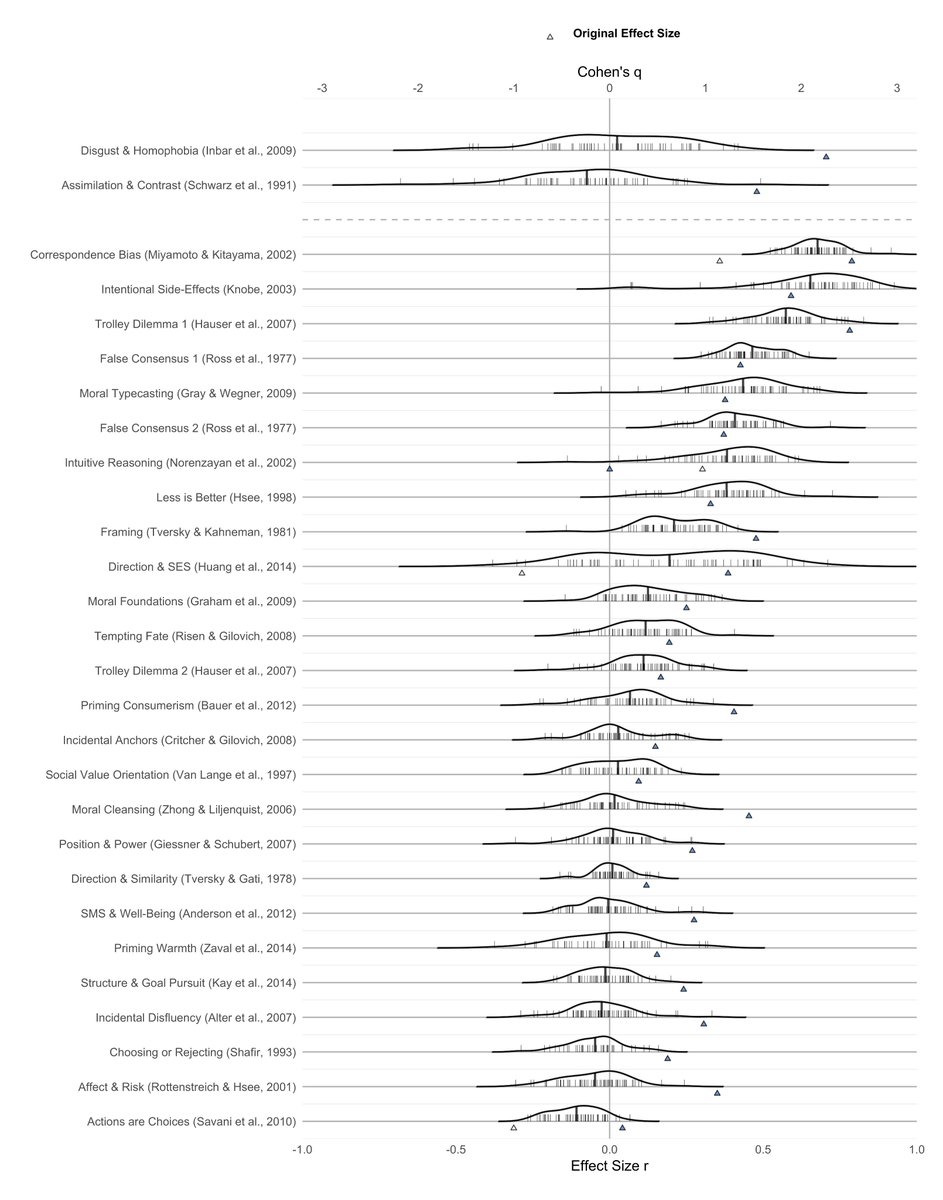

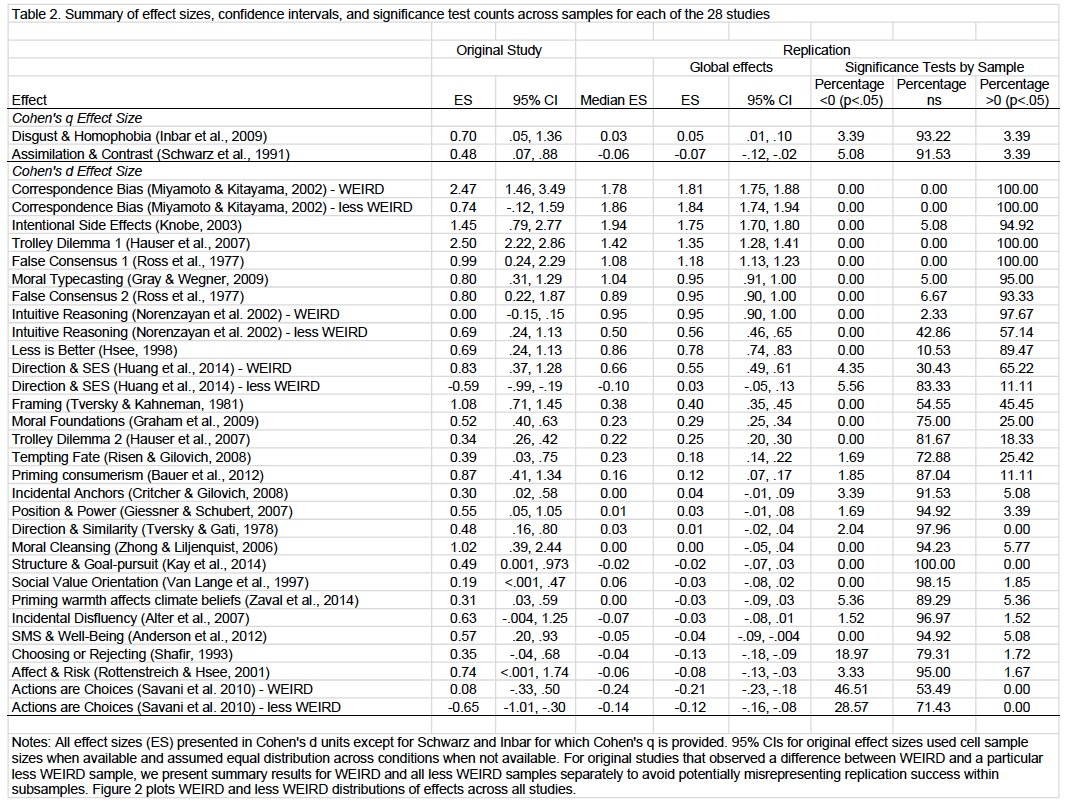

ML2 minimized boring reasons for failure. First, by using original materials & Registered Reports (cos.io/rr), all 28 replications met expert-reviewed quality control standards. Failure to replicate is not easily dismissed as replication incompetence. psyarxiv.com/9654g

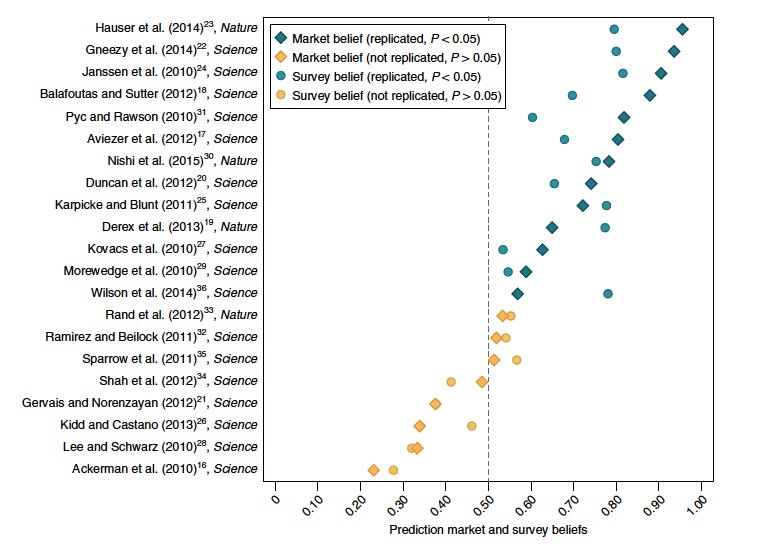

Using prediction markets we found that researchers were very accurate in predicting which studies would replicate and which would not. (blue=successful replications; yellow=failed replications; x-axis=market closing price) socarxiv.org/4hmb6/ nature.com/articles/s4156… #SSRP
