Schmidhuber’s take on whether it makes sense to ban large language models like GPT in education, and on the future of human labor.

https://twitter.com/enpitsu/status/1610587513059684353

This is similar to the “Fake Kanji” experiments with recurrent neural networks I did many years ago, when computers were 1000x less powerful :) It’s kind of fun to see updated results with modern diffusion models.

This release is led by @robrombach @StabilityAI

https://twitter.com/AaronHertzmann/status/1549091375928397824

Here’s another four samples of the same prompt.

You can clearly see this, because prompts for images that go viral with one model often don’t “work” with another model.

https://twitter.com/_nateraw/status/1541830523194056704

https://twitter.com/memesiwish/status/1502260368613130244

Oh boy, this is going to be a fun thread.

https://twitter.com/janellecshane/status/1530988224864063488

Interesting observation.

https://twitter.com/hardmaru/status/1530415349367070720

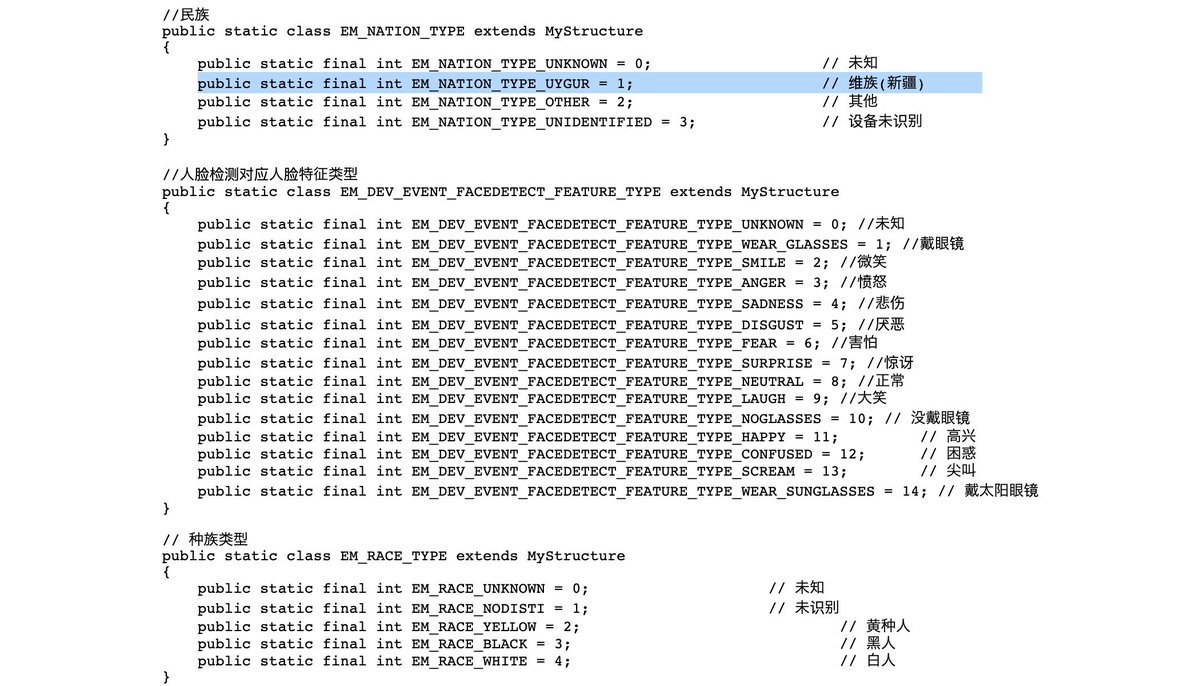

https://twitter.com/pmddomingos/status/1336187141366317056

Maybe we can start with “Facial feature discovery for ethnicity recognition” (published by @WileyInResearch in 2018):

https://twitter.com/hardmaru/status/1135377283940700160

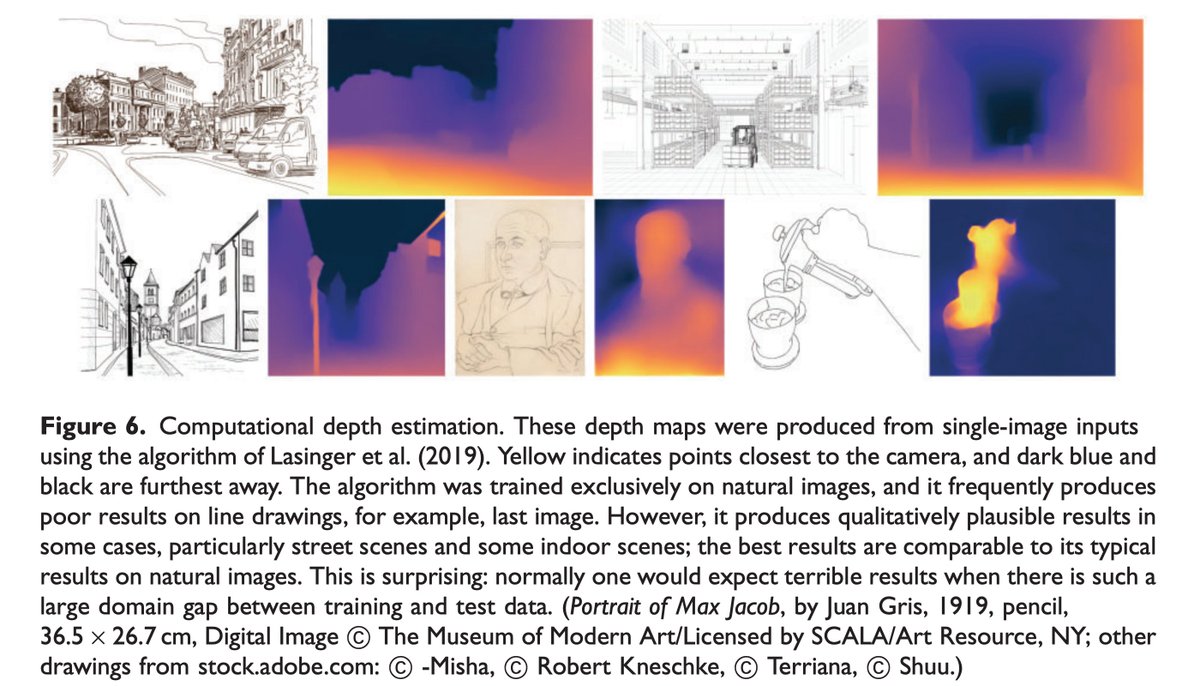

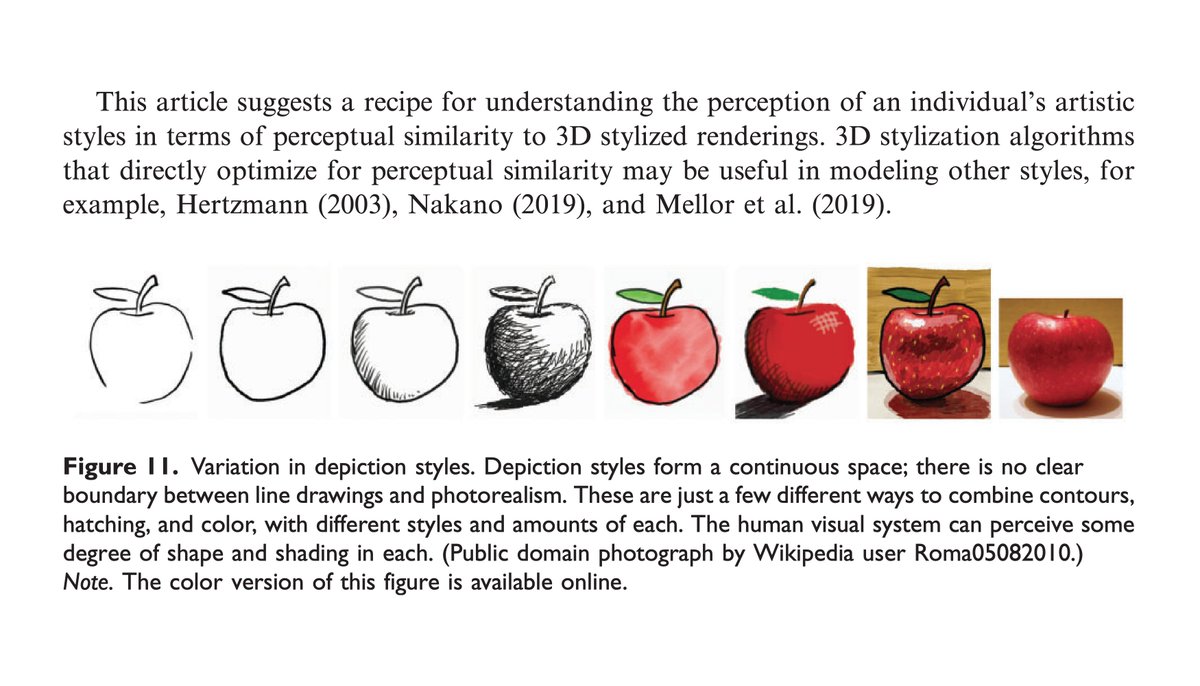

The coolest result in this paper: they took a depth estimation model (single-image input) trained on natural images (arxiv.org/abs/1907.01341) and showed that the pre-trained model also works on certain types of line drawings, such as drawings of streets and indoor scenes.