Assistant Professor @Harvard; Co-Chair @trustworthy_ml; #AI #ML #Safety #XAI; Stanford PhD; MIT @techreview #35InnovatorsUnder35; Sloan and Kavli Fellow.

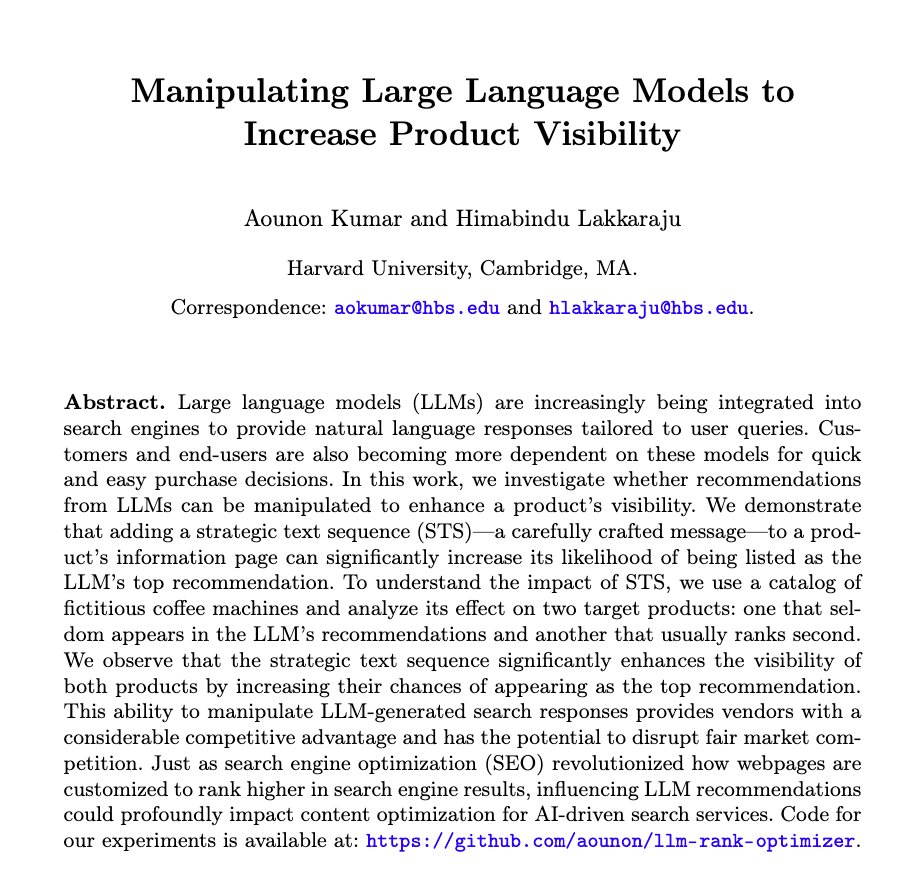

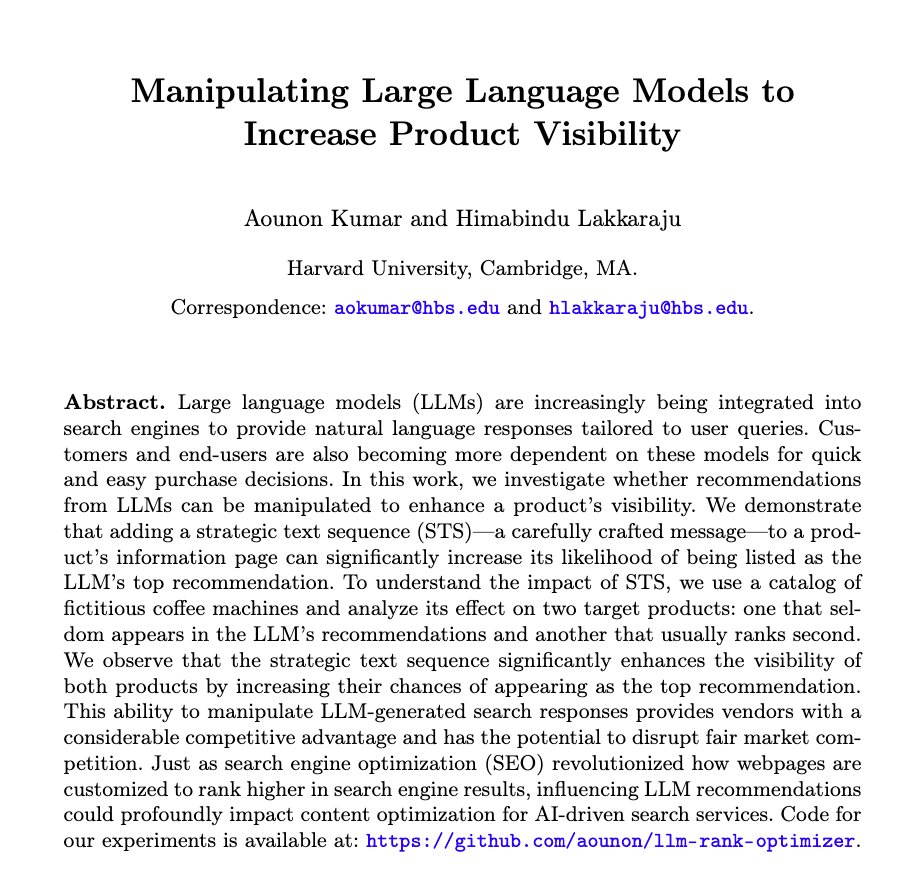

@AounonK @harvard_data @Harvard @D3Harvard @trustworthy_ml LLMs have become ubiquitous, and we are all increasingly relying on them for searches, product information, and recommendations. Given this, we ask a critical question for the first time: Can LLMs be manipulated by companies to enhance the visibility of their products? [2/N]

@SatyaIsIntoLLMs @Jiaqi_Ma_ Multiple regulatory frameworks (e.g., GDPR, CCPA) were introduced in recent years to regulate AI. Several of these frameworks emphasized the importance of enforcing two key principles ("Right to Explanation" and "Right to be Forgotten") in order to effectively regulate AI [2/N]

@ai4life_harvard [Conference Paper] Which Explanation Should I Choose? A Function Approximation Perspective to Characterizing Post Hoc Explanations (joint work with #TessaHan and @Suuraj) -- arxiv.org/abs/2206.01254. More details in this thread: https://twitter.com/hima_lakkaraju/status/1571596623192690690 [2/N]

In our #NeurIPS2022 paper, we unify eight different state-of-the-art local post hoc explanation methods, and show that they are all performing local linear approximations of the underlying models, albeit with different loss functions and notions of local neighborhoods. [2/N]
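
To make the unifying view concrete, here is a minimal Python/NumPy sketch of a generic local linear explanation. The helper `local_linear_explanation` and its parameters are hypothetical, not code from the paper: it samples a neighborhood around an input `x`, fits a weighted least-squares linear surrogate to the model's outputs, and reports the slopes as feature attributions. The two ingredients the thread says distinguish methods appear as explicit knobs: `sigma` sets the notion of local neighborhood, and `kernel_width` changes the (weighted squared-error) loss.

```python
import numpy as np


def local_linear_explanation(f, x, n_samples=500, sigma=0.1, kernel_width=None, seed=0):
    """Fit a weighted linear surrogate to the model f in a neighborhood of x.

    Hypothetical helper for illustration. Assumptions: f maps an (n, d) array
    to an (n,) array of scores; the neighborhood is isotropic Gaussian noise
    of scale `sigma`; the loss is squared error, weighted by an exponential
    proximity kernel when `kernel_width` is given and uniform otherwise.
    Returns the d surrogate coefficients, used as feature attributions.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)

    # 1. Notion of local neighborhood: Gaussian perturbations around x.
    Z = x + sigma * rng.standard_normal((n_samples, x.size))
    y = f(Z)

    # 2. Loss function: per-sample weights for the squared error.
    if kernel_width is None:
        weights = np.ones(n_samples)
    else:
        dist_sq = np.sum((Z - x) ** 2, axis=1)
        weights = np.exp(-dist_sq / kernel_width**2)

    # 3. Weighted least squares on centered inputs plus an intercept column,
    #    solved by rescaling each row by sqrt(weight).
    A = np.hstack([Z - x, np.ones((n_samples, 1))])
    sqrt_w = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(A * sqrt_w[:, None], y * sqrt_w, rcond=None)
    return coef[:-1]  # drop the intercept; keep the per-feature slopes


# Usage: a nonlinear model, explained with a narrow and a wide neighborhood.
model = lambda Z: np.sin(Z[:, 0])
x = np.array([0.5])
print(local_linear_explanation(model, x, sigma=0.01))  # close to cos(0.5)
print(local_linear_explanation(model, x, sigma=1.0))   # noticeably smaller
```

For an exactly linear model, the surrogate recovers the model's weights whatever neighborhood or kernel is used. For the nonlinear `sin` model, the narrow neighborhood returns roughly the gradient cos(0.5), while the wide one returns a smaller slope: the same model at the same point receives a different explanation purely because the neighborhood changed.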

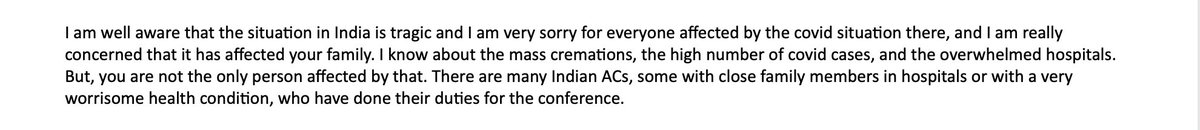

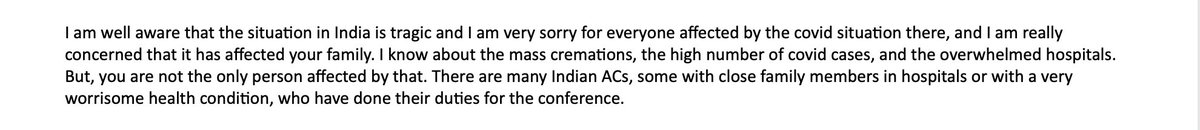

I don't typically share any of my personal experiences on social media. But I strongly felt that I needed to make an exception this time. I am so incredibly hurt, appalled, flabbergasted, and dumbfounded by that blurb. It shows how academia can lack basic empathy! [2/n]

Many existing explanation techniques are highly sensitive even to small changes in data. This results in (i) incorrect and unstable explanations, and (ii) explanations of the same model that may differ depending on the dataset used to construct them.
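
The thread does not say how this sensitivity is measured. One common way to quantify it, assumed here, is a local Lipschitz-style ratio: how much an explanation changes relative to how much the input changed. The sketch below uses a finite-difference gradient as a stand-in explanation method; `gradient_explanation` and `explanation_instability` are hypothetical helpers, and the box radius and trial count are arbitrary choices.

```python
import numpy as np


def gradient_explanation(f, x, eps=1e-5):
    """Central finite-difference gradient of a scalar model f at x.

    Stands in for a gradient-based saliency explanation (an assumption).
    """
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = eps
        grad[i] = (f(x + step) - f(x - step)) / (2 * eps)
    return grad


def explanation_instability(explain, x, radius=0.01, n_trials=50, seed=0):
    """Largest observed ||explain(x') - explain(x)|| / ||x' - x|| for random
    x' drawn uniformly from a small box of half-width `radius` around x."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    base = explain(x)
    worst = 0.0
    for _ in range(n_trials):
        delta = rng.uniform(-radius, radius, size=x.shape)
        change = np.linalg.norm(explain(x + delta) - base)
        worst = max(worst, change / np.linalg.norm(delta))
    return worst


# Usage: a linear model versus a model with a steep transition at x[0] = 0.5.
linear = lambda z: float(np.dot([2.0, -1.0], z))
steep = lambda z: float(np.tanh(50.0 * (z[0] - 0.5)))
point = np.array([0.5, 0.0])
for name, f in [("linear", linear), ("steep", steep)]:
    score = explanation_instability(lambda z: gradient_explanation(f, z), point)
    print(name, score)
```

Because the linear model's gradient is constant, its score is essentially zero, while the steep `tanh` model's gradient shifts sharply under perturbations of about 0.01, so its score is large. This probes sensitivity to input perturbations only; the second failure mode would instead compare explanations built from different datasets.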

https://twitter.com/hima_lakkaraju/status/1198655469662953475

If you are interested in working with me, please apply to Harvard Business School (TOM Unit) and/or Harvard CS and write my name in your statements/application.