AI engineer, digital artist, public speaker, online educator and co-founder of @eden_art_ and https://t.co/BDW8z5h0Fd. Also building https://t.co/9DVk5HJtI4


The fact that all this visual splendor is compressed into just 4 GB of neural network weights totally blows my mind. Call it compression, call it emergence, it's just 🤯🤯

https://twitter.com/xsteenbrugge/status/1556099146762919937?t=elwa804pYDGdmmlB8Q-Y6g&s=19

https://twitter.com/LUKEILLUSTRATE/status/1559697309184802819

Next, I had to come up with a visual narrative that would work well with the style of the Diffusion interpolations. You can't just tell any story here: like with any medium, you have to work within the constraints of the technology. (2/n)

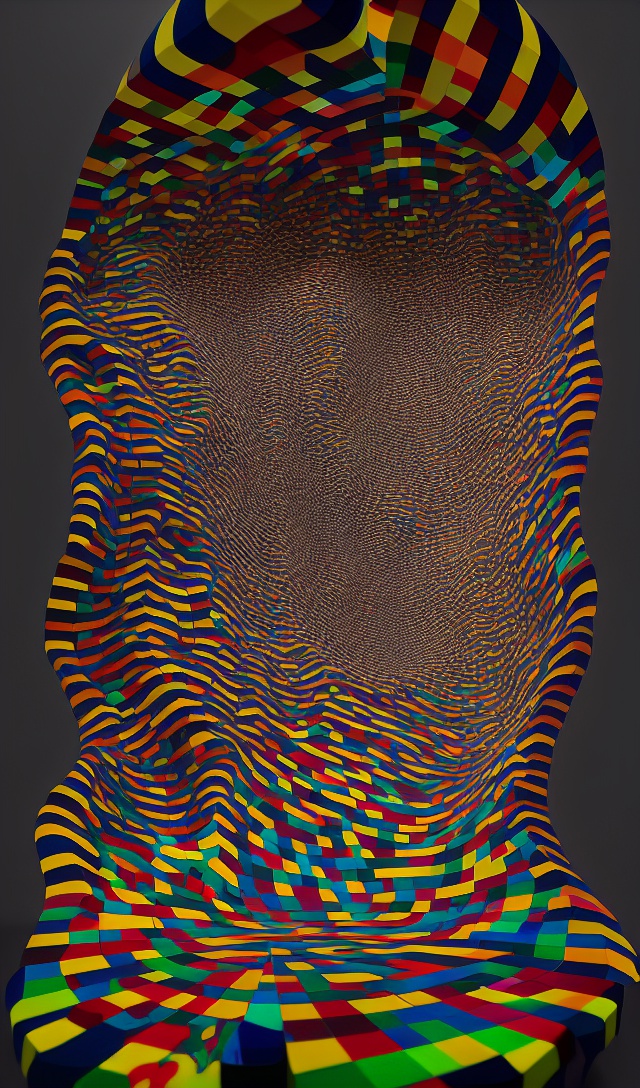

"The magnificent portal of mother Gaia"

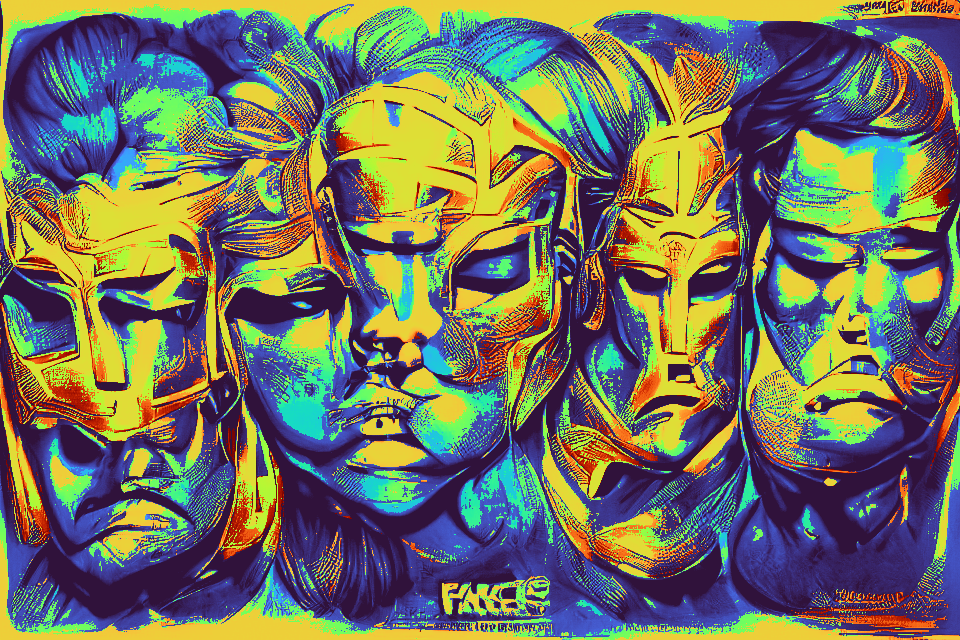

"The real problem of humanity is that we have Paleolithic emotions, medieval institutions and godlike technology"

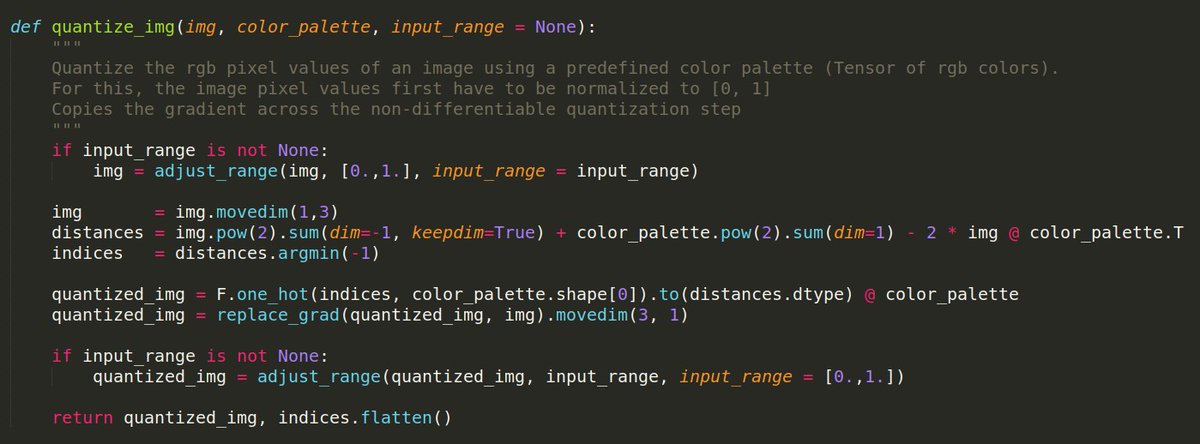

https://twitter.com/ak92501/status/1445872262771478529

TLDR:

https://twitter.com/xsteenbrugge/status/1445327569847521281

"𝒎𝒚 𝒉𝒆𝒂𝒅 𝒊𝒔 𝒇𝒖𝒍𝒍 𝒐𝒇 𝒏𝒐𝒊𝒔𝒆"

"Inception"