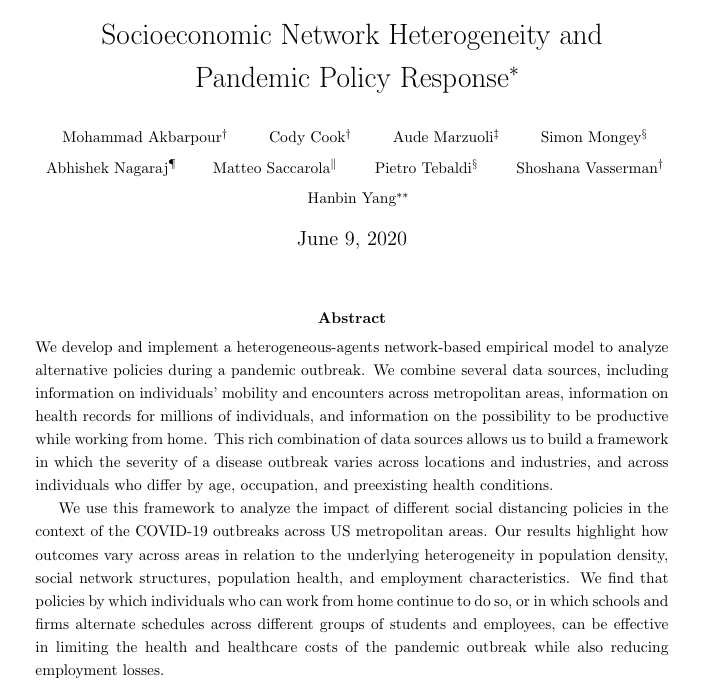

Hello #econtwitter! The wonderful @SNageebAli + Greg Lewis + I have just posted our working paper.

scholar.harvard.edu/files/vasserma…

We would love your comments. Thread 👇

Motivation:

Policy debates re privacy on the internet often stress these trade-offs:

1) Firms getting user data -> better matches + service to larger market 👍

2) But lack of privacy is icky 👎

3) And facilitates "too much" price discrimination 👎

See obamawhitehouse.archives.gov/sites/default/…

Lots of responses:

Policy -- GDPR, CCPA, FCC "best practices", etc

Industry -- "privacy oriented" products by Apple, Mozilla, etc.

Academics -- budding theoretical literature on privacy + value of data; small but growing empirical lit (eg this from NBERSI

https://twitter.com/shoshievass/status/1151862980277129221?s=20)

Our question:

Can personalized pricing be beneficial when consumers have control over whether + what info to disclose to a seller?

- Product is not personalized (no match value)

- Information is "verifiable" (can't outright lie but can obfuscate)

- Strategies are ex-interim IC

The Gist:

- Personalized pricing+consumer control over disclosure is beneficial (even w/o match value)

-> Can be used to weakly improve _every_ consumer's lot

- Disclosure can help when facing a monopolist; it can help even more when there is competition

- Message tech matters

A simple example:

- Monopolist sells a good to a consumer w value v~U[0,1]

- Consumer observes v and sends a message based on available tech:

--> "Simple evidence": Either send "my type is 'v'" or send nothing.

--> "Rich evidence": "v is in the interval [a,b]"

Tl;dr: Simple evidence doesn't help any type of consumer.

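Intuition: Type v can always disclose {v}, get price v & payoff 0. If no one discloses, the profit maxing price is 1/2. If some disclose, everyone who stays silent gets some optimal price p. Every type v>p then strictly prefers silence (payoff v-p > 0), so the silent pool contains all of (p,1] -> p >= 1/2. No type ends up paying less than without disclosure.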
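
The last step (why p >= 1/2) can be spelled out as a short worked argument. This is a sketch for the uniform example only; the symbols S (the set of silent types), q (a candidate deviation price) and π (the seller's profit from the silent pool) are notation introduced here, not taken from the paper.

```latex
\begin{align*}
&\text{Silent set } S,\ \text{price after silence } p:\qquad v > p \;\Rightarrow\; v \in S.\\
&\pi(q) \;=\; q \cdot \Pr\left(v \in S,\ v \ge q\right) \;=\; q\,(1-q) \qquad \text{for all } q \ge p.\\
&p < \tfrac12 \;\Rightarrow\; \pi\!\left(\tfrac12\right) = \tfrac14 \;>\; p\,(1-p) = \pi(p),
  \ \text{contradicting optimality of } p.\\
&\text{Hence } p \ge \tfrac12 .
\end{align*}
```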

But we can do better. With "rich evidence", consumers can partition themselves into segments [a_{k+1}, a_{k}] s.t. every type in a segment is served, at a price equal to the lowest value in her segment. For v~U[0,1], the optimal partition (for consumers) has a nice geometry, a "Zeno's partition": [1/2,1], [1/4,1/2],...

Why is this an equilibrium? Each type is getting their lowest achievable price on-path. Finer (feasible) messages can only induce a higher equilibrium price.

Why is this optimal? We're maximizing the avg pooling discount: maximally big pools for the highest types.
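
A quick numerical check of the uniform example, as a sketch under stated assumptions: zero production cost, and the seller best-responds to each segment separately. The function names are hypothetical. It confirms that the seller prices each Zeno segment at its lower endpoint, and compares expected consumer surplus with the no-disclosure benchmark.

```python
from fractions import Fraction


def optimal_price_uniform(a, b):
    """Seller's profit-maximizing price against v ~ U[a, b] (zero cost assumed)."""
    # Profit is proportional to p * (b - p) for p in [a, b], which peaks at b / 2,
    # so the constrained optimum is max(a, b / 2).
    return max(a, b / 2)


def zeno_segments(n):
    """First n segments [2^-(k+1), 2^-k] of the partition, from the top down."""
    return [(Fraction(1, 2 ** (k + 1)), Fraction(1, 2 ** k)) for k in range(n)]


def consumer_surplus(segments):
    """Expected surplus of v ~ U[0, 1] when the seller prices each segment optimally."""
    total = Fraction(0)
    for a, b in segments:
        p = optimal_price_uniform(a, b)
        lo = max(a, p)  # types below the price do not buy
        # Integral of (v - p) dv over [lo, b] under the U[0, 1] density.
        total += (b - lo) * ((b + lo) / 2 - p)
    return total


no_disclosure = consumer_surplus([(Fraction(0), Fraction(1))])
zeno = consumer_surplus(zeno_segments(30))
print(float(no_disclosure), float(zeno))
```

With these assumptions the no-disclosure surplus is exactly 1/8, while the Zeno partition's surplus converges to 1/6 as more segments are included.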

Is this general? Yes and no.

Obs 1 is general: simple info can't help.

Obs 2 is more complicated: We generalize Zeno by defining a "greedy" partition: starting from the top, construct a maximal segment s.t. the seller's optimal price still (just barely) serves everyone in it, then recurse on the types below.

--> Pareto improving? 👍 Optimal?

Optimality depends on the distribution of types. Intuitively, we need to ensure that greedily constructing segments really minimizes the avg price across all types. We can find counter-examples (👇) but finding necessary + sufficient cond'ns has proven hard. F(v) = v^k works tho.
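
A minimal sketch of the greedy construction for the one family named above, F(v) = v^k on [0,1]. The closed-form cutoff is my own first-order-condition calculation rather than something quoted from the paper, and `greedy_cutoffs` / `best_price` are hypothetical helper names; zero cost is assumed.

```python
def greedy_cutoffs(k, n):
    """Cutoffs a_0 = 1 > a_1 > ... > a_n of the greedy partition for F(v) = v**k."""
    # Against types in [a, b], a price p >= a earns p * (b**k - p**k) (up to scale).
    # This is concave in p with its peak at b / (k + 1)**(1 / k), so the lowest a
    # at which the seller still prices at the bottom of the segment is that peak.
    ratio = (k + 1) ** (-1.0 / k)
    return [ratio ** j for j in range(n + 1)]


def best_price(a, b, k, grid=10_000):
    """Brute-force check: the seller's best price on a grid over [a, b]."""
    prices = [a + (b - a) * i / grid for i in range(grid + 1)]
    return max(prices, key=lambda p: p * (b ** k - p ** k))


cutoffs = greedy_cutoffs(2, 4)
for b, a in zip(cutoffs, cutoffs[1:]):
    # In each greedy segment the grid search should pick the lower endpoint.
    print(round(a, 4), round(b, 4), round(best_price(a, b, 2), 4))
```

For k = 1 the ratio is 1/2, which recovers the uniform Zeno cutoffs 1, 1/2, 1/4, ...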

What about competition?

We consider a model of horizontal Bertrand competition: consumers have location type l in [-1,1] + pay a linear transportation cost to buy from firms at -1 & 1

Same kinds of messages as before, "simple" & "rich". But now can send diff msgs to each firm

What changes?

(1) Any disclosure can be helpful. If every type reveals herself, the firms play a full-info Bertrand pricing game --> farther firm charges 0; nearer firm charges the value of not traveling to the farther firm. Absent disclosure, the firms don't compete as hard
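
The full-disclosure benchmark in (1) is easy to write down. A minimal sketch, assuming zero marginal cost, a transport cost of t per unit of distance, and a consumer whose value for the good is high enough that she always buys; the function name and the parameter t are introduced here for illustration.

```python
def full_disclosure_prices(l, t=1.0):
    """Prices (firm at -1, firm at +1) when the consumer's location l is revealed."""
    if not -1.0 <= l <= 1.0:
        raise ValueError("location must lie in [-1, 1]")
    # Extra travel cost of going to the farther firm: t*(1 + |l|) - t*(1 - |l|).
    advantage = 2.0 * t * abs(l)
    if l >= 0:
        return 0.0, advantage  # the firm at +1 is nearer; the firm at -1 charges 0
    return advantage, 0.0      # the firm at -1 is nearer; the firm at +1 charges 0


print(full_disclosure_prices(0.25))   # consumer slightly right of center
print(full_disclosure_prices(-0.9))   # consumer close to the firm at -1
```

A consumer near the center faces a near-zero price from both firms, while one near an endpoint pays close to 2t to the nearer firm. That is why extremal types have the most to gain from concealing.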

(2) Simple Evidence can be helpful. How?

Disclose to the farther firm so it bids down to a price of 0. With the nearer firm, the aim is to moderate its monopoly power: disclose if "hard to get" (e.g. near the center); conceal if extremal (so that reaching the farther firm is very costly).

(3) Rich Evidence disclosure does even better.

-> Construct a "Zeno's partition" on each side of 0.

--> Each segment induces a price of 0 from the farther firm

--> Nearer firm sets price to bring the boundary (farthest) type of each segment to indifference wrt the farther firm

Recap: What have we found?

--> Giving consumers control over disclosure can let them harness price discrimination to their benefit

--> The technology of disclosure is important: track/no track may not help when facing a monopolist

--> Note we have used only disclosure; no BBM-style information design (Bergemann, Brooks & Morris)

In fact, max consumer surplus is bounded away from the BBM max (at least for uniform).

Many more thoughts, but I'll leave it here for now. Once again, paper here: scholar.harvard.edu/files/vasserma…
