@moo_hax shows that the #MachineLearning system powering @proofpoint email protection (versions up to 2019-09-08) is vulnerable to model stealing & evasion attacks.

nvd.nist.gov/vuln/detail/CV…

Adversarial ML is now an #infosec problem. Wow.

THREAD 1/

And all hot on the heels of @CERT_Division's first vuln note on the topic, a week back. Wow again.

Trailblazing, @moo_hax! 2/

Here is their tool for you to play with github.com/moohax/Proof-P…

3/

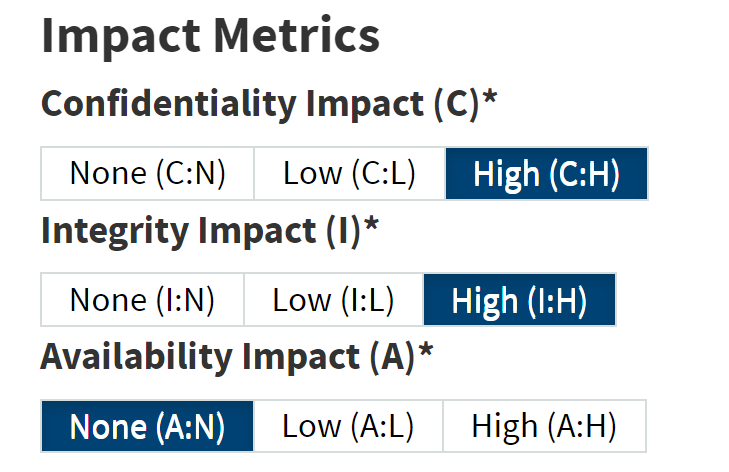

@NISTcyber assigned it a 9.1, which puts it in the CRITICAL range. (Yes, I know CVSS scores have their own problems, but we'll come back to that later.)

4/

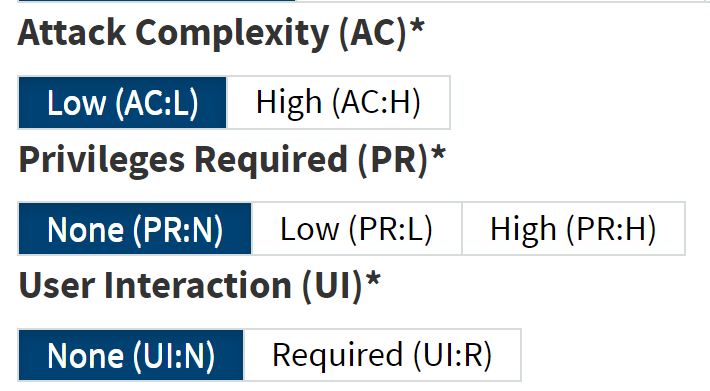

@moo_hax's attack is:

EASY to mount (low attack complexity)

+ NO PRIVILEGES required (you are basically querying and observing the response)

+ NO User interaction is required

O-M-G! 🔥🔥🔥 5/

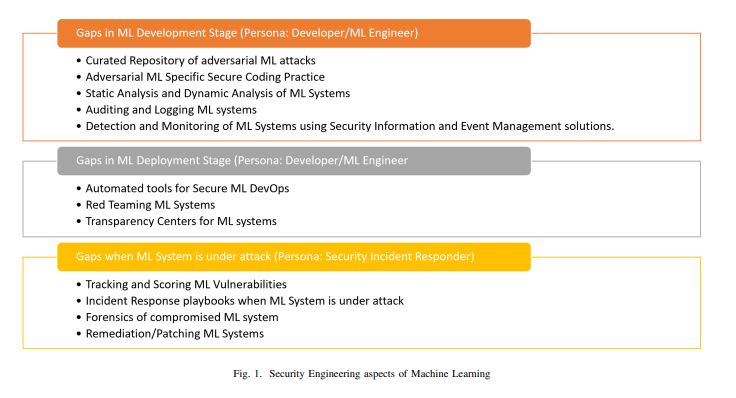

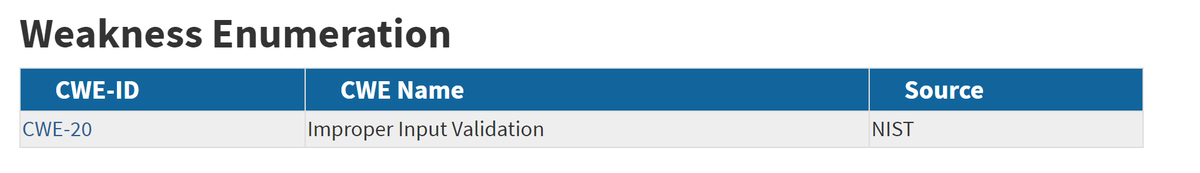
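For anyone wondering how those metrics add up to a 9.1, here is a rough sketch of the CVSS v3.1 base score arithmetic. It is not NVD's tooling. The thread only states low attack complexity, no privileges and no user interaction; the network attack vector, unchanged scope, and High/High/None impact values below are assumptions, chosen because that combination yields 9.1. The function name is hypothetical.

```python
import math

# Metric weights from the CVSS v3.1 specification (scope unchanged only).
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.20}   # attack vector
AC = {"L": 0.77, "H": 0.44}                         # attack complexity
PR = {"N": 0.85, "L": 0.62, "H": 0.27}              # privileges required
UI = {"N": 0.85, "R": 0.62}                         # user interaction
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}              # C / I / A impact


def roundup(x: float) -> float:
    """Round up to one decimal place, as defined in the v3.1 spec."""
    i = round(x * 100000)
    if i % 10000 == 0:
        return i / 100000.0
    return (math.floor(i / 10000) + 1) / 10.0


def base_score_scope_unchanged(av, ac, pr, ui, c, i, a) -> float:
    """Hypothetical helper: CVSS v3.1 base score when scope is unchanged."""
    iss = 1 - (1 - CIA[c]) * (1 - CIA[i]) * (1 - CIA[a])
    impact = 6.42 * iss
    exploitability = 8.22 * AV[av] * AC[ac] * PR[pr] * UI[ui]
    if impact <= 0:
        return 0.0
    return roundup(min(impact + exploitability, 10))


# From the thread: AC low, PR none, UI none.
# Assumed for illustration: AV network, C high, I high, A none.
score = base_score_scope_unchanged("N", "L", "N", "N", "H", "H", "N")
print(score)
```

Swap "L" for "H" in the attack complexity slot and the score drops noticeably, which is why "easy to mount" carries so much weight here.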

To me this shows that @NISTcyber (through no fault of theirs) is trying to force a new vuln paradigm (like adversarial ML) into the outmoded traditional vuln paradigm 7/

8/

That's why @jsnover's remark on needing @MITREattack for ML systems is so on point

cc: @stromcoffee 9/