TL;DR:

- 25 out of 28 organizations we interviewed noted that they don't have the right tools in place to secure their ML assets

- Applying the SDL to industry-grade ML models leaves lots of open questions

arxiv.org/abs/2002.05646 1/

There is an explosion of adversarial ML research (-1 50 papers in the last 2 years), and the field has been around since 2004 (see @biggiobattista's paper sciencedirect.com/science/articl… )

We ask a broader question: what does adversarial ML mean to ML and security engineers in industry? 2/

We spoke to ML engineers and security analysts in these organizations to learn how they approach adversarial ML: 3/

1) What process does the org currently follow to secure ML systems?

2) What kind of attack would impact their org most?

3) If their ML system was under attack, how would the sec analyst approach it? 4/

a) Traditional security is more important

As one security analyst put it, "Our top threat vector is spearphishing and malware on the box. This [adversarial ML] looks futuristic."

Large organizations and governments are spearheading change. SMBs, not so much. 5/

…our problem, and it would be worrisome if someone can reverse engineer it"

No kidding. 7/

There are a lot of insights into how orgs secure their "vanilla" software today. For instance, 122 orgs follow some form of the Security Development Lifecycle (SDL) to design, develop, deploy and safeguard vanilla software (see @Lipner safecode.org/wp-content/upl…)

8/

9/

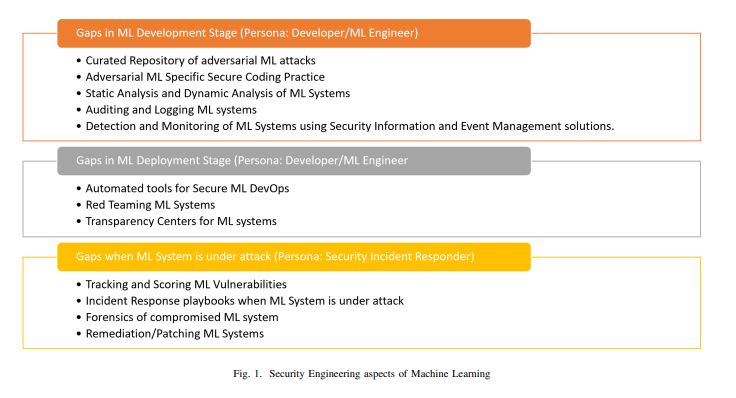

We took the Secure Development Framework and looked for gaps. 10/

11/

What are the artifacts that should be analyzed when an ML system is under attack? ML attack? Model file? The queries that were scored?

Training data? Architecture? Telemetry? Hardware? How should these artifacts be collected? 12/

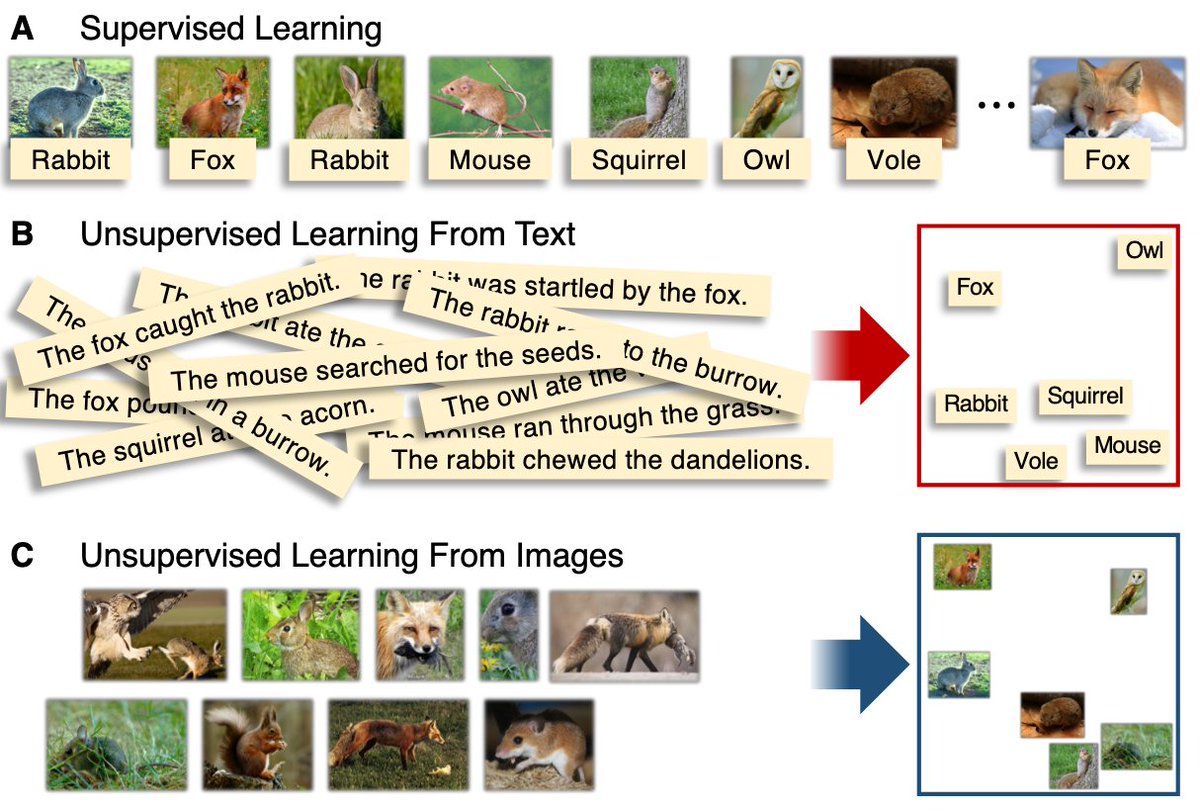

…learning vs. supervised learning) and ML environment (running on host vs. cloud vs. edge)? 13/

But that same tech controls everything, from your finances to your healthcare to the videos you watch on Netflix. 14/

This sums it up well. For me, we are deploying ML systems like it is 2020 but securing them like it is 1910.

- @JohnLaTwC is the root cause of SDL in the industry

- Magnus, @MSwannMSFT and @goertzel are security gurus

- @AndiC1122 and @sharonxia get it out of ya.