The kind folk at cyber-itl.org shared a new @zoom_us security issue with me.

I want to take this opportunity to describe:

The issue

How Zoom et al should fix it

How purchasers should identify it before corporate purchasing

What individuals should do

1/

I’m choosing to share this info because Zoom has been very good in responding to security researchers and security problems.

It is apparent they care now... but how bad is their security deficit?

Let’s quantify

2/

If you use Zoom at home for personal reasons to remain connected to loved ones during the pandemic - that’s very important.

You should probably continue using the product.

Hopefully Zoom will update and improve.

3/

@m0thran did the analysis I’m about to share. (You should follow him)

Let’s quantify the issue and then show what to do about it.

4/

The Linux Zoom binary lacks so many basic security mitigations that it would not be allowed as a target in many Capture The Flag contests.

Linux Zoom would be considered too easy to exploit!

How do we know?

5/

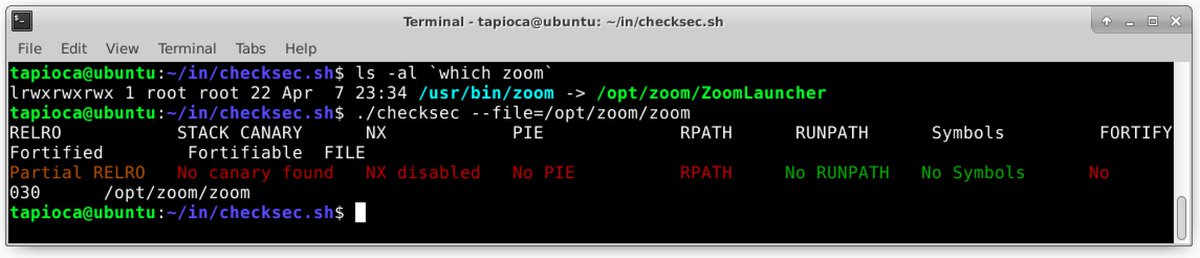

The Linux checksec shell script works fine for this.

Notice the binary lacks DEP, ASLR, stack canaries, source fortification, and read-only section ordering (RELRO).

[ @wdormann image]

6/

Disabling all of them is impressive.

Perhaps it helps that Zoom is using a development environment that is 5 years out of date (2015).

7/

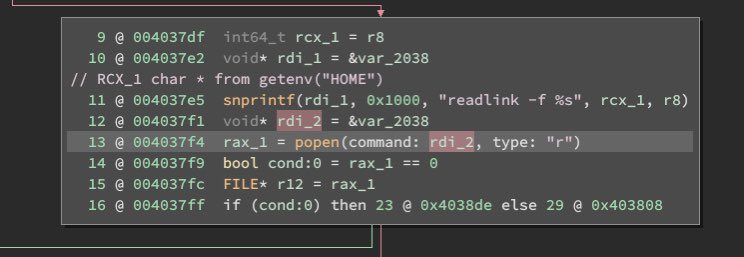

Here’s an example of grabbing an untrusted environment variable and handing it to the insecure popen(3) function for execution [ @m0thran ]

There are plenty of secure-coding-101 flaws here.
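To make the flaw pattern concrete, here is a minimal Python analogue (the original is C and popen(3), so this is a sketch of the same idea, not Zoom's code). The environment variable name `OPENER` and the allowlist are hypothetical. The point: never hand an environment value to a shell; validate it and pass an argument list instead.

```python
import os
import subprocess

# Hypothetical allowlist of helper programs we are willing to launch.
ALLOWED_TOOLS = {"xdg-open", "gio"}


def build_command(env, url):
    """Return an argv list for opening `url`, refusing untrusted tool names."""
    # OPENER is a made-up variable name used only for this illustration.
    tool = env.get("OPENER", "xdg-open")
    if tool not in ALLOWED_TOOLS:
        raise ValueError(f"refusing untrusted helper: {tool!r}")
    return [tool, url]


def open_url(url):
    # An argv list with no shell means the value is never parsed as shell syntax.
    subprocess.run(build_command(os.environ, url), check=True)
```

The unsafe version would be something like `os.popen(os.environ["OPENER"] + " " + url)`, where anyone who controls the environment controls the command line.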

8/

The next best time is NOW!

So what can Zoom, and other companies, do to avoid these problems?

/9

For compiler/linker/loader security:

Create unit tests!

Add them to the build cycle!

No builds ship if basic safety mitigations are lacking, were removed, or are insufficient.

Heck, use checksec
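A build-time check like this does not need much. Below is a rough sketch, using only the Python standard library, that reads an ELF file's headers and reports three of the mitigations checksec looks at. It is an assumption-laden illustration: it handles only 64-bit little-endian ELF, the function name is made up, and it does not cover canaries or fortification (those need symbol table inspection, which checksec does for you).

```python
import struct

ET_DYN = 3                  # e_type of PIE executables (and shared objects)
PT_GNU_STACK = 0x6474E551   # program header describing stack permissions
PT_GNU_RELRO = 0x6474E552   # program header present when RELRO is enabled
PF_X = 0x1                  # "executable" permission bit in p_flags


def check_elf_mitigations(data: bytes) -> dict:
    """Report PIE, non-executable stack, and RELRO for a 64-bit LE ELF image."""
    if data[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    if data[4] != 2 or data[5] != 1:
        raise ValueError("sketch handles only 64-bit little-endian ELF")

    e_type = struct.unpack_from("<H", data, 16)[0]
    e_phoff = struct.unpack_from("<Q", data, 32)[0]
    e_phentsize, e_phnum = struct.unpack_from("<HH", data, 54)

    nx_stack = False  # no PT_GNU_STACK header is treated as "not protected"
    relro = False
    for i in range(e_phnum):
        p_type, p_flags = struct.unpack_from("<II", data, e_phoff + i * e_phentsize)
        if p_type == PT_GNU_STACK:
            nx_stack = not (p_flags & PF_X)
        elif p_type == PT_GNU_RELRO:
            relro = True

    return {"pie": e_type == ET_DYN, "nx_stack": nx_stack, "relro": relro}
```

In a build pipeline, a unit test could read the binary about to ship, call this function, and fail the build unless every value is `True`.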

/10

With up-to-date toolchains and safety measures enabled, put those unit tests in place.

Not doing this is irresponsible.

What about unsafe functions?

/11

Code is scanned during build and the build fails if unsafe function calls are found.

Basic development hygiene. Do it.
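A scan like that can start out very simple. Here is a minimal sketch, assuming a plain regex pass over C source is good enough for a first gate. The banned list is my own illustrative pick based on the categories in this thread (unbounded string copies, bad random, access(), popen), and the function name is hypothetical. A regex will also flag matches inside comments and strings, so a real setup would likely use a proper static analyzer.

```python
import re

# Illustrative banned list; a real one would come from a vetted source.
BANNED = ("strcpy", "strcat", "sprintf", "gets", "rand", "access", "popen")


def find_banned_calls(source: str, banned=BANNED):
    """Return (line_number, function_name) for each call to a banned function."""
    # \b keeps names like my_strcpy( or srand( from matching.
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, banned)) + r")\s*\(")
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in pattern.finditer(line):
            hits.append((lineno, match.group(1)))
    return hits
```

The build step would run this over every source file and exit non-zero if the returned list is not empty.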

/12

Pull the list of unsafe functions from MSFT (anything with a *_s replacement)

or GCC/Clang Source Fortification (anything with a *_chk replacement)

or any number of books on writing secure code (The Art of Software Security Assessment or CERT’s Secure Code)

/13

Check that weak functions were replaced (systemd is an example of failures here).

Better: avoid risky functions to begin with, if possible.

/14

cyber-itl.org/data/2016/11/2…

In fact I think Zoom will start turning this around due to the external focus.

Companies consuming/purchasing this specific software are at fault too.

What can they do?...

/15

If the software is missing basic security artifacts, it’s unlikely the company considers the security of their product.

Look for competitor products.

/16

Google has a large number of Linux client systems.

Exceedingly weak security on those systems that process data from outside of Google is a valid risk.

If so, valid call

/17

Plenty of free solutions for the basics:

github.com/trailofbits/wi…

trapkit.de/tools/checksec…

unix.com/man-page/osx/1…

/18

That’s not Linux.

It’s not an urgent threat model... yet. If folks rush to exploit this soft target that could change.

The Windows and Mac clients aren’t “quite” as bad. Likely because Zoom unintentionally used more recent dev toolchains.

/19

If these alternatives are your company’s product, where are your security unit tests?!?

If you are a corporate consumer you should include simple security metrics in your product evaluation and purchasing process.

20/20

The popen() example was meant to show how to identify poor security coding practices.

There are 453 calls to bad security funcs (unbounded string copies, bad random, access(), ...)

6316 to risky funcs like popen

Feel free to choose a more exploitable example :)