Our prospective replication study released!

5 years: 16 novel discoveries get round-robin replication.

Preregistration, large samples, transparency of materials.

Replication effect sizes 97% the size of confirmatory tests!

psyarxiv.com/n2a9x

Lead: @JProtzko 1/

When teams made a new discovery, they submitted it to a prereg’d confirmatory test (orange).

Confirmatory tests subjected to 4 replications (Ns ~ 1500 each)

Original team wrote full methods section. The three other labs conducted independent replications (green) and the original team ran a self-replication (blue).

Based on confirmatory effect sizes and replication sample sizes, we’d expect 80% successful replications (p<.05). We observed 86%.

Exceeding the replication rate expected from power is surely due to chance. But the outcome clearly indicates that high replicability is achievable.
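
A rough sketch of how an expected replication rate like the 80% above can be derived: compute each replication's power from the confirmatory effect size and the replication N, then average across findings. The function names, the normal approximation, the two-group design, and the effect sizes below are illustrative assumptions, not the paper's data or analysis code.

```python
from statistics import NormalDist


def replication_power(d, n_total, alpha=0.05):
    """Approximate power of a two-sided, two-group test (normal approximation).

    d       : standardized effect size (Cohen's d) from the confirmatory test
    n_total : total replication sample size, assumed split into two equal groups
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # With n1 = n2 = n_total / 2, the standard error of d is about sqrt(4 / n_total)
    ncp = abs(d) * (n_total / 4) ** 0.5
    # Probability of landing in either rejection region
    return (1 - z.cdf(z_crit - ncp)) + z.cdf(-z_crit - ncp)


def expected_replication_rate(effect_sizes, n_total, alpha=0.05):
    """Average power across findings = expected share of p < alpha replications."""
    powers = [replication_power(d, n_total, alpha) for d in effect_sizes]
    return sum(powers) / len(powers)


# Hypothetical effect sizes, for illustration only (not values from the study)
hypothetical_d = [0.05, 0.10, 0.20, 0.30]
print(f"{expected_replication_rate(hypothetical_d, 1500):.2f}")
```

Small confirmatory effects pull the average down even at N ~ 1500, which is why the expected rate sits below 100% when every finding is real.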

Another way to look at the data is comparing replication effect sizes (3 independent=blue; self=orange) to the confirmatory test effect size (0.0).

We observe little systematic variation: self & independent replications were very similar to confirmatory outcomes on average.

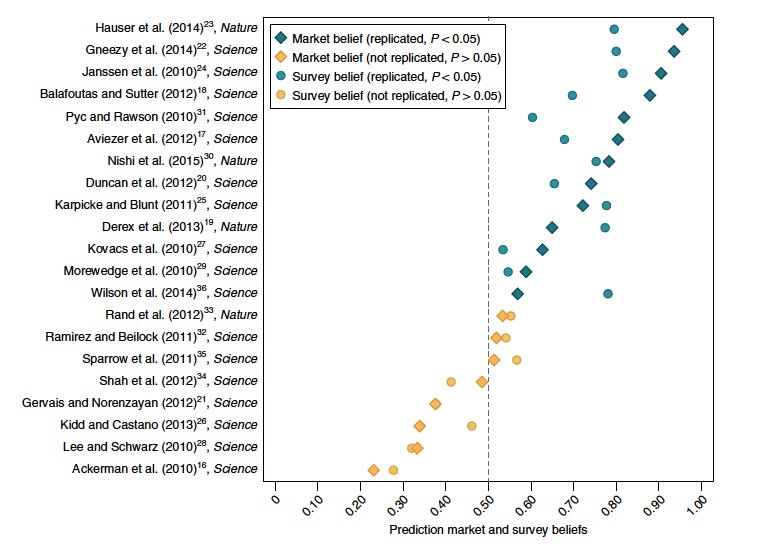

We also tested whether the discoveries were trivial or highly predictable, perhaps making them “easy” to replicate. We did not observe this. A survey of researchers showed high heterogeneity in predictions, indicating that these novel findings did not have high prior odds.

This was the work of four labs, led by PIs @JonathanSchool6 (UCSB), Leif Nelson (Berkeley), Jon Krosnick (Stanford), and me (UVA), and many lab collaborators like @CharlieEbersole @jordanaxt @NickButtrick in mine.

This isn’t a randomized trial comparing high rigor versus not. We designed the study to evaluate decline effects, but we did not observe them. To us, the most plausible reason we didn’t observe decline is some combination of the rigor-enhancing methods we adopted.

This is an existence proof that high replicability is achievable in social-behavioral sciences with novel discoveries. Next step is to unpack the causal role of methodological interventions on improving replicability and explore generalizability across topics and methodologies.

Here is one more visualization of the data showing lack of systematic decline from confirmation study (0) through the replication studies (1-4) for each of the 16 novel findings coming from the labs over the last 5 years.

Congrats to @JProtzko & team for completing this beast!

If you prefer to receive your papers in video form, here's a presentation by @JonathanSchool6 of the preliminary results at last year's Metascience 2019 meeting. metascience.com/events/metasci…
