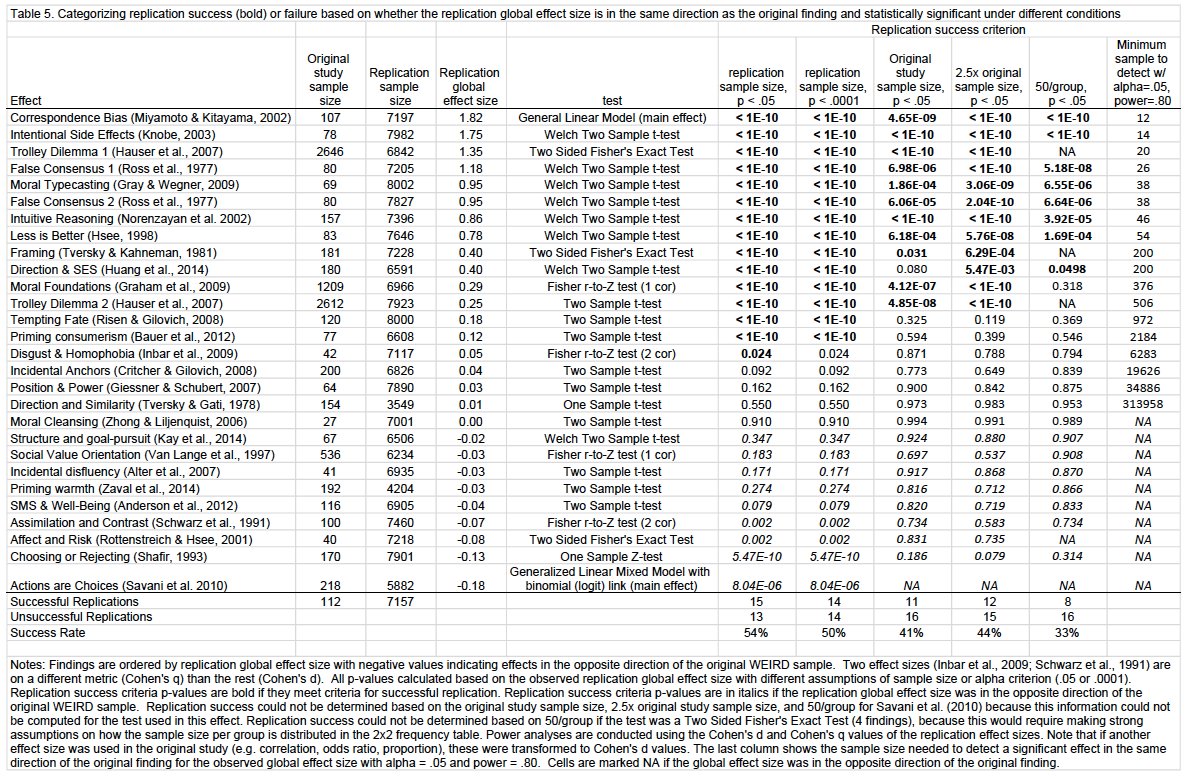

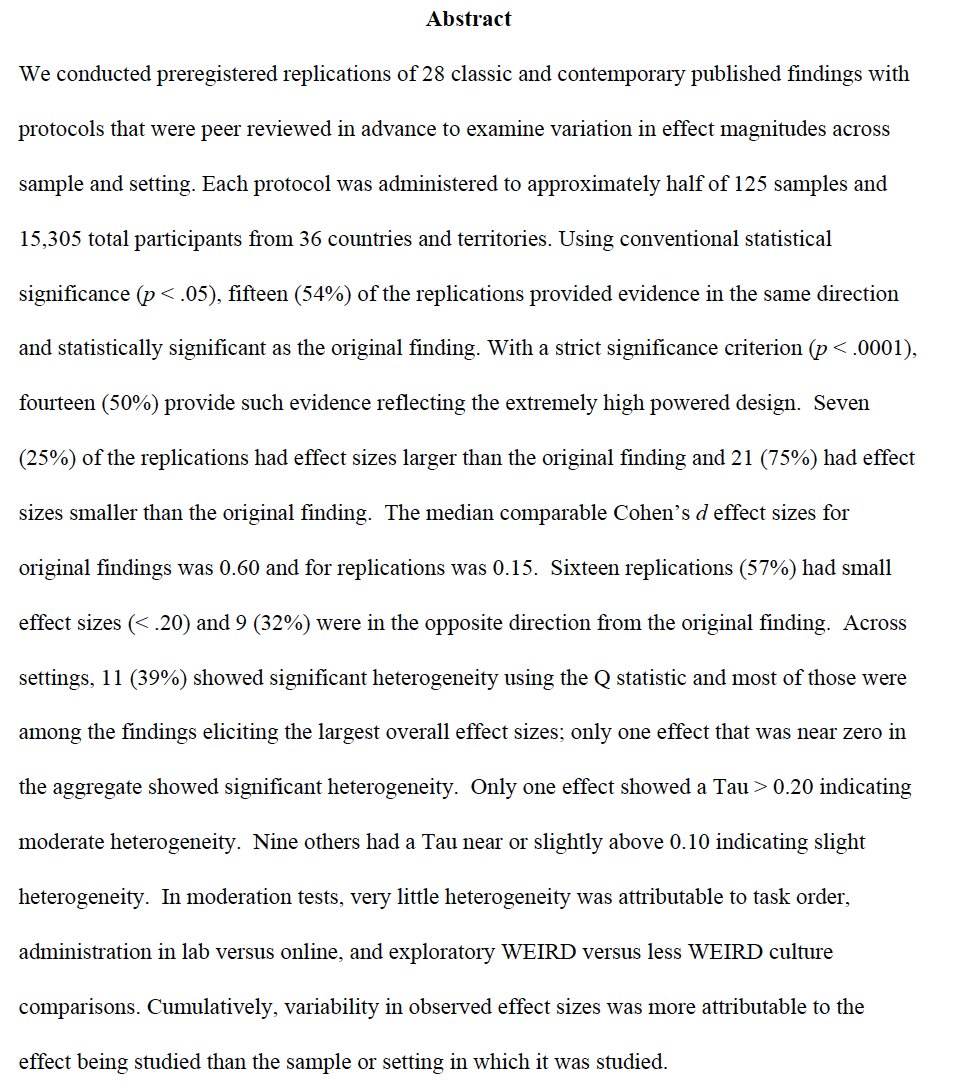

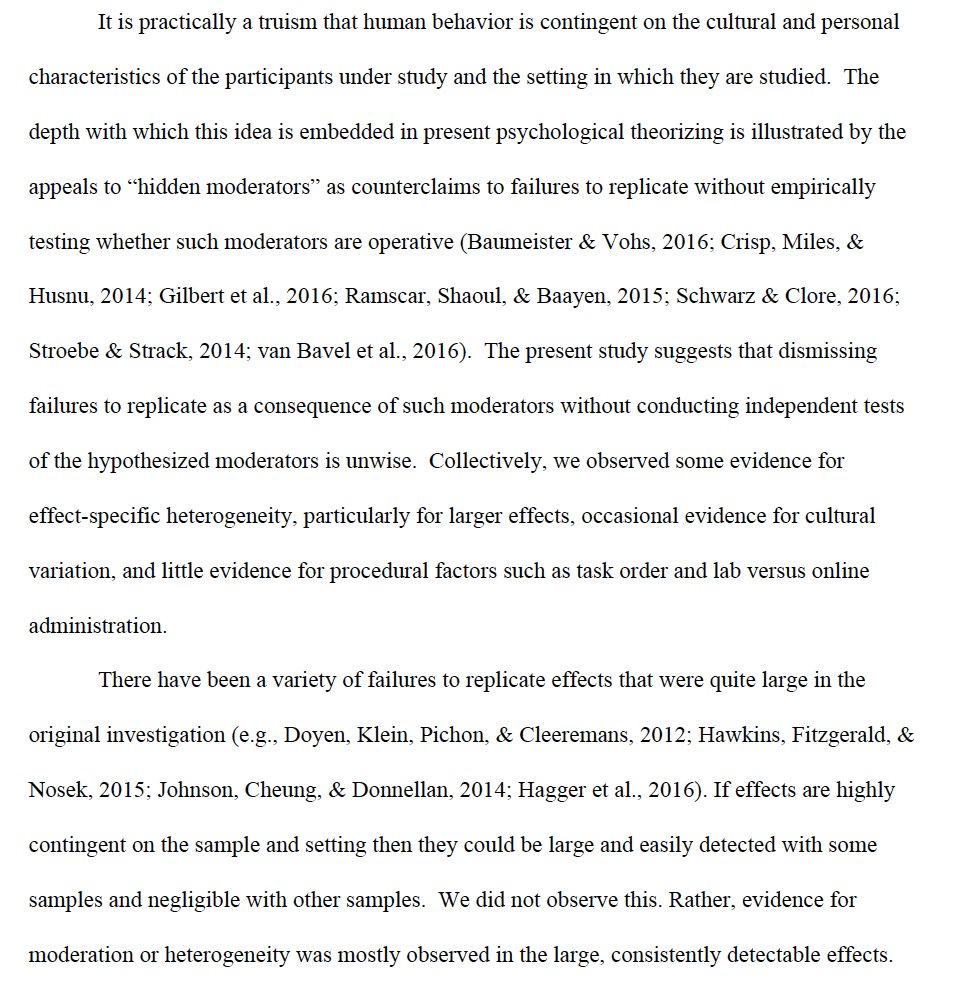

Many Labs 2 successfully replicated 14 of 28 findings. psyarxiv.com/9654g

ML2 may be more important than Reproducibility Project: Psychology. Here’s why...

@michevianello @fredhasselman @raklein3

ML1: econtent.hogrefe.com/doi/full/10.10…

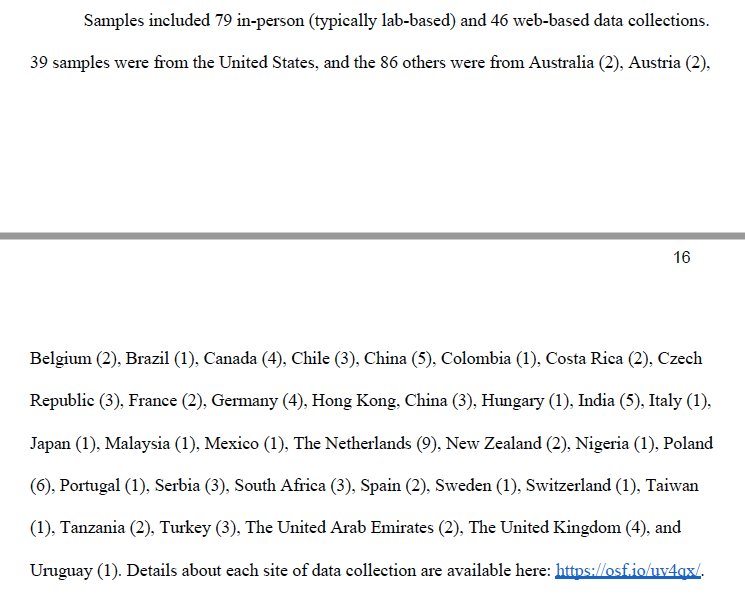

ML2: psyarxiv.com/9654g

ML3: sciencedirect.com/science/articl…

SSRP: nature.com/articles/s4156…

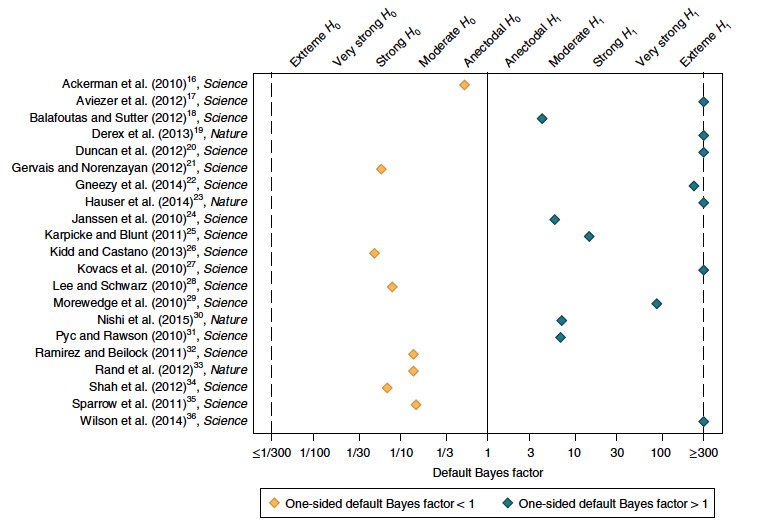

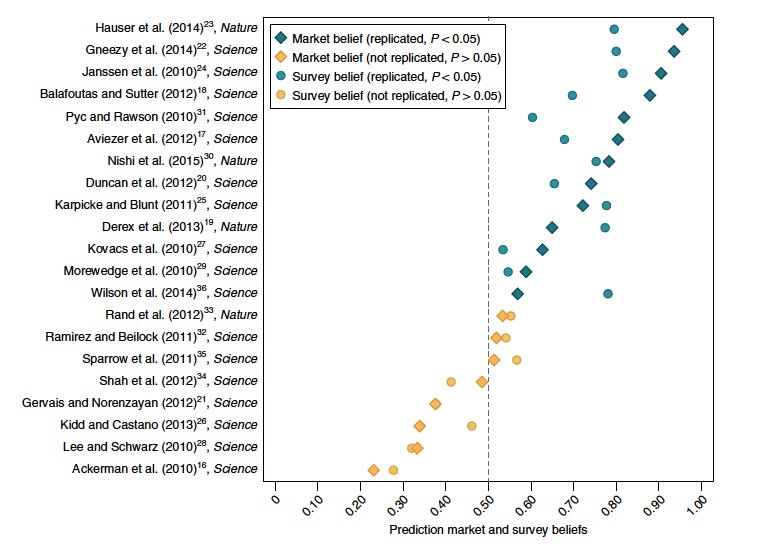

EERP: science.sciencemag.org/content/351/62…

RPP: science.sciencemag.org/content/349/62…

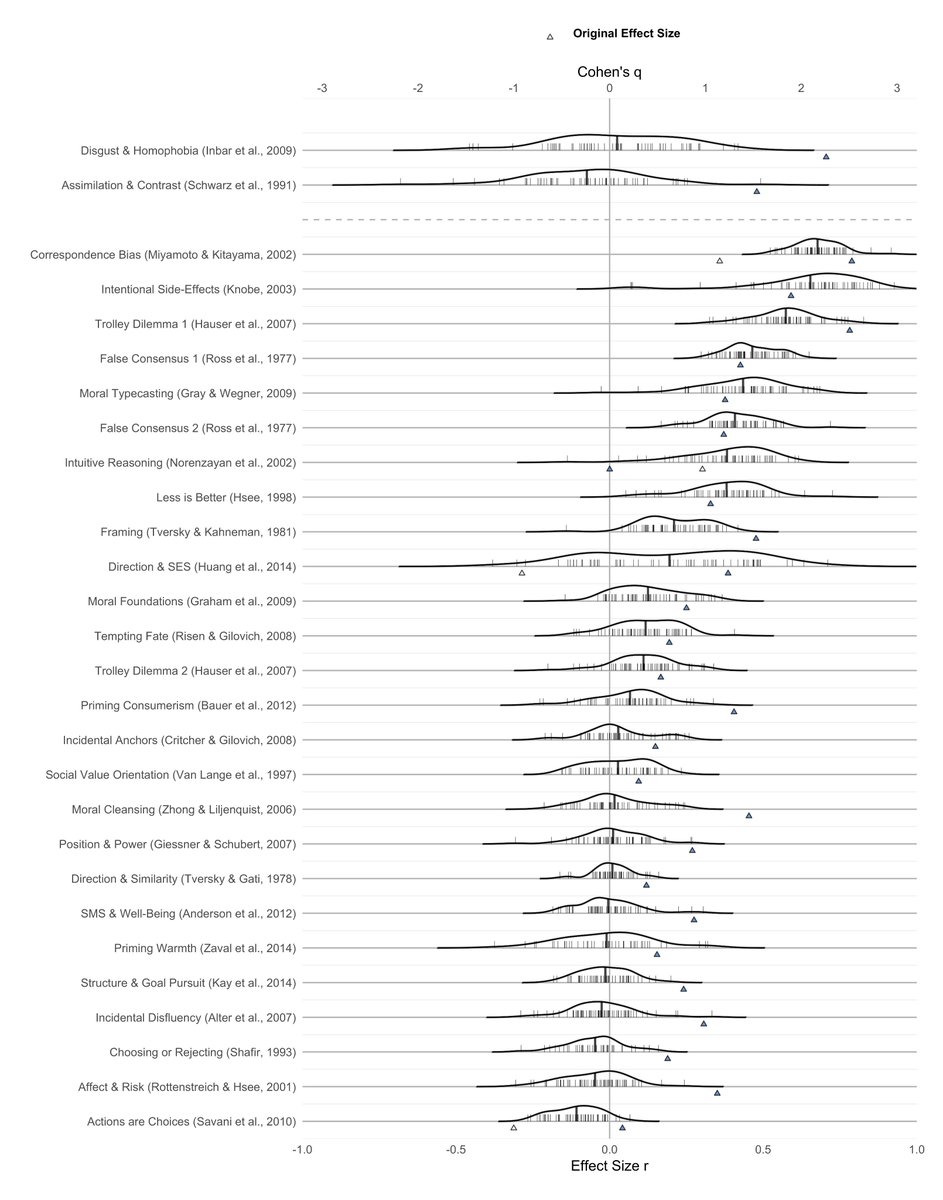

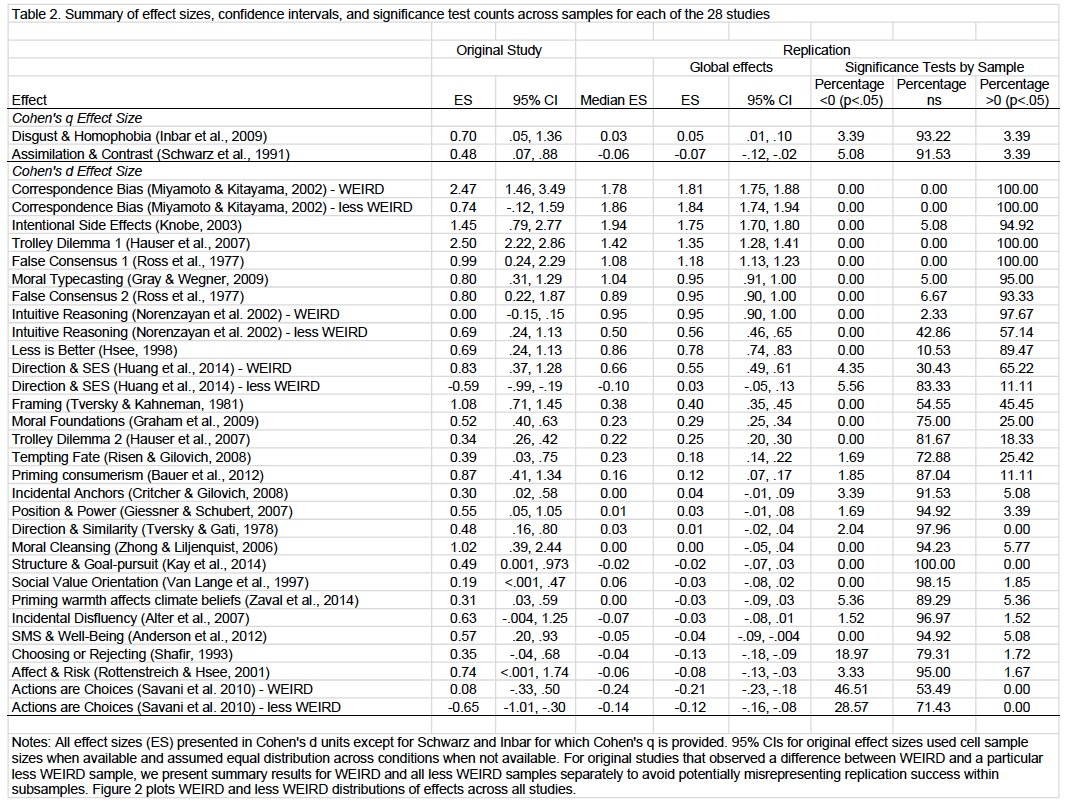

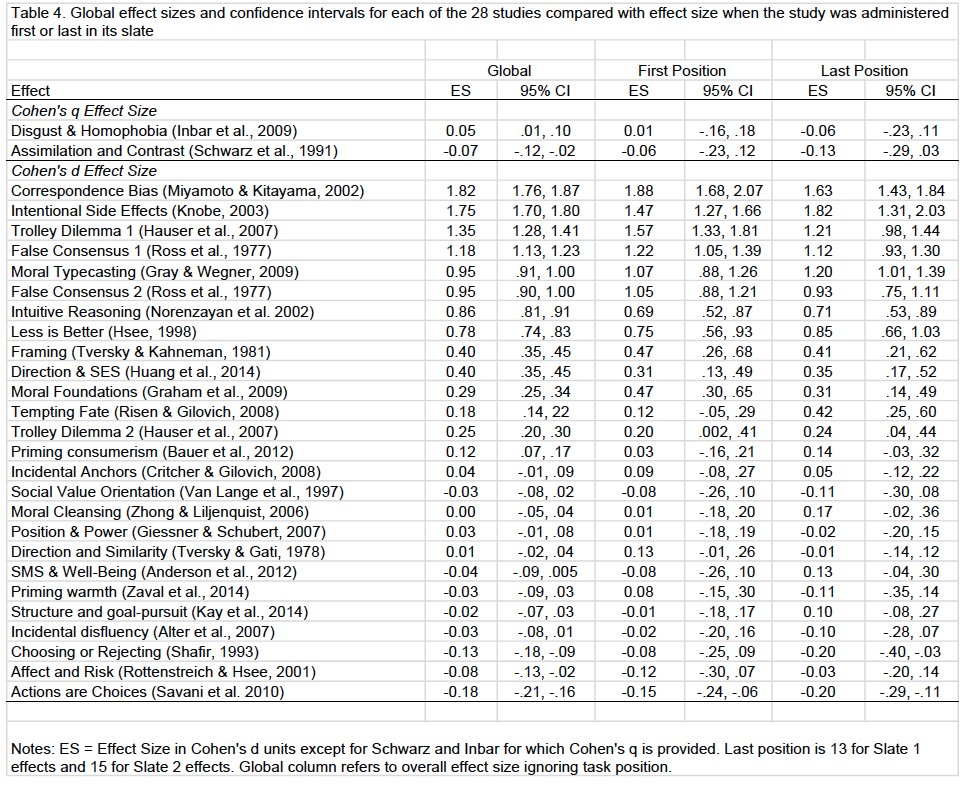

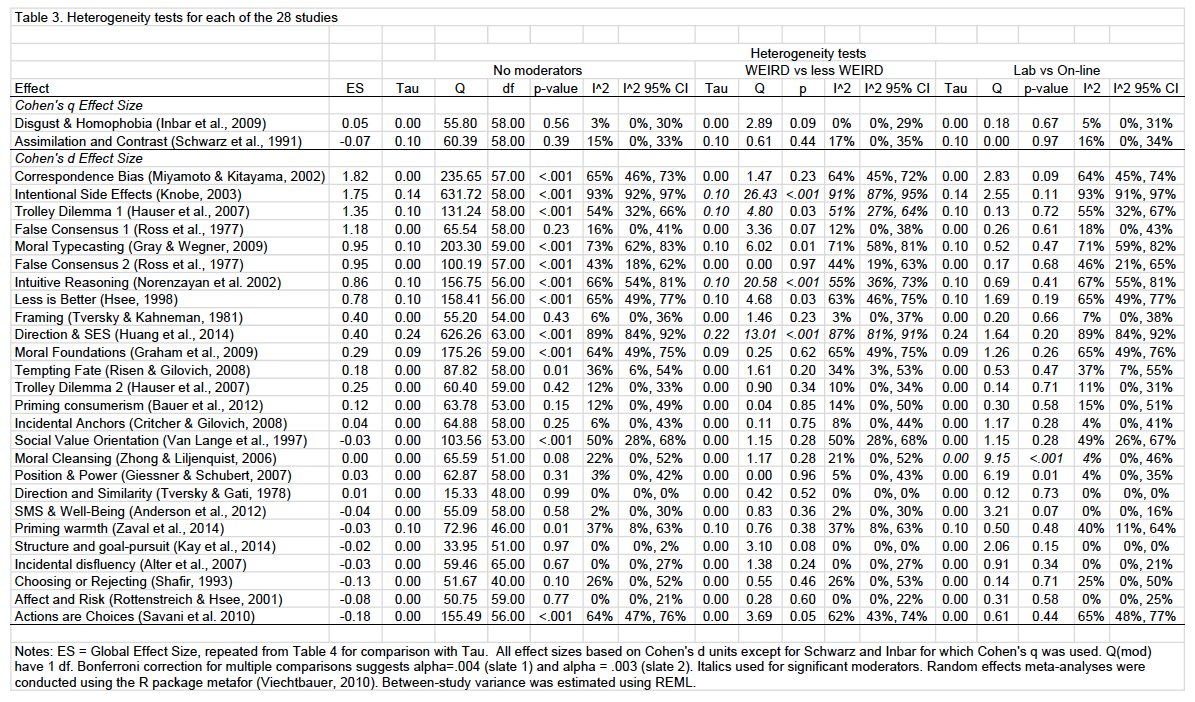

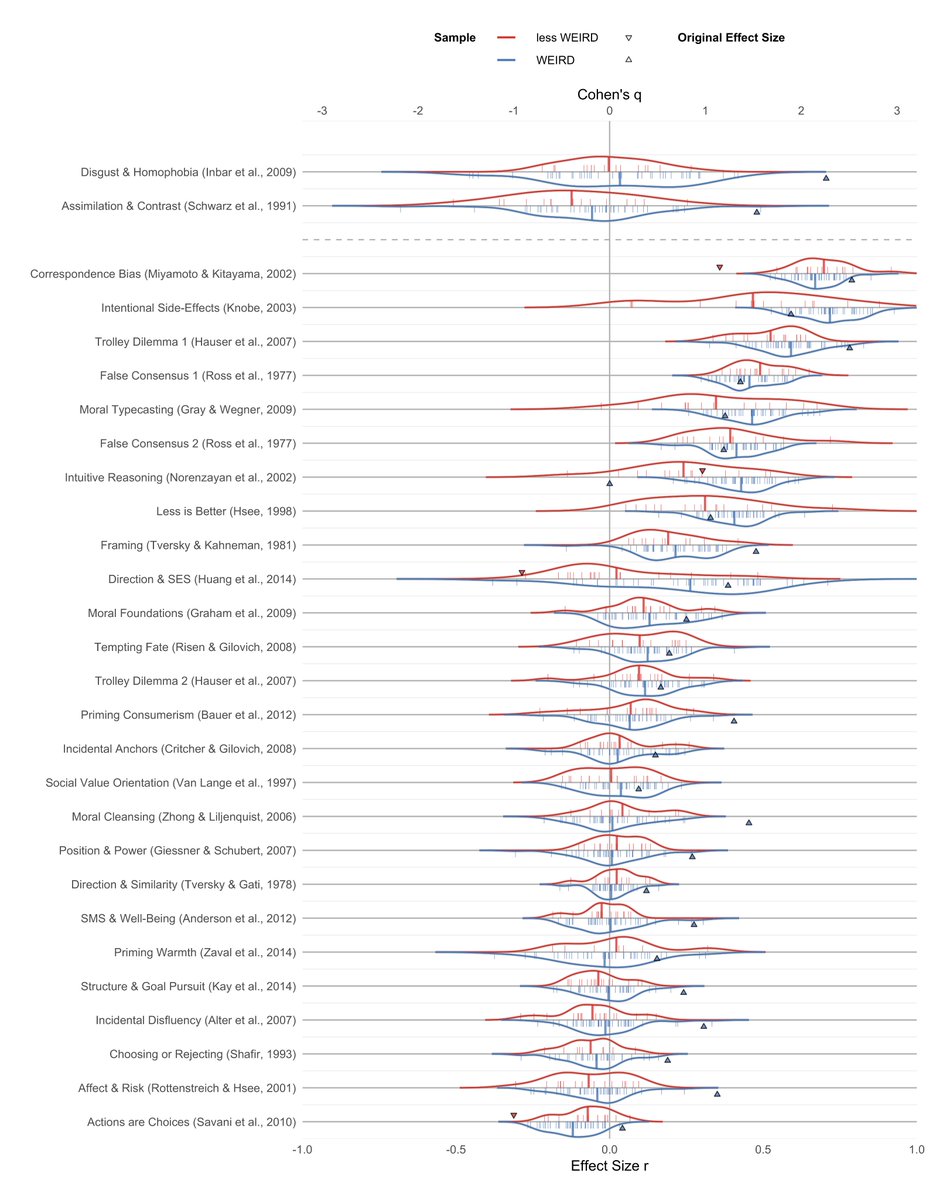

I find this Figure S2 to be particularly stunning. psyarxiv.com/9654g

ML2: psyarxiv.com/9654g

RPP: pnas.org/content/112/50…

EERP: science.sciencemag.org/content/351/62…

SSRP: nature.com/articles/s4156…

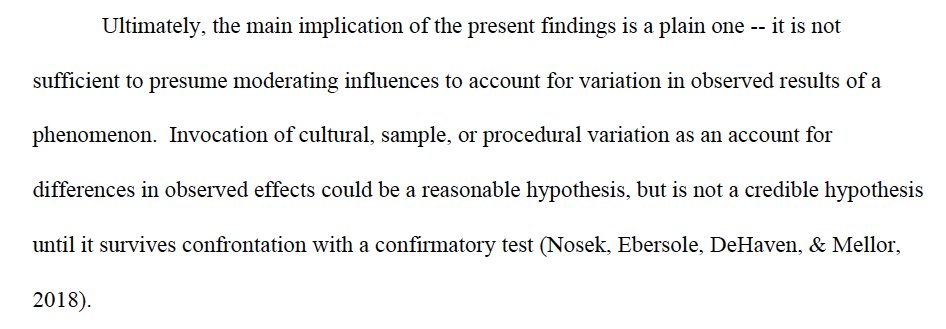

Test moderators: do exploratory analysis on a subset, then apply the model to a holdout sample to maximize the diagnosticity of statistical inferences. psyarxiv.com/9654g
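The explore-then-confirm idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the ML2 analysis plan: it assumes site-level rows with an `effect` size and hypothetical binary moderator columns, a 50/50 random split, and a permutation test on the holdout. The candidate moderator is picked freely on the exploratory half, and only that one is tested on data it has never seen.

```python
import random
import statistics

def split_sample(rows, explore_frac=0.5, seed=0):
    """Randomly partition rows into an exploratory subset and a holdout subset."""
    rng = random.Random(seed)
    shuffled = list(rows)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * explore_frac)
    return shuffled[:cut], shuffled[cut:]

def moderation_effect(rows, moderator):
    """Mean effect where the (hypothetical) moderator is True minus where it is False."""
    high = [r["effect"] for r in rows if r[moderator]]
    low = [r["effect"] for r in rows if not r[moderator]]
    if not high or not low:
        return 0.0  # moderator does not vary in this subset
    return statistics.mean(high) - statistics.mean(low)

def permutation_p(rows, moderator, n_perm=2000, seed=1):
    """Two-sided permutation p-value for the moderation effect within `rows`."""
    rng = random.Random(seed)
    observed = abs(moderation_effect(rows, moderator))
    labels = [r[moderator] for r in rows]
    effects = [r["effect"] for r in rows]
    if all(labels) or not any(labels):
        return 1.0  # no variation in the moderator, nothing to test
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(labels)  # break any link between moderator and effect
        high = [e for e, lab in zip(effects, labels) if lab]
        low = [e for e, lab in zip(effects, labels) if not lab]
        if abs(statistics.mean(high) - statistics.mean(low)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)

def explore_then_confirm(rows, moderators, seed=0):
    """Choose a moderator on the exploratory subset; test only that one on the holdout."""
    explore, holdout = split_sample(rows, seed=seed)
    # Exploratory phase: anything goes, pick the largest absolute moderation effect.
    best = max(moderators, key=lambda m: abs(moderation_effect(explore, m)))
    # Confirmatory phase: one preselected test on untouched data.
    return {
        "moderator": best,
        "explore_effect": moderation_effect(explore, best),
        "holdout_effect": moderation_effect(holdout, best),
        "holdout_p": permutation_p(holdout, best),
    }

if __name__ == "__main__":
    # Simulated, purely hypothetical site-level data for illustration only.
    rng = random.Random(42)
    rows = []
    for _ in range(200):
        online = rng.random() < 0.5
        rows.append({
            "online": online,
            "us_sample": rng.random() < 0.5,
            "effect": 0.2 + 0.3 * online + rng.gauss(0, 0.2),
        })
    print(explore_then_confirm(rows, ["online", "us_sample"]))
```

Because the holdout p-value comes from a single test chosen before looking at the holdout, it is not inflated by the search over moderators done on the exploratory half; the cost is that each phase uses only part of the data.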