(1/n) I’ve been following discussions of #Bayesian sequential analyses, type I error, alpha spending etc. At the risk of offending everyone (please be kind!), I see reasons Bayesian sponsors and regulators can still find value in type I error rates and so forth.

(2/n) I’m focused on the design phase. After the trial, the data is the data. Lots of good stuff has been written on the invariance of Bayesian rules to stopping decisions. But in prospectively evaluating a trial design, even for a Bayesian there is a cost to interims, etc.

(3/n) Example…ultra simple trial. Normally distributed data. Known sigma=2. Mean is either 0 (null) or 1 (good). Simple decision space at end of trial…approve or not. A Bayesian utility would place values over the 4 combinations of truth/decision.

(4/n) To keep to 240 characters in what follows, those 4 combinations of truth/decision are approve good drug (AG), approve null drug (AN), do not approve good drug (DG) or do not approve null drug (DN).

(5/n) Approving a good drug is good. Approving a null drug is bad. Definitely can argue about the utilities of non approval. It won’t affect my main point so I’m going to keep it simple and say U(approve good=AG)=1, U(AN)=(-a), U(DG)=0, and U(DN)=0.

(6/n) After you collect data, you get a posterior probability the drug is good (Pi). You should approve if the expected utility of approval is greater than the expected utility of non-approval. With a little math, approve if Pi > a/(1+a).

(7/n) Suppose I run a fixed (non-adaptive) trial with 50 patients and use a=1. My prior probability of a good drug is 10%. I approve if my sample mean exceeds .675. In the design phase, what is the expected utility of my entire trial?

(8/n) The expected utility is the sum, over the 4 decision/truth outcomes, of Pr(outcome)*U(outcome). One of these terms is

Pr(approve null drug)*U(approve null drug) = Pr(app | null) Pr(null) U(AN). Pr(app|null) is the type I error.

Pr(approve null drug)*U(approve null drug) = Pr(app | null) Pr(null) U(AN). Pr(app|null) is the type I error.

(9/n) Another term is Pr(approve good drug)*U(approve good drug) = Pr(app | good) Pr(good) U(AG). Pr(app | good) is the power. Both the type I error and power are key components of the Bayesian expected trial utility.

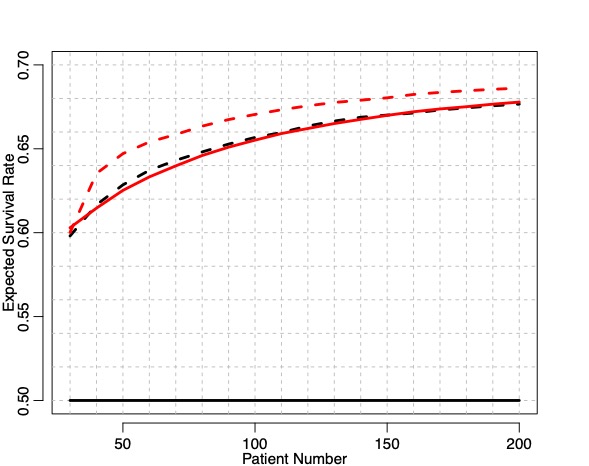

(10/n) With a fixed n=50 trial, my expected utility is 0.080 (an infinite sized trial would have expected utility 0.100). I’d need to have meaningfully scaled utilities for this to be interpretable (lives saved, etc.), but here I just want to do some comparisons.

(11/n) Now consider a Bayesian sequential trial with max n=50. I look after every patient, and stop/approve whenever my posterior probability meets the Pi > a/(1+a) condition. What is the expected utility of this sequential trial? It’s MUCH lower….0.023 (compared to 0.080).

(12/n) If I’m a regulator, this matters to me. I want trials that meet some minimum standard so the utility of the drug supply I approve stays above a certain level. It’s reasonable for me to ask trials to have sufficient expected utility.

(13/n) I can certainty create an adaptive Bayesian trial that has the same expected utility as the N=50 trial. I have to have a bigger maximum than n=50 (expected N might still be lower), and I may want to think about my interim schedule.

(14/14) None of this argues for type I error etc, after the trial is done. Nor is it anti-adaptive. Just simply that many of the things frequentist think about (have a low chance of approving a bad drug) still have relevance somewhere in Bayesian trial design.

• • •

Missing some Tweet in this thread? You can try to

force a refresh