💎 In yesterday's #growthgems newsletter I shared insights from the recent "Understanding, Optimizing and Predicting LTV in Mobile Gaming" GameCamp webinar

Read the 15 #LTV 💎 from this 100 min video in the Twitter thread below 👇

💎 1/15

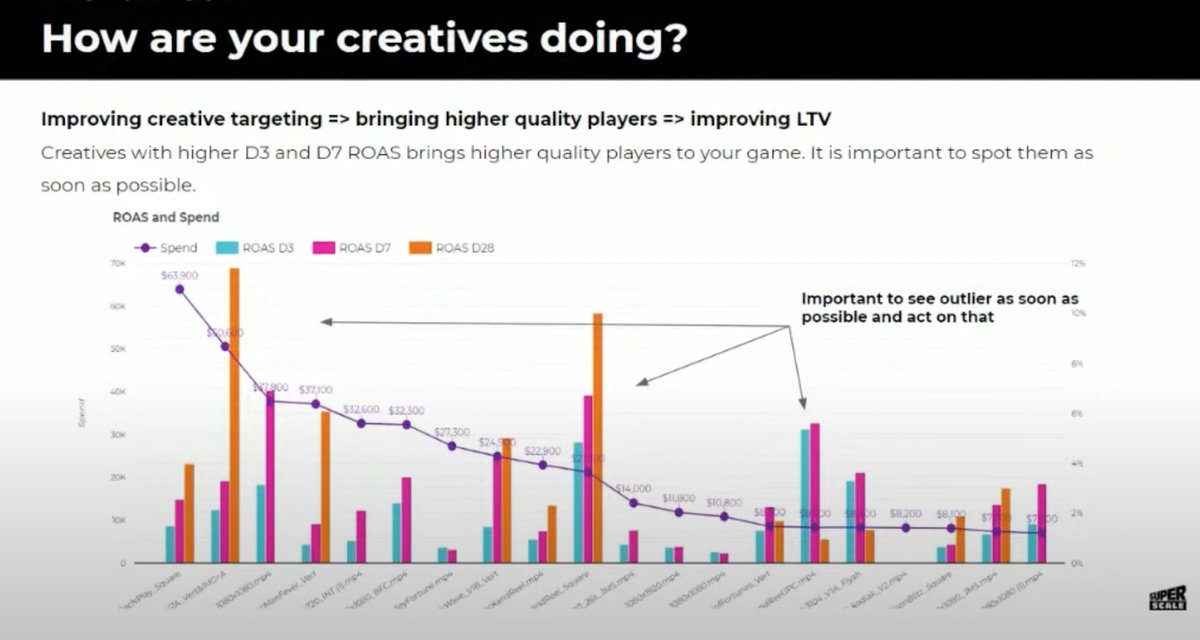

Chart your creatives on an X axis and check your D3/D7/D28 ROAS to quickly spot outlier creatives (both good and bad) so you can act on them (by reallocating spend, for example).
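
A minimal sketch of the outlier check, assuming you can export spend and day-N revenue per creative. The function names, the `(name, spend, revenue)` row shape and the 1.5 × IQR fence are illustrative assumptions, not something the webinar prescribes:

```python
from statistics import quantiles


def roas(revenue, spend):
    """Return on ad spend; 0.0 when nothing was spent."""
    return revenue / spend if spend > 0 else 0.0


def flag_outlier_creatives(rows, k=1.5):
    """rows: iterable of (creative_name, spend, day_n_revenue).

    Returns {creative_name: "high" | "low"} for creatives whose ROAS
    falls outside the k * IQR fences (an assumed rule of thumb).
    """
    values = {name: roas(revenue, spend) for name, spend, revenue in rows}
    q1, _, q3 = quantiles(values.values(), n=4)
    low_fence = q1 - k * (q3 - q1)
    high_fence = q3 + k * (q3 - q1)
    flagged = {}
    for name, value in values.items():
        if value > high_fence:
            flagged[name] = "high"
        elif value < low_fence:
            flagged[name] = "low"
    return flagged


# Made-up D7 numbers: (creative, spend, D7 revenue)
d7_rows = [
    ("A", 100, 30), ("B", 100, 32), ("C", 100, 28), ("D", 100, 31),
    ("E", 100, 90), ("F", 100, 5), ("G", 100, 29), ("H", 100, 33),
]
print(flag_outlier_creatives(d7_rows))
```

Run it once per window (D3, D7, D28) so a creative that looks good early but fades later still shows up.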

💎 2/15

A benchmark comparing ROAS (e.g. D7/D28) for each week (X axis) with success thresholds allows you to evaluate your UA strategies.
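
One way to sketch this weekly benchmark, assuming ROAS per install week is already computed. The threshold values and the `evaluate_weeks` helper are hypothetical; set the targets from your own payback goals:

```python
# Hypothetical success thresholds per ROAS window.
THRESHOLDS = {"d7": 0.25, "d28": 0.60}


def evaluate_weeks(weekly_roas, thresholds=THRESHOLDS):
    """weekly_roas: {week_label: {"d7": float, "d28": float}}.

    Returns {week_label: {window: True/False}}, where True means the
    week met or beat the threshold for that window.
    """
    report = {}
    for week, roas_by_window in weekly_roas.items():
        report[week] = {
            window: roas_by_window[window] >= target
            for window, target in thresholds.items()
            if window in roas_by_window  # recent weeks may lack D28 data
        }
    return report


# Made-up numbers for two install weeks.
weekly = {
    "W01": {"d7": 0.30, "d28": 0.55},
    "W02": {"d7": 0.20, "d28": 0.70},
}
for week, result in evaluate_weeks(weekly).items():
    print(week, result)
```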

💎 3/15

Understanding the CPI to spend relationship is a key factor in understanding UA payback and how you can scale your campaign.

💎 4/15

Ask yourself these questions to find the most profitable players (Android):

1. Is the group great at buying IAPs, and does it buy frequently?

2. Is the group heavily engaged, and does engagement grow over time?

3. Does the group watch ads frequently?

Have a benchmark to compare these new LAL/audiences to.

💎 5/15

Do not create too many groups/segments of players when looking at LTV. You need to make sure they are different so you can understand which group is better. However, it is not enough to segment based on how much they purchase; you need to use other attributes too.

💎 6/15

If your LTV curve looks like a step function with jumps, either your game is relying mainly on LiveOps offers (not ideal design) or the number of payers/players is too low.

💎 7/15

Your special offers are great if you can increase revenue per user while minimizing the discount. The value is in the personalization and in showing the right offer (with relevant content and price) at the right time.

💎 8/15

Predicting LTV is different at different game stages: soft launch, some time after global launch, or when the game is at maturity.

💎 9/15

In soft launch we do not have the whole LTV curve, but we still need to estimate the lifetime length. Product knowledge is crucial because you're extrapolating. You have to know:

- Monetization limits (depth)

- User behavior
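
To make the extrapolation concrete, here is a rough sketch. It assumes retention follows a power law and that revenue per active user per day (`arpdau`) stays flat; both are simplifying assumptions of this example, not the webinar's method. The `lifetime_days` input is exactly where product knowledge comes in:

```python
import math


def fit_power_law(days, retention):
    """Least-squares fit of retention(d) = a * d**b in log-log space."""
    xs = [math.log(d) for d in days]
    ys = [math.log(r) for r in retention]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), slope  # (a, b)


def projected_ltv(a, b, arpdau, lifetime_days):
    """Sum expected active days up to the assumed lifetime, times ARPDAU.

    Day 0 counts as 1.0 (every installed user is active on install day).
    """
    active_days = 1.0 + sum(a * d ** b for d in range(1, lifetime_days + 1))
    return arpdau * active_days


# Made-up soft-launch retention for D1, D3, D7 and D14.
a, b = fit_power_law([1, 3, 7, 14], [0.40, 0.25, 0.17, 0.12])
for lifetime in (90, 180, 365):
    print(lifetime, round(projected_ltv(a, b, arpdau=0.10, lifetime_days=lifetime), 2))
```

The spread between the 90, 180 and 365 day projections shows how much the answer depends on the lifetime you assume.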

💎 10/15

Most important step in LTV model development: LTV model and forecast validation. ALWAYS have a validation sample to test the model against, and make sure it is representative. Do not build the model to work especially well against your validation sample (i.e. "overfit").
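
A minimal sketch of the holdout idea, using a deliberately simple model (a single D7 to D28 multiplier) as a stand-in for whatever LTV model you build. The cohort fields `ltv_d7` and `ltv_d28`, the 25% holdout share and the helper names are assumptions for illustration:

```python
import random


def split_cohorts(cohorts, holdout_share=0.25, seed=0):
    """Randomly set aside a validation sample that the model never sees."""
    shuffled = list(cohorts)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - holdout_share))
    return shuffled[:cut], shuffled[cut:]


def fit_multiplier(train):
    """Toy model: one D7 -> D28 multiplier estimated on the training cohorts."""
    return sum(c["ltv_d28"] for c in train) / sum(c["ltv_d7"] for c in train)


def mape(multiplier, sample):
    """Mean absolute percentage error of the D28 forecast on a sample."""
    errors = [
        abs(multiplier * c["ltv_d7"] - c["ltv_d28"]) / c["ltv_d28"]
        for c in sample
    ]
    return sum(errors) / len(errors)


# Made-up cohorts: D28 LTV is roughly 2.5x D7 LTV with some noise.
rng = random.Random(42)
cohorts = [
    {"ltv_d7": d7, "ltv_d28": d7 * rng.uniform(2.2, 2.8)}
    for d7 in (0.8, 1.0, 1.1, 1.3, 0.9, 1.2, 1.4, 1.0)
]
train, validation = split_cohorts(cohorts)
model = fit_multiplier(train)
print("validation MAPE:", round(mape(model, validation), 3))
```

Fit on `train` only, and look at the validation error once the model is settled. Tuning the model until the validation number looks good is the overfitting the tweet warns about.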

💎 11/15

Some time after global launch you need different LTV models by country group (Tier 1 vs. Tier 2 vs. Tier 3), by acquisition source and optimization type (Google Ads vs. video networks), and by monetization type (in-apps vs. ad-based, or live ops vs. regular purchases).

💎 12/15

Always think about how the LTV model will be used. Example: an LTV model for the UA team needs to work with a small sample size so decisions can be made at the campaign level, whereas an LTV model for strategic decisions needs to be more accurate and can be more thorough.

💎 13/15

If you are encountering issues when leveraging machine learning, build a quick model with rough "soft launch techniques" for quick validation. Have a few models using a very limited amount of data so you can retrain the machine learning models as soon as possible.

💎 14/15

Understanding the impact of LiveOps events on LTV is difficult when only 3 or 4 LiveOps events have been run. Look at peaks during the LiveOps event. "Slice" the LTV curve into smaller periods, define a validation cohort for each slice and calculate the impact on LTV over the period.

💎 15/15

When evaluating the impact of LiveOps on LTV, do not forget to take into account the novelty effect: peaks tend to be higher during the first LiveOps events.

That's all!

Mining ⛏️ long-form resources to extract #mobilegrowth insights 💎 takes time.

If you enjoyed it, please share/RT🙏

Receive gems directly in your inbox with the free newsletter growthgems.co/free-newslette…

I'm going back down for more 💎 — Sylvain
