"The brain is a computer" is a damn problematic metaphor. I prefer to say that "the brain is an intuition machine".

The term computer is conventionally understood to mean a digital computer. It's the kind that we program. It's the kind that is designed by minds and manufactured on assembly lines. It's the kind that can't repair itself. It's the kind without any autonomy.

It is a horrible metaphor. "The brain is an intuition machine" is a better metaphor. It's the kind that learns from experience. It is the kind that develops in an inside-out manner. It is the kind that creates itself. It is the kind that repairs itself. It is autonomous.

Both computers and intuition machines are computational in nature. From the Universal Turing perspective, this simply implies that neither is driven by magic or hypercomputation. There is no such thing as magic or hypercomputation in reality.

However, "computation is the same as causality" is an impoverished notion. Allow me to use another definition. Computation is the interplay of intentionality and mechanism. I borrowed this from Brian Cantwell Smith.

What this means is that computation is the kind of process that translates intentions into executable actions. When you write a program, in either an imperative or declarative language, this effectively is what is happening.

When a living organism intends to fight or flee, this is what happens. Its intentions are translated into the procedures that instruct it to move.

Computers do not have their own intentions; their intentions are specified by their designers in hardware or in software. Intuition machines have their own intentions, but their specification is grown through experience.

Computers and intuition machines do not share the same architecture because they are designed differently. @conways_law argues that our human designs reflect the organization of the designers. Analogously, intuition machines reflect the organization of a designer without a mind!

But why does an intuition machine or a brain need to build itself from the inside out?

There is an obvious difference in the information available from a first-person perspective as compared to a third-person perspective. The latter can only approximate the behavior of what it refers to.

Now imagine something that has to build itself from within. It does so in a gradual manner, continuously integrating and testing itself with the environment. It must do this in such a way that it is always alive. At no point in its construction is it ever dead.

This implies a constraint on the operations it can perform to evolve. It has a different set of operations from the operations available to an external designer.

This is why in biology, wheels are mostly absent except for a few very rare cases. Wheels are physically disconnected from the bodies of their containing vehicles, which is hard to arrive at when every intermediate step must remain a living, connected whole.

Similarly, we cannot understand anything unless it is semantically connected with a concept that we have previously understood. We cannot download instructions on how to fly a helicopter unless they are in the unique language of our individual brains.

Unfortunately, unlike computers that are manufactured to have the same instruction set, we invent our own instruction set as we develop our minds.

Before 2.4 years of age, this internal instruction set is in flux. We have a difficult time accessing our memories from infancy because they are encoded in an entirely different instruction set.

santafe.edu/news-center/ne…

santafe.edu/news-center/ne…

Not all metaphors coming from computer science are bad ones for understanding the brain. The notions of a user interface and a virtual machine are two that seem useful.

The user illusion describes consciousness as a user interface: amazon.com/User-Illusion-…

I find the notion of a virtual machine (a machine simulating another machine) useful for understanding, perhaps, the relationship between system 1 processing and system 2 processing. Our reasoning system is emulated on top of an intuitive machine substrate.
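To make "a machine simulating another machine" concrete, here is a minimal sketch. It assumes a made-up three-instruction stack machine (`push`, `add`, `mul`) interpreted on top of the Python runtime; the instruction names and the `run` function are hypothetical and say nothing about how the brain does it.

```python
def run(program):
    """Interpret a tiny, hypothetical stack-machine program on the host runtime."""
    stack = []
    for op, *args in program:
        if op == "push":
            stack.append(args[0])
        elif op == "add":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "mul":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown instruction: {op}")
    return stack.pop()


# The guest program only knows its own three instructions;
# everything else is supplied by the substrate underneath it.
program = [("push", 2), ("push", 3), ("add",), ("push", 4), ("mul",)]
result = run(program)  # computes (2 + 3) * 4
```

The guest machine's rules are strict and explicit, while the host that executes them is a far richer system that the guest program never sees.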

Late binding is another very useful metaphor. It can be argued that all complex cognitive thinking is a consequence of late binding.
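For readers unfamiliar with the term, late binding in programming means that a name is resolved to its meaning at the moment of use rather than at the moment of definition. A minimal sketch, with hypothetical names (`handlers`, `respond`) chosen only for illustration:

```python
# Illustrative only: these names are hypothetical, not from any library.
handlers = {"greet": lambda who: f"hello, {who}"}


def respond(action, who):
    # Late binding: the handler is looked up when respond() runs,
    # not when respond() was defined.
    return handlers[action](who)


first = respond("greet", "world")

# Rebind the name after the caller already exists.
handlers["greet"] = lambda who: f"good evening, {who}"
second = respond("greet", "world")
```

The caller never changes, yet its behavior does, because the meaning of `"greet"` is decided only at the moment it is needed.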

I would go as far as saying that the correct metaphor for explaining the brain is not (1) as a computational system or (2) as a dynamical system. Rather, an approach with agents and language seems most promising.

Multi-agent architectures of the brain are not new. However, they all make the mistake of assuming that the language the agents communicate in is strict, rather than ambiguous like human natural language.

This gets us back to the instruction set idea mentioned earlier. The brain is a multi-agent system that communicates in an internal language that evolves as we learn. This is not a designed language but rather a living language; it shares features with DNA and human language.

Employing language as a metaphor for a brain makes clearer the notion of top-down causation. We understand how shared language can shape the coordination of agents in society. This same notion we can employ as an explanation for the coordination of populations of neurons.

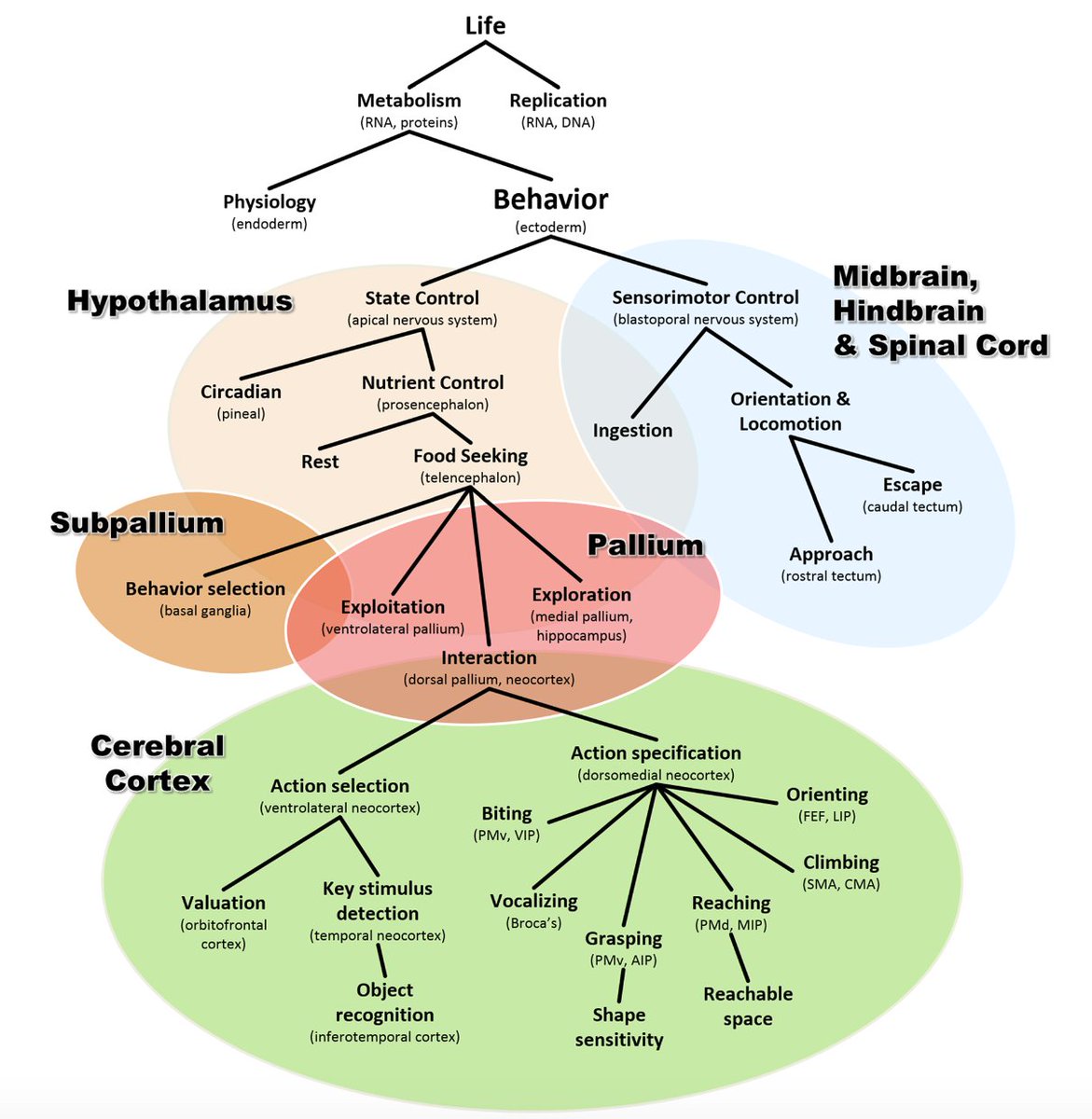

Each subsystem of the brain has its own internal language and an external language that it employs to communicate to other subsystems. There are a multitude of languages in the brain and a multitude of shared meanings between languages.

Where do all these languages come from? Most of each subsystem's internal language is innate, but the inter-subsystem languages are learned. Each subsystem is also a language learning system.

The brain composes itself by learning the interoperability language between its subsystems.

Why isn't this interoperability language hard-wired from the beginning? (1) The human brain continues to grow after birth and (2) functionality that originally belonged to non-cortical systems needs to migrate to the neocortex.

The human brain reorganizes itself by migrating innate cognitive functionality into the neocortex. This is difficult to explain, but the explanation is made easier from the perspective of language learning.

One can think of this as analogous to how a computer bootstraps itself from firmware. That is a process that migrates functionality from an original form with limited resources to a higher form with more resources.

The human infant does not re-learn object permanence, intuitive physics or intuitive psychology as many researchers have argued. These are innate capabilities of mammals and do not have to be re-learned.

Rather, the infant brain expands and transfers these capabilities to the neocortex. The human infant rediscovers these capabilities over time in a consistent manner.

Therefore, the human curriculum of infant learning is a deceptive template to use for machine learning. Learning and re-wiring existing learned subsystems are different enough that they should not be conflated.

The astrocyte scaffolding maintains a consistent cognitive self. As we sleep, our brains are refreshed and rewired to preserve our self as we wake up. Without this refreshing and rewiring, we can go mad as a consequence of the natural chaos that exists in every dynamical system.

What we have between neurons and neuroglia is a dual coding system that maintains the brain's integrity over time. I suspect there exists a digital system that may be stored as part of the neuroglia.

This is analogous to how the immune system is able to recall the complex patterns of bacteria and viruses that may infect the body. The neuroglia have been exapted to store the complex patterns of our cognition.

We remember things because our neuroglia remembers things.

Human cognition is a consequence of innate circuitry. The cognitive gadgets that we inherit from civilization (i.e. social learning, imitation, mind reading, language) are learnable only because we already have the innate circuitry and the innate inclination to learn them.

We have here a recurring pattern of code-duality, where two different cognitive processes that evolve at different speeds reinforce each other's construction.

A mistake of almost every theory is the assumption that there is only one dynamical system or computational system that is involved in cognition. There are always at least two.
