Gibson came up with the word affordance. It's derived from the verb 'afford'. I've always liked the term since it implies the recognition of possibilities. en.wikipedia.org/wiki/Affordance

There's a problem, though, with his method. He took a verb and created a noun. He should have listened to David Bohm, who realized that our noun-centric language could be restricting our ability to understand the world. Bohm called his verb-centric language the rheomode.

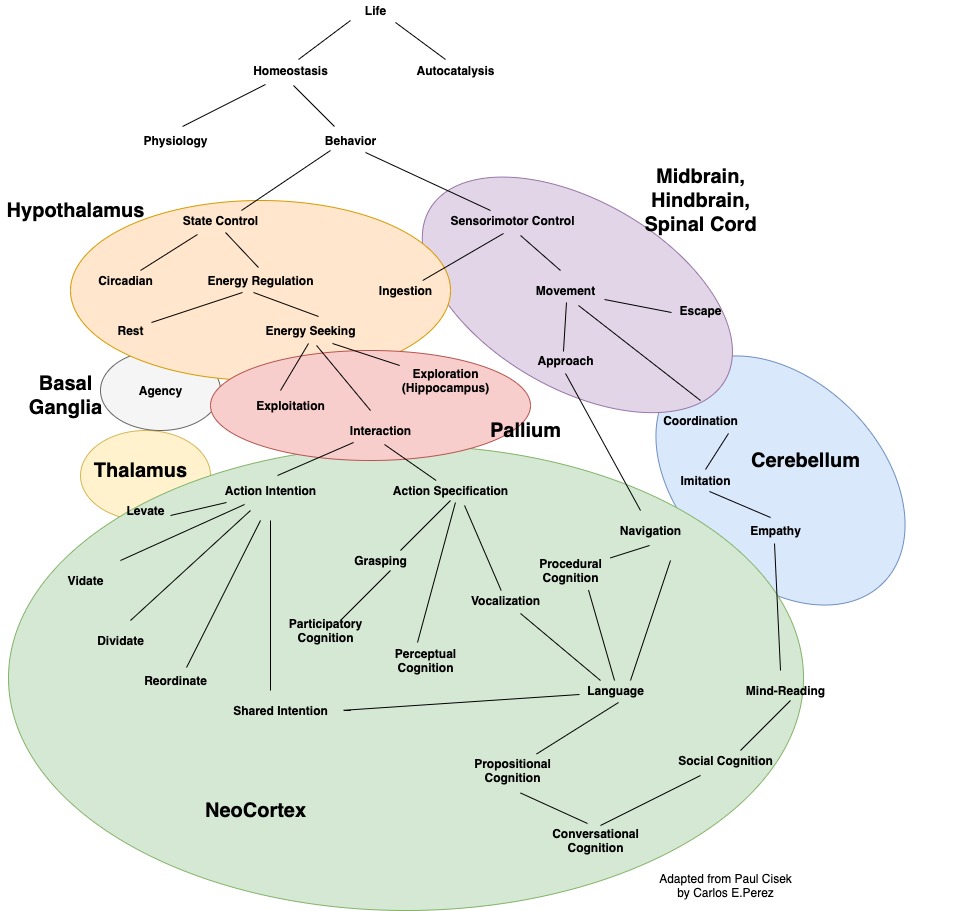

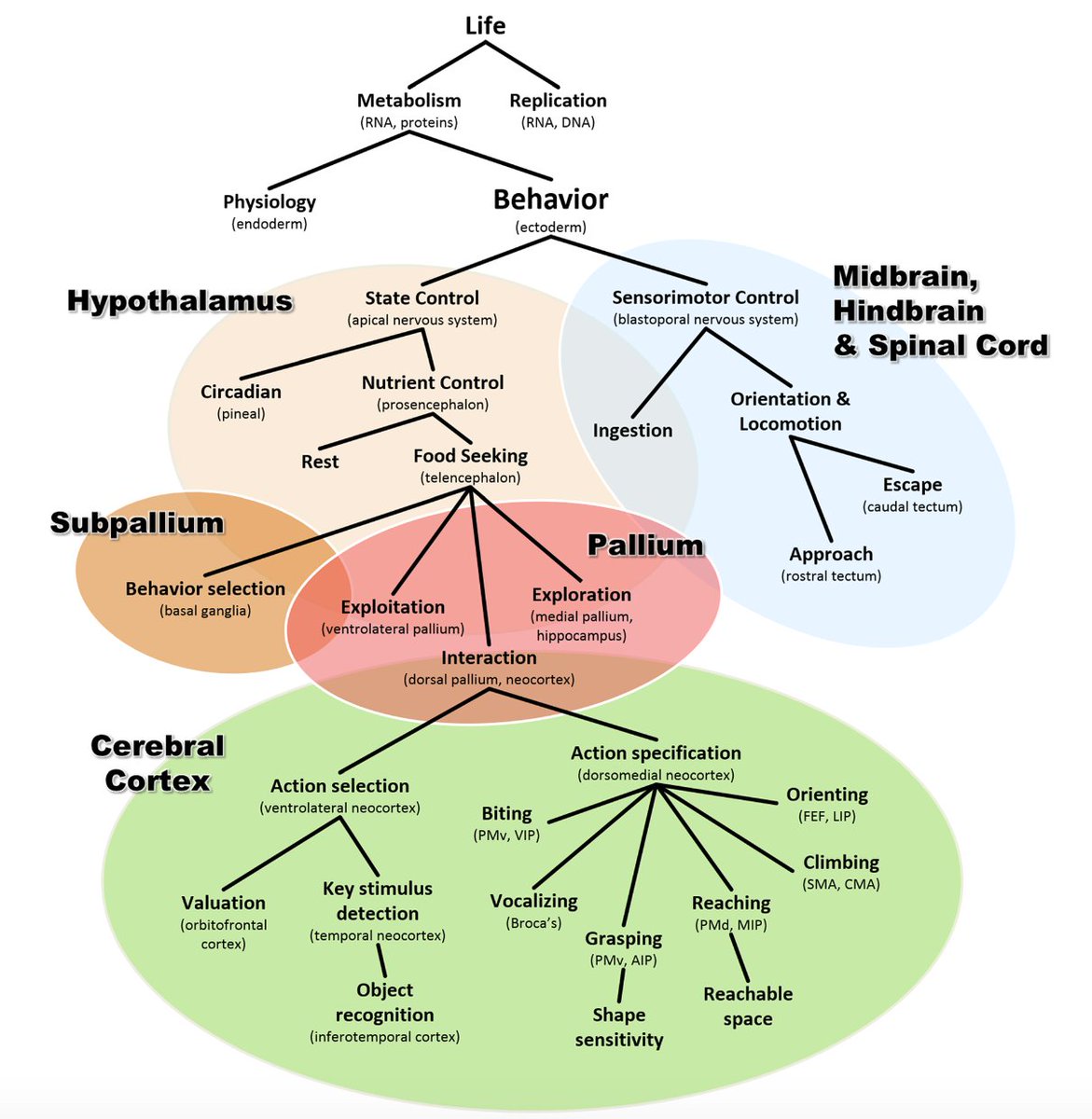

Paul Cisek decided he had had enough of the conventional taxonomy of cognition (i.e. input, output, cognition) and proposed a new taxonomy.

The first thing to notice about this is that all behavior is rooted in homeostasis. Damasio argues that the purpose of the brain is homeostasis.

The second thing to notice is that under interaction (i.e. doing) there is selection and specification. Recall from my post about the meaning of computation that computation is the interplay between intention and mechanism. medium.com/intuitionmachi…

So what happens in the cerebral cortex is... well, computation. But I want to create even finer distinctions within intention/action selection and mechanism/action specification. This is where I take inspiration from David Bohm.

First, let's reword Cisek's original taxonomy.

Homeostasis->Behavior->(Energy Regulation,Movement)->Energy Seeking->(Agency,Exploitation,Exploration,Interaction)->(Intention,Specification)->Learning

Then let's create even finer-grained distinctions using Rheomode verbs. These are to levate, to vidate, to dividate and to reordinate. They relate, respectively, to attention, perception, decomposition and ordering.

Now let's throw in a mix of C.S. Peirce's semiotics and we have a new, refreshed and advanced vocabulary for cognition! medium.com/intuitionmachi…

Gone is the impoverished notion of thinking of the brain as a computer! I'm fed up with conversations going in circles because we have a piss-poor vocabulary! medium.com/intuitionmachi…

We now have a vocabulary that expresses everything that needs to be expressed about cognition. This is a first step toward a theory of general intelligence.

Let's revisit 4EA (Embedded, Embodied, Extended, Enacted and Affective) to see if we've covered all bases in our vocabulary. Cisek's evolutionary perspective captures embedded, embodied and enacted. Extended seems to be an advanced cognitive capability that we still need to express.

Extended can be expressed as a generalization of shared intentionality. Shared intentionality (Tomasello) is coordinated behavior with other agents. Coordinated behavior with tools is usually what extended implies. Shared information is expressed under Peirce's genuine signs.

Social cognition, imitation, mind-reading and language are advanced cognitive capabilities. These are distinctions of shared intentionality. Sensorimotor empathy is shared movement. Computational empathy is shared intention and specification.

Symbol grounding (or rather, detachment) is a downstream capability from computational empathy. This follows the evolutionary path as a consequence of shared behavior: participation->perception->procedure->proposition.

Let's expand this using Gibson's method of defining a relationship across the duality of agent and environment. In this case, the environment includes other agents that participate with the same intentions.

BTW, I failed to mention that in Cisek's taxonomy, perception is absent! This is because action and perception are the same thing. You can see that underneath action selection sits the act of object detection.

In contrast, Bohm's Rheomode, which consists of verbs (i.e. processes and not things), doesn't have action, only perception! In cognitive evolution, we begin with action and evolve into perception. This further evolves into procedural and propositional thinking.

What I'm showing is how the evolution progresses towards higher-order thinking from a kind where action and perception are inseparable. Although we may think that higher-order thinking is separate from action, this is not the case for humans.

Human cognition, as a consequence of our evolution, implies the permanent coupling of action and perception. We learn by performing actions and not by perception alone!

Human intelligence is a consequence of prior cognitive habits that were developed long before Homo sapiens set foot on this earth. That is why it is senseless for researchers to ignore evolution.
