🚘 Buckle in: it’s time to talk about @Tesla FSD!

In this thread, I’ll share what I find most impressive and the challenges that remain before the “F” in “FSD” is true.

Let’s go!

1. @Tesla FSD is a polarizing topic in AV for a few reasons:

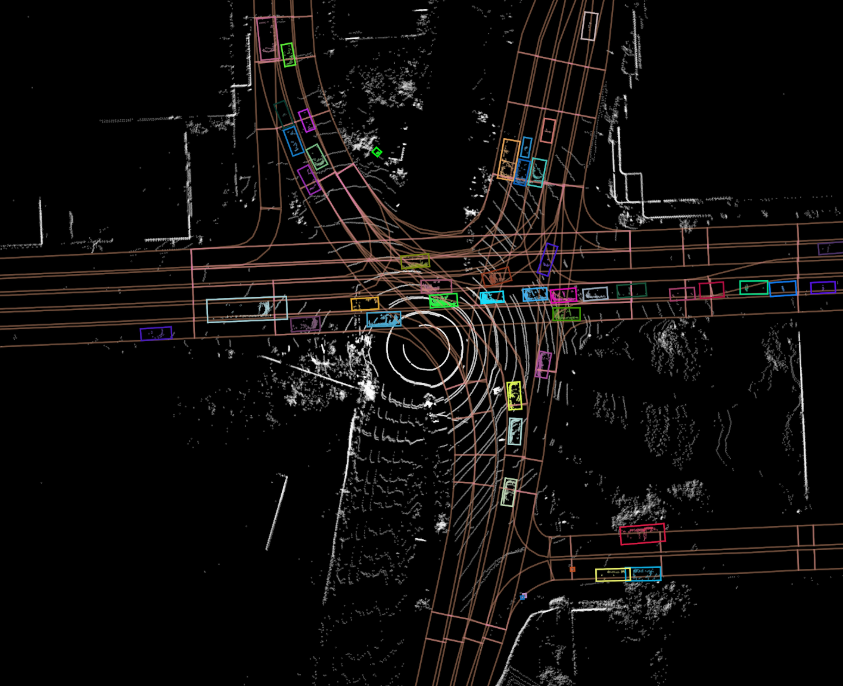

✅ No use of pre-recorded HD maps

✅ Perceiving the world with cameras (no lidar!)

Many think FSD is very different from most other self-driving technologies, but the difference boils down to these two points.

2. What @Tesla FSD is thus able to accomplish:

🗺 By generating a map on the fly, instead of pre-loading one recorded earlier, FSD can theoretically drive anywhere

💰 By using fewer sensor modalities, FSD can realize cost savings

3. However, huge challenges remain.

Remember, @Waymo only just unlocked commercial driverless operation (i.e. no Safety Driver), and it did so using both a pre-recorded map and extra sensor modalities (lidar, and higher-resolution cameras and radars).

Let’s talk about those challenges.

4. Map challenges 📍

FSD appears to not detect this median, and thus tries to drive down the wrong side of the road.

Is this an “edge case” to iron out, or is it a monstrously large technical challenge to infer road rules in real-time?

5. Map challenges 📍

FSD appears not to understand that this is a one-way street, and so it does not make the lane change to the left.

Humans intuitively recognize this based on the directions of parked cars (and signs). Machine intelligence is not quite at that level.

6. Map challenges 📍

I’m not quite sure what’s going on here, honestly. The vehicle’s planned route keeps switching between going left and going right, and the driver has to intervene as the vehicle dives for the curb.

This is why drivers need to be attentive at all times.

7. My bias: no company utilizes a pre-recorded HD map because they love adding cost. They do so because inferring road features in real-time is an exceptionally hard challenge.

Perhaps you can do so to 99.9% accuracy in the short-term, but is that good enough?
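A rough back-of-the-envelope sketch of why 99.9% might not be good enough. It assumes (my assumption, not something measured from FSD) that each road-feature inference is independent and correct 99.9% of the time. The function name `prob_no_error` and the inference counts are hypothetical, chosen only for illustration.

```python
def prob_no_error(per_inference_accuracy: float, num_inferences: int) -> float:
    """Chance that every one of num_inferences independent inferences is correct."""
    return per_inference_accuracy ** num_inferences


if __name__ == "__main__":
    # Hypothetical counts of road-feature inferences made over a drive.
    for n in (10, 100, 1_000, 10_000):
        chance = prob_no_error(0.999, n)
        print(f"{n:>6} inferences: {chance:.3f} chance of zero errors")
```

Under those assumptions, the chance of getting through 1,000 inferences with no mistakes is only about 37%. Real errors are unlikely to be independent, so treat this as intuition, not a prediction.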

8. Vision challenges 👀

In this instance, FSD appears to be about to hit a sign, requiring intervention. There is no detected object in the visualization.

Is this an “edge case” that more data will iron out? Or is it that depth-estimation with only cameras is fallible?

9. Vision challenges 👀

FSD decides to proceed at an unprotected junction even though a cross-traffic vehicle is approaching. This requires driver intervention.

Perhaps the darkness limited the range of the cameras and vision algorithms?

10. My bias: no company adds lidars to their robotaxis because they love the added cost. They do so because lidar compensates incredibly well for the weaknesses of cameras (like seeing in darkness) and radars.

11. Given FSD’s “beta” designation, these sorts of issues are to be expected.

However, the clips above were taken from only 7 minutes of driving. Seeing these types of issues, at that frequency, makes me doubt that this system will be ready for full self-driving anytime soon.

12. Now, we should also spend some time acknowledging that FSD is a damned fine accomplishment.

It has been built with a relatively small team, and there are many impressive interactions.

For instance…

13. When you don’t have a pre-recorded HD map to localize to, or a lidar, it can be tough to accurately perceive the exact proximity of objects with the required granularity.

As such, this slight deviation for a parked vehicle was very nice!

14. Traffic light detection, without encoding positions in a pre-recorded HD map, is inherently a data-driven problem.

From the small number of clips I’ve seen, FSD is able to accurately detect not just traffic light state, but also the relevance of each traffic light to each lane. Nice!

15. Even though I pointed out a few failure modes of FSD’s attempt at inferring road rules in real-time, it is still super impressive to see their progress here.

16. After balancing the current weaknesses and strengths of the system (albeit with limited data), it is clear that FSD is an impressive technological accomplishment.

However, is a fully self-driving @Tesla imminent?

17. According to the little data I have, the answer is no.

FSD has taken a complex problem and made it more complex, with no pre-recorded HD map and reduced sensor modalities.

Their data advantage helps, but given this starting point, it is unclear if it is meaningful.

18. The fact that we won’t have fully self-driving @Tesla vehicles soon does not mean we cannot be excited about FSD.

It’s healthy to see diversity in approach. It drives our industry to deliver a better product for customers.

Congrats to @Tesla on shipping. Now, add driver monitoring!

19. Caveat: All of the above is speculation based on only what I can see. I am sure I am wrong in many places, so please don’t take the above too seriously.

Thank you for reading 🙏

Sources:

1️⃣

2️⃣
