What's still out there, polling-wise?

* Presumably we'll get some YouGov/CBS in 10 minutes here.

* Emerson's releasing polling all day.

* Monmouth in PA.

* Rumors of another round of Fox News state polls, though I'm not 100% sure about those.

* NBC/Marist hasn't released a PA poll this round, so that may be coming.

* Presumably Quinnipiac has something up its sleeve?

* A handful of one-off state polls from local universities/newspapers may weigh in again, but they can be budget-constrained and their timing is hard to predict.

* Morning Consult has mostly been keeping its state polls paywalled; I don't know if we'll get anything public.

* Maybe one more round of Ipsos state polls?

Of course we'll get weird/random stuff too, a lot of which will probably herd, but this is ~the list of polls I care about.

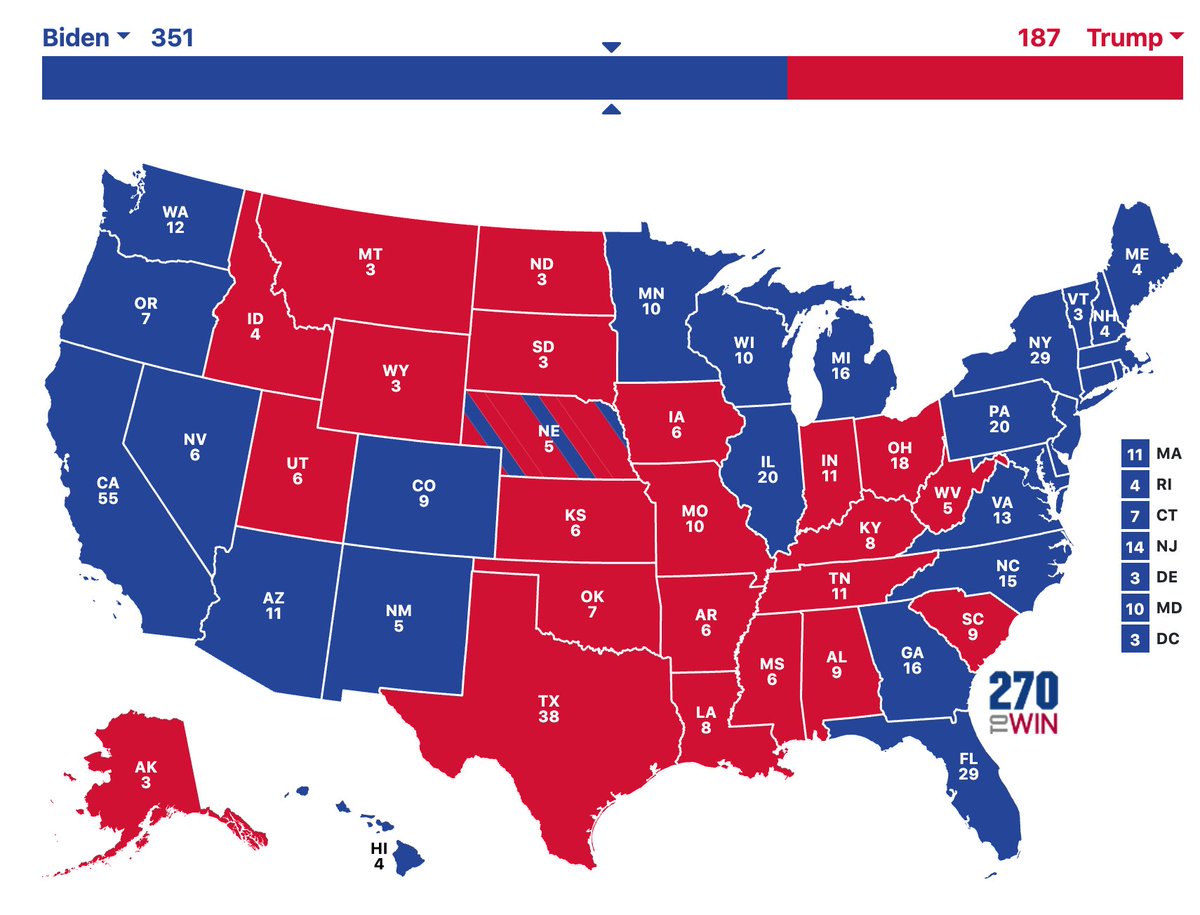

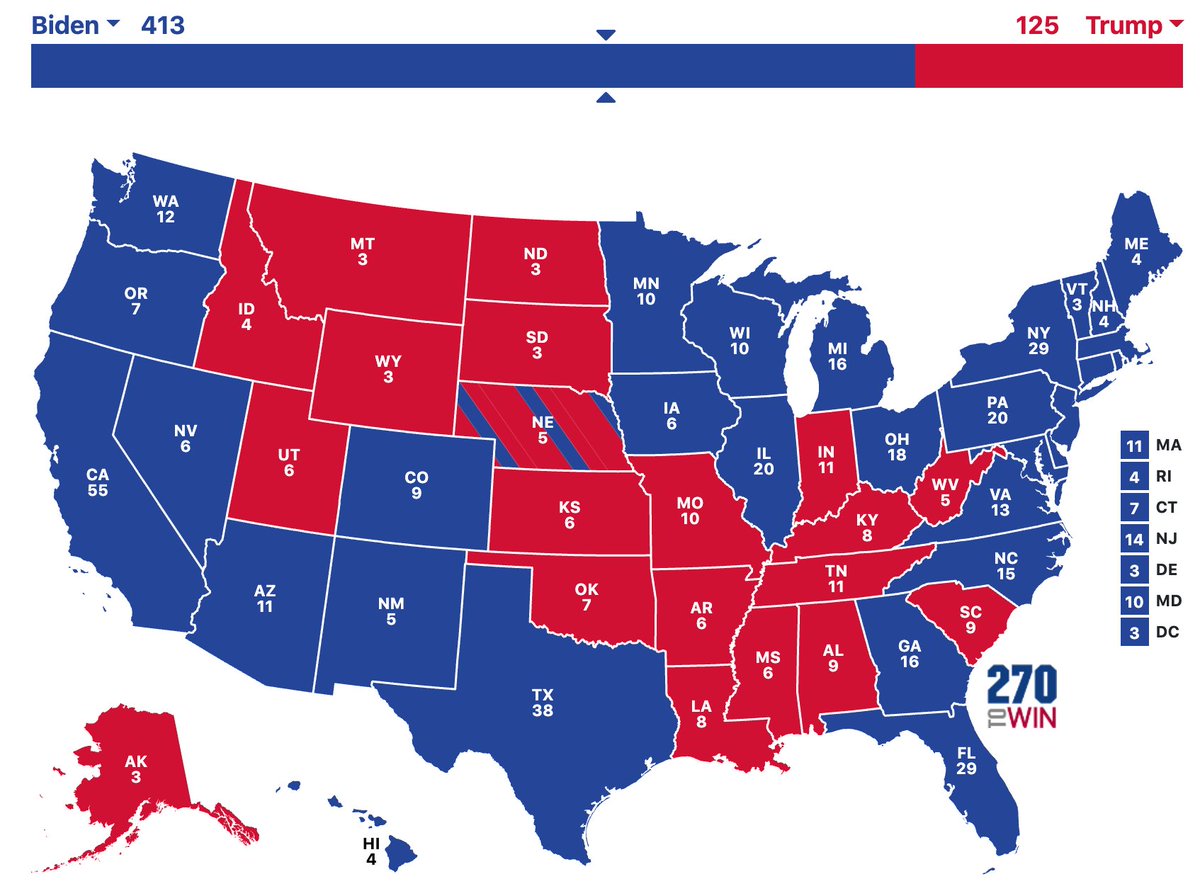

Well, it looks like what we got from YouGov/CBS is modeled *forecasts* rather than new polling per se. It's a cool product, but we can't use these in our own forecasts, since we're averaging polls, not other people's forecasts.

cbsnews.com/news/biden-tru…