An Autopsy of #AI #COVID19 #cough diagnosis

"We think this shows that the way you produce sound changes when you have COVID, even if you’re asymptomatic" @MIT

How this model might work but why it might be very broken: thread 1/25

@zsk @buzzhollandermd

https://twitter.com/EricTopol/status/1323310979858325509

AI is not magic: AI simply builds up a rich representation of the world (a model) and uses this model to predict, categorise, and create. What is exciting about AI is the complex relationships it can reflect. Key tweets are 8, 11, and 13-19. The rest is background. 2/25

For example, does wet weather affect attendances at A&E (ER)? My instinct (as an Emergency medicine doctor) is that there is an effect, but this effect depends on whether it was raining the day before, whether it is cold or warm, and whether Liverpool was playing football. 3/25

AI can capture these relationships. So, if the sound you produce when you force a cough whilst healthy differs from the sound you produce when you force a cough with Covid-19, then AI can probably detect this difference. The question is: will there be a difference? 4/25

This is biological plausibility. It is central to #AIinHealthcare because AI is not magic. AI cannot glance at tea leaves to predict how many people will attend my emergency department. Tea leaves (I’m 95% certain) have absolutely no relationship to these attendances. 5/25

However, if an AI can learn how combinations of factors conspire to affect a person’s decision to attend, then it can predict accurately. So how does Covid-19 affect your cough? SARS-CoV-2, the virus which causes Covid-19, enters cells via a protein called ACE2. 6/25

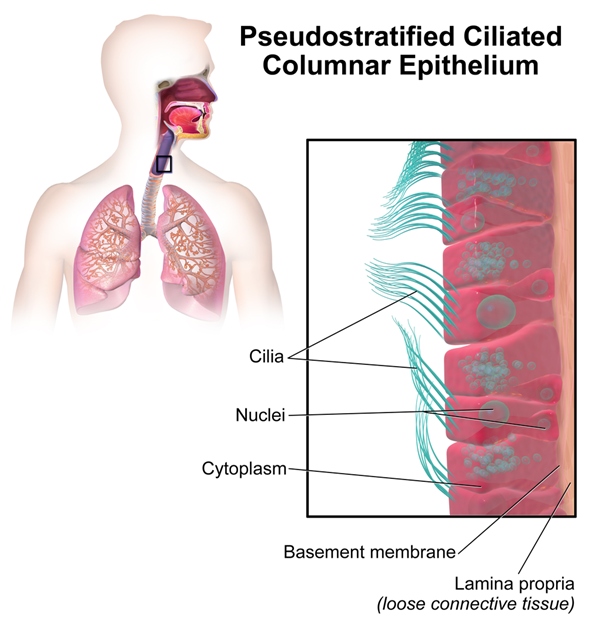

Now, the ciliated epithelium (cells with hairs to move dust/dirt) of vocal cords is rich in ACE2. The squamous epithelium (other outer-layer cells around the cords) expresses less ACE2. Image: Blausen.com staff (2014) "Medical gallery of Blausen Medical 2014". 7/25

Plausibly, viral entry into the cells of the ciliated epithelium disrupts their function, reducing vocal cord lubrication. Drier vocal cords = change in forced cough.

But here is the problem: drier cords = sore throat.

Forced cough should only be affected once symptoms develop. 8/25

Remember, cough samples were generated by people forcing themselves to cough. Asymptomatic individuals were those without an involuntary cough or any other symptom. The AI is not listening passively to a pattern of coughing: that would be an entirely different model 9/25

Other factors which may affect cough are muscle degradation (e.g. from coughing frequently, or low energy from fighting the infection), vocal fatigue (again, from coughing or from shortness of breath), and emotional state. These features plausibly differentiate health from illness 10/25

But hang on: the model performed *better* on asymptomatic individuals? This is where it falls apart for me. Every factor which should affect forced-cough sounds ought to become easier to detect as symptoms develop, not harder. There is no mechanism to explain this 11/25

Bizarrely, the model performs worst on non-Covid patients with diarrhoea, misclassifying over 1 in 4 as having Covid. I can see no plausible explanation for how diarrhoea could affect your cough in such a way as to make this model think you have Covid-19. 12/25

None of these mechanisms should exhibit any significant effects in asymptomatic individuals at all. So, what’s going on here? One explanation is bias using sentiment (emotion) in the model. These individuals knew their test results already, which could impact emotion. 13/25

But, reviewing the original paper: ieeexplore.ieee.org/stamp/stamp.js…, we see that sentiment contributed least to the model’s accuracy. The baseline model with just muscular degradation and the cough sound itself only performed at 60% accuracy. That’s not much better than a coin toss 14/25

Vocal fatigue contributed 19% additional accuracy from baseline (getting 4 in 5 right). Biologically, this effect seems reasonable, but a miss rate of 1 in 5 is difficult to tolerate in the real world. Inability to differentiate between different illnesses is a weakness 15/25

But the authors added in another biomarker, contributing a further 23% accuracy to the published 98.5%. Certainly an impressive feat: so what is this magic bullet? Well… it’s the ability to distinguish between people whose mother-tongue is Spanish vs English. 16/25

“We hypothesized that such models capable of learning features and acoustic variations on forced coughs trained to differentiate mother tongue could enhance COVID19 detection using transfer learning.” This reasoning feels weak at best. 17/25

Covid-19 affects people’s coughs differently based on their language? Here's what I think has actually happened (we would need the metadata from @mit to know!): the dataset is unbalanced with, say, the majority of asymptomatic positive Covid patients being Spanish-speaking. 18/25

If the model learns to identify whether someone is a Spanish or English speaker, it is able to use this spurious correlation with asymptomatic +ve covidity to cheat and work out whether someone has Covid. With only 100 asymptomatic positive patients in the data, this is important 19/25
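To make the suspected shortcut concrete, here is a minimal sketch in Python. The counts are invented for illustration (the real language split is unknown without the metadata), and `language_only_rule` is a hypothetical stand-in, not the authors' model. It shows that a rule which never hears a cough can still look accurate when language and Covid status are entangled in the data.

```python
# Hypothetical, made-up counts for illustration only; the real split is unknown.
# Each record is (mother_tongue, covid_status).
records = (
    [("spanish", "positive")] * 90
    + [("english", "positive")] * 10
    + [("spanish", "negative")] * 10
    + [("english", "negative")] * 90
)


def language_only_rule(mother_tongue):
    """Predict Covid status from language alone, ignoring the cough entirely."""
    return "positive" if mother_tongue == "spanish" else "negative"


# Count how often the language-only guess matches the recorded status.
correct = sum(language_only_rule(lang) == status for lang, status in records)
accuracy = correct / len(records)

print(f"Accuracy from language alone: {accuracy:.0%}")
```

With this invented split the language-only rule scores 90% without any acoustic information at all, which is why the balance of the dataset matters so much.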

A similar situation occurred with a dataset I had for breath sounds for @Senti_Care. Hidden in the metadata was a 'fact' that only women get Asthma. This was simply a function of the dataset, of course, and has no resemblance to reality. 20/25

However, knowing a person’s sex gave the model tea leaves to decide that a person didn't have asthma. Auto-magically, it knows that, if you are a man, you don't have asthma. If this model made it out into the real world, where men have asthma too, it would fail hard. 21/25
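A rough sketch of how that failure plays out, again in Python with invented counts. The per-sex majority rule (`fit_majority_by_sex`) is a deliberately crude, hypothetical stand-in for what a model can pick up from skewed metadata; it is not the @Senti_Care model.

```python
from collections import Counter, defaultdict


def fit_majority_by_sex(rows):
    """Learn, for each sex, the most common asthma label seen in training."""
    counts = defaultdict(Counter)
    for sex, has_asthma in rows:
        counts[sex][has_asthma] += 1
    return {sex: c.most_common(1)[0][0] for sex, c in counts.items()}


def accuracy(rule, rows):
    """Fraction of rows where the per-sex rule matches the true label."""
    return sum(rule[sex] == label for sex, label in rows) / len(rows)


# Hypothetical counts, invented for illustration.
# In the biased training data, no man has asthma.
biased_training = (
    [("female", True)] * 40 + [("female", False)] * 10 + [("male", False)] * 50
)
# In the assumed real-world sample, men have asthma too.
real_world = (
    [("female", True)] * 25 + [("female", False)] * 25
    + [("male", True)] * 25 + [("male", False)] * 25
)

rule = fit_majority_by_sex(biased_training)
men_with_asthma_found = sum(
    rule[sex] for sex, label in real_world if sex == "male" and label
)

print("Learned rule:", rule)
print("Accuracy on biased data:", accuracy(rule, biased_training))
print("Accuracy on real-world sample:", accuracy(rule, real_world))
print("Men with asthma detected:", men_with_asthma_found, "of 25")
```

On the biased data the rule looks good, but on the balanced sample it is no better than a coin toss and misses every man with asthma.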

This model therefore does have some potential, but it is not 98.5% and it is not in asymptomatic individuals. The authors need to check their data, broaden it to differentiate between influenza, Covid-19, and other illnesses, and test it in the real world 22/25

Until then, the claim “We have proven COVID-19 can be discriminated with 98.5% accuracy using only a forced-cough and an AI biomarker focused approach.” is nothing more than hubris + hype. 23/25

“We find most remarkable that our model detected all of the COVID-19 positive asymptomatic patients, 100% of them.” We find this remarkable too. Remarkable, and, unfortunately, implausible. 24/25

For more #AIinHealthCare pitfalls, check out our 10-minute read here: phil-alton.medium.com/senti-creating…

PS. If you can predict A&E attendances using tea leaves, then please comment here, make us a brew, and tell me what my future holds. 25/25

Reference for ACE2 vocal cord expression: ACE2 Protein Landscape in the Head and Neck Region: The Conundrum of SARS-CoV-2 Infection, Descamps et al. Biology, Aug 2020. 26/
