Jay McClelland on What's missing in Deep Learning crowdcast.io/e/learningsalo…

He argues that systematic generalization is not innate in humans; it is something that we acquire.

Thus he argues that to achieve systematic generalization we need to devise machines that learn how to do systematic generalization. That is, a meta-solution to the problem.

Languages are not fully systematic. Exceptions arise in the forms we use most often. Language is 'quasi-systematic'.

Why would language be quasi-systematic? Language ultimately reflects the structure of what we think about. That reflection is never perfect, only approximate.

It is not the language that gives us a systematic structure, it is the world. This is where Chomsky was wrong.

Attention-based architectures are what make people and networks 'smart'. Attention is an architectural innovation that is not tied only to language. To behave in context-sensitive ways, we must be aware of context. It will continue to extend to all kinds of domains.

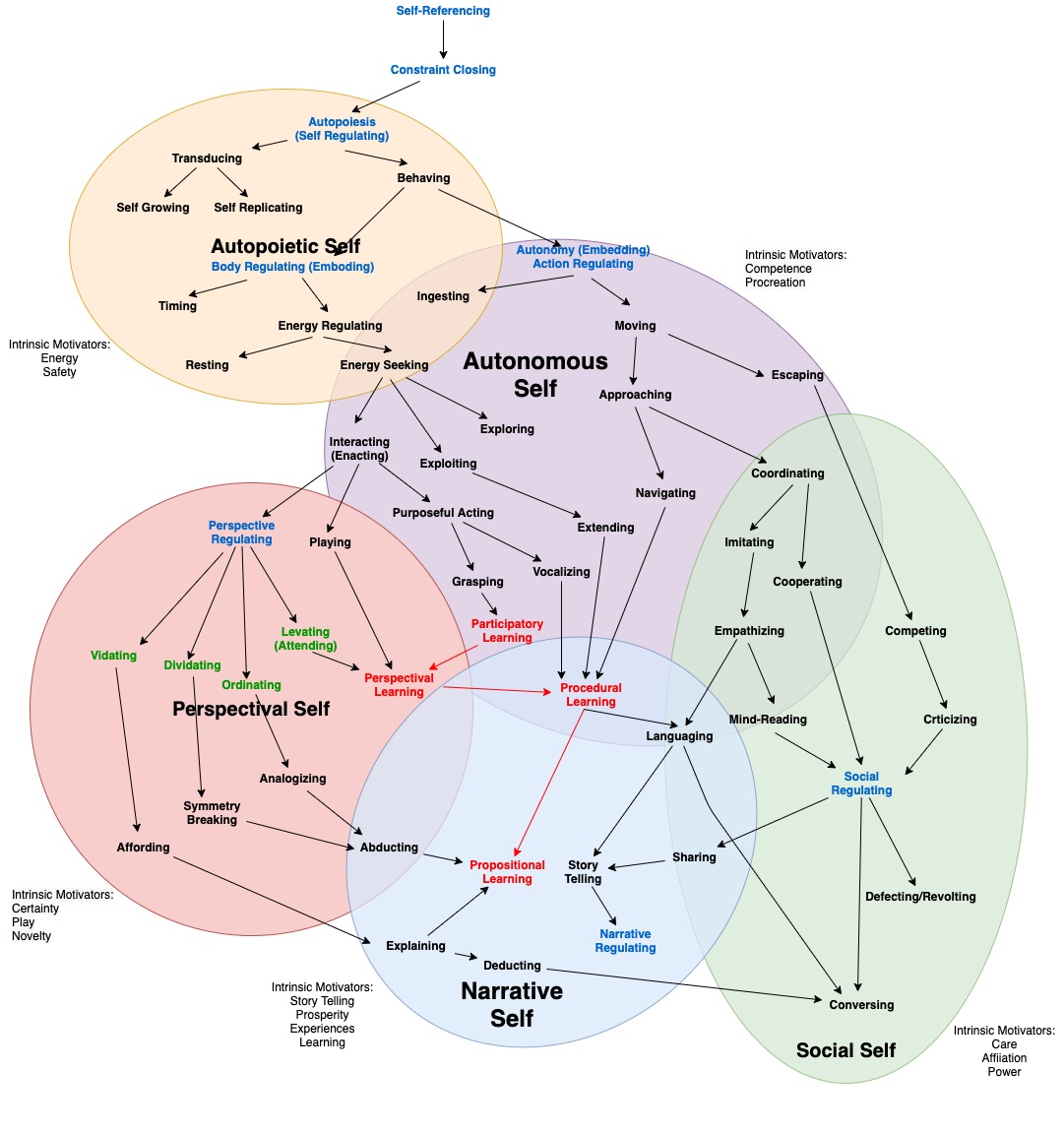

Explanation means understanding why you might do things. The problem with automated systems is that they understand only literal instructions and are unable to explain why. Explanation is an essential capability for AI that participates in the shared world of humans.

We have an incredible advantage in our ability to share our thoughts. This is despite language being constrained to be sequential. Thus language is fundamentally about sharing.

Language forces us to categorize. We have a tendency to overuse categorization, which straitjackets our thoughts. It's powerful and also misleading.

There is a tension: lean too heavily on systematicity and you block intuition. The process of writing down an idea may obscure the process that led to the idea.

What surprised me about the talk is that apparently, systematic reasoning isn't an ability that is available to most of the population. This has huge ramifications on political discourse!

Not only do we have intrinsic biases coming from the cultures we grew up in, but too many of us aren't able to parse the arguments that reveal a cognitive bias. This is quite a revelation!

I had thought that people just had biases (see: Haidt). Not only do they have biases, but they also cannot understand a certain level of argumentation! Humanity is certainly in deep trouble!
