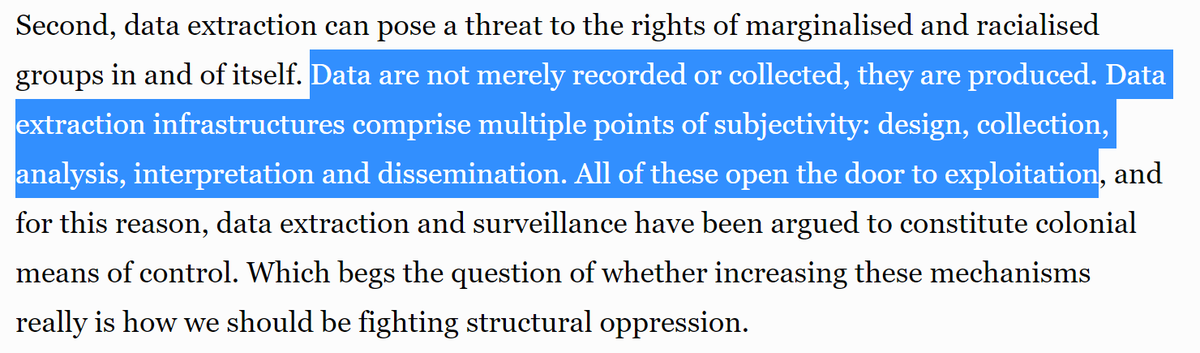

My impression is that some folks use machine learning to try to "solve" problems of artificial scarcity. E.g., we won't give everyone the healthcare they need, so let's use ML to decide who to deny.

Question: What have you read about this? What examples have you seen?

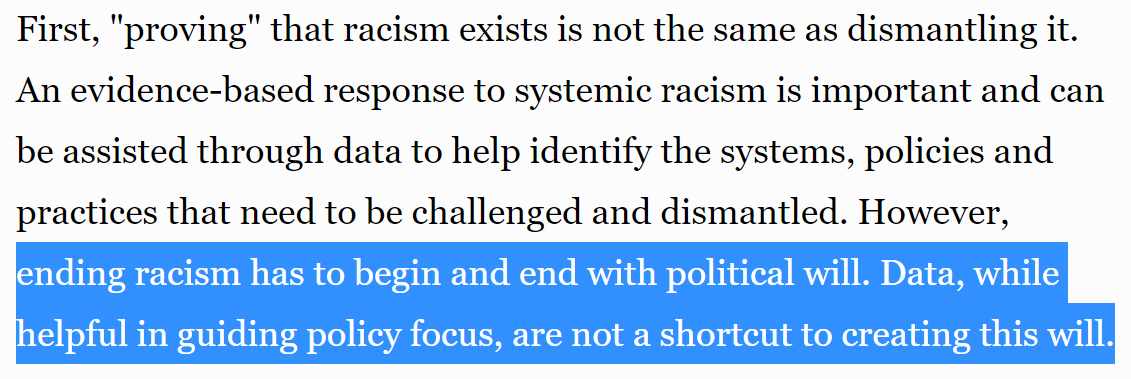

It's not explicitly stated in this article, but it seems to be a subtext that giving everyone the healthcare they need wasn't considered an option:

https://twitter.com/math_rachel/status/1285025248136491008?s=20

To be clear, if the starting point is artificial scarcity of resources, this is a problem machine learning CAN'T solve.

I've heard some responses: "If resources are truly scarce, isn't ML the best way to allocate them fairly & address corruption?"

Not necessarily. Simple, rule-based approaches can be good for consistency & fairness in many cases.

https://twitter.com/math_rachel/status/1196888077790113793