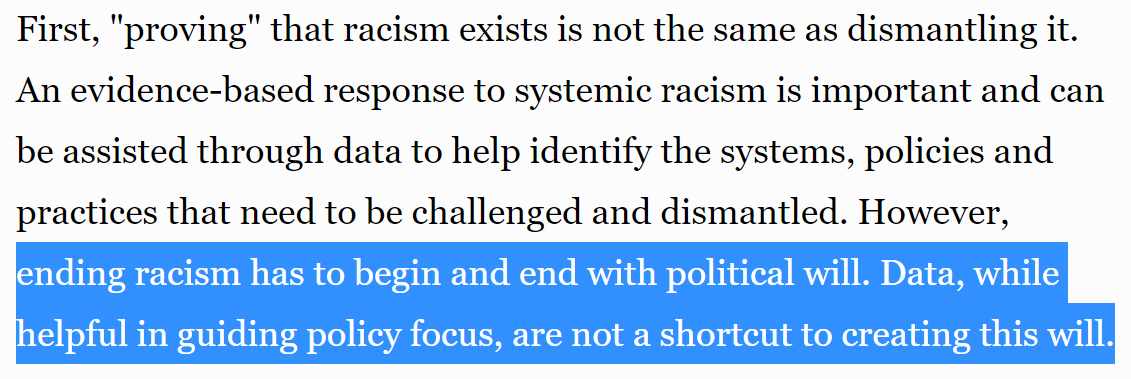

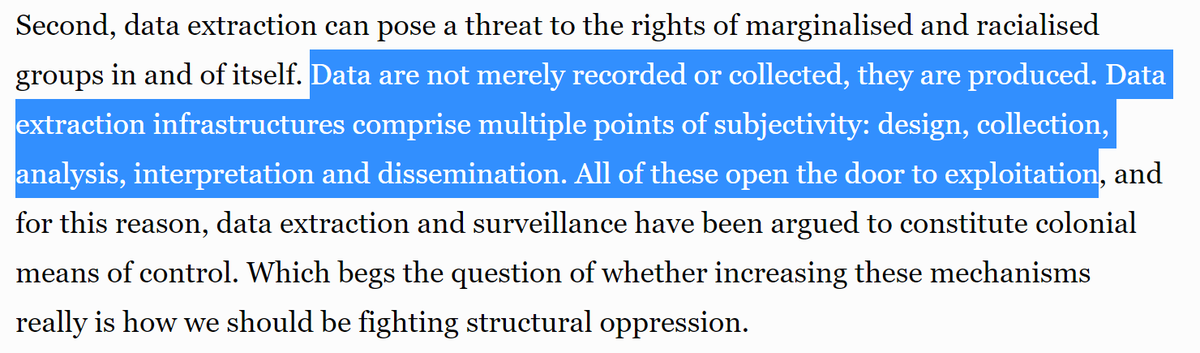

There has been some great work on framing AI ethics issues as ultimately about power.

I want to elaborate on *why* power imbalances are a problem. 1/

*Why* power imbalances are a problem:

- those most impacted often have the least power, yet are the first to identify risks

- those most impacted best understand what interventions are needed

- there is often no motivation for the powerful to change

- power tends to be insulating 2/

The Participatory Approaches to ML workshop at #ICML2020 was fantastic. The organizers highlighted how even many efforts for fairness or ethics further *centralize power*. 3/

https://twitter.com/math_rachel/status/1284168523841847297

I think leaders at major tech companies often see themselves as benevolent & believe that critics do not understand the issues as well as they do. E.g. Facebook criticizes Kevin Roose's data as inaccurate, yet won’t share other data: 4/

https://twitter.com/EthanZ/status/1328800197699375104

Only the issues *most visible* to system designers get addressed. With this model, many issues are not prioritized until they are incredibly widespread & causing serious harm, rather than being addressed early. 5/

https://twitter.com/math_rachel/status/1283249233613619200?s=20

A classic example of tech platforms being insulated from the harms they cause is the case of the Black women who raised the alarm about deceptive sock puppets and coordinated harassment in 2014, yet Twitter failed to respond. 6/

slate.com/technology/201…

slate.com/technology/201…

What motivates the powerful to act: I still think about Facebook hiring 1,200 content moderators in Germany in less than a year to avoid a hefty fine, versus, after 5 years of warnings about genocide in Myanmar, hiring just “dozens” of Burmese content moderators. 7/

https://twitter.com/math_rachel/status/1021906783944597505

As more background, here is a thread of work on framing AI ethics issues as being about power 8/

https://twitter.com/math_rachel/status/1284976543769309184

Machine learning often has the effect of centralizing power 9/

https://twitter.com/math_rachel/status/1293692877298532352

Question: What reasons or resources have you found helpful in convincing those who don’t see power imbalances within tech & AI as a problem, and who believe the solution is primarily for those with power to wield it more benevolently? 11/
