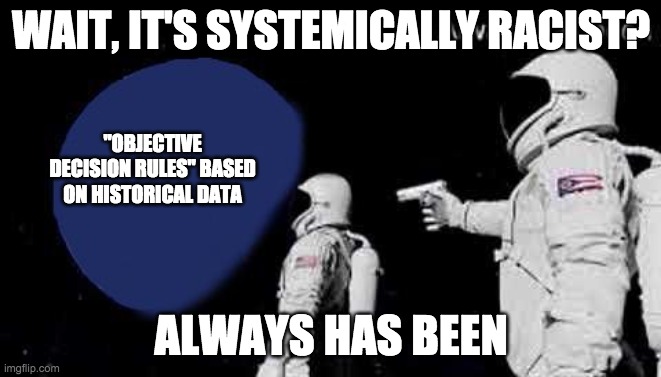

Rachael Meager (see pinned tweet for meme source!)

11 Dec

24 tweets, 4 min read

So some very smart folks have asked how we would apply the AMIP (Approximate Maximum Influence Perturbation) metric to studies of rare events. This kicked off a discussion of what robustness checks are really for, and I want to take that set of questions seriously in this thread.

I think robustness checks mainly (ought to) function to illuminate how variation in the data is being used for inference, and we should then be able to discuss whether we think this is a reasonable situation and adjust our confidence in the results.

The problem is not that there is SOME change to which our analyses are sensitive -- of course there is, there has to be. If your results aren't affected by ANY change you make to the analysis, something has gone horribly wrong with the procedure.

I think @dmckenzie001 has said this before me (sorry if I misquote, David!), but it is not good to show a bunch of checks in which nothing the authors do changes the result at all. It suggests a lack of interest in truly understanding the analysis procedure.

The problem - the thing we rightly fear from robustness checks - is when analyses are sensitive to changes they ought NOT to be sensitive to according to our understanding of the world, or when results display sensitivities that would meaningfully affect our interpretation of them.

These more problematic changes we should investigate generally come in two flavours: (1) any and all very *small* changes, and (2) a set of particular changes to which any sensitivity signals a model/assumption failure.

Very small changes of any kind are generally important to check because, if analyses are very sensitive to them, that signals a fragility we would usually like to know about, and one that would often, in itself, affect our interpretation of the results.

All practitioners (myself included, Bayesians very much included) know that we don't have the exact right model, an exact random sample from the exact population of interest, etc. We need to know if small deviations from the ideal experiment would cause big changes in our results.

Analysis of rare events is a case where it can be entirely reasonable for results we truly do care about to be based on 1% of the sample. But there are (at least) 2 reasons why I think you should still compute the AMIP and find the AMIS (the Approximate Most Influential Set) that would overturn your results.

First, your *audience* needs to know that some tiny % of the sample drives the result. If you're confident this is perfectly ok, there should be no problem sharing that information very plainly.

It is easy to fail to fully appreciate this, especially in areas like econ of crime. If you sample 30K people, and get 6 murders in the control group and 3 in treatment, your audience needs to be absolutely and fully aware that the comparison your result rests on is 6 vs 3.

Now you might want to then adjust what you'd think is a problematic percentage. Of course dropping all murders from 1 group will affect things. But should dropping ONE murder overturn the result? Is that ok?
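To make the 6 vs 3 arithmetic concrete, here is a minimal Python sketch. It assumes two equal arms of 15,000 people each. That split is hypothetical, since the thread only says 30K people were sampled. The sketch asks how many control-group murders would have to be dropped, removing those observations entirely, before the treatment-minus-control difference in rates stops being negative. The function names are illustrative and do not come from any package.

```python
from fractions import Fraction


def rate_difference(control_events, control_n, treat_events, treat_n):
    """Treatment-minus-control difference in event rates, computed exactly."""
    return Fraction(treat_events, treat_n) - Fraction(control_events, control_n)


def drops_to_erase_effect(control_events, control_n, treat_events, treat_n):
    """Smallest number of control-group events to drop (removing each such
    observation from the sample) so the estimated effect is no longer negative."""
    k = 0
    while rate_difference(control_events - k, control_n - k,
                          treat_events, treat_n) < 0:
        k += 1
    return k


if __name__ == "__main__":
    # Hypothetical design: 30,000 people split evenly,
    # 6 murders in control and 3 in treatment.
    k = drops_to_erase_effect(6, 15_000, 3, 15_000)
    print(k, f"{k / 30_000:.4%}")  # prints: 4 0.0133%
```

Under these assumptions, the estimated effect disappears after dropping 4 observations, about 0.013% of the sample. Dropping only 3 leaves the rates at 3/14,997 versus 3/15,000, which is still a tiny negative difference. Whether a change that small *should* overturn the result is exactly the judgment call described above.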

One aspect of this issue is being able to define -- ideally beforehand!! -- what a "small" change is in a given analysis. That's why we don't advocate for any particular filter or binary robust / non-robust rule in the paper.

Second, if you're structurally, by design, only using a tiny % of the data, it usually becomes questionable whether you really are in a "big N" world. Fat tails affect the functioning of the delta method. The efficiency of the mean (vs the median) is eroded extremely fast.
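One way to see how fast the mean loses its efficiency advantage is a small simulation. The sketch below is an illustration under assumptions chosen here, not something taken from the thread. It compares the sampling variance of the median with that of the mean in two cases. The first is normal data. The second is a "contaminated" normal in which 5% of draws come from a distribution ten times wider, a crude stand-in for rare extreme events. A ratio above 1 favours the mean, and a ratio below 1 favours the median.

```python
import random
import statistics


def variance_ratio_median_to_mean(draw, n=101, reps=2000, seed=0):
    """Monte Carlo estimate of Var(sample median) / Var(sample mean)."""
    rng = random.Random(seed)
    means, medians = [], []
    for _ in range(reps):
        sample = [draw(rng) for _ in range(n)]
        means.append(statistics.fmean(sample))
        medians.append(statistics.median(sample))
    return statistics.pvariance(medians) / statistics.pvariance(means)


def standard_normal(rng):
    return rng.gauss(0.0, 1.0)


def contaminated_normal(rng, eps=0.05, wide_sd=10.0):
    """With probability eps, draw from a much wider normal (a rare extreme event)."""
    sd = wide_sd if rng.random() < eps else 1.0
    return rng.gauss(0.0, sd)


if __name__ == "__main__":
    print("normal:      ", round(variance_ratio_median_to_mean(standard_normal), 2))
    print("contaminated:", round(variance_ratio_median_to_mean(contaminated_normal), 2))
```

With purely normal data, the ratio should come out near π/2 ≈ 1.57, so the mean wins. With only 5% contamination, asymptotic theory puts the ratio for this mixture at roughly 0.3, so the median is then far more efficient.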

Even if the rare event is a binary outcome, p in the model is then very close to 0 or 1, and inference near the boundaries of parameter spaces is notoriously difficult. You should virtually never run an LPM (linear probability model) if the true probability of an event lies near the boundary.
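A simple, separate symptom of the boundary problem with an LPM is that its fitted "probabilities" need not stay in [0, 1]. The toy sketch below uses made-up data, not anything from the thread. There are 100 observations with x = 0, ..., 99, and the event occurs only for the three largest x values. The sketch fits the LPM by ordinary least squares in closed form. It illustrates only the fitted-value issue, not the inference problems the thread is mainly concerned with.

```python
def ols_fit(x, y):
    """Closed-form simple linear regression of y on x; returns (intercept, slope)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


if __name__ == "__main__":
    # Hypothetical rare binary outcome: 3 events out of 100, all at high x.
    x = list(range(100))
    y = [1 if xi >= 97 else 0 for xi in x]
    intercept, slope = ols_fit(x, y)
    fitted = [intercept + slope * xi for xi in x]
    n_negative = sum(f < 0 for f in fitted)
    print(f"intercept={intercept:.4f}, slope={slope:.5f}, "
          f"negative fitted probabilities: {n_negative} of {len(x)}")
```

On this toy data, the intercept is negative, and 33 of the 100 observations (x = 0, ..., 32) get a negative fitted "probability".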

If p = 0 or 1, your typical off-the-shelf estimator's behaviour is going to be nonstandard. Mostly people know that, or they figure it out fast, because the computer will usually freak out.

But what we see from e.g. the weak IV literature, and the "local to unity" time series literature, is that once you are in the *neighbourhood* of pathology, you are usually already affected by the pathology.

So if p = 0.98 or 0.01 in your Bernoulli trial, you're probably in trouble from a classical inference perspective. Usually, at the very least, your root-N asymptotics are no good. (I teach this in the context of weak IV and it's the most fun we have all semester... for me anyway.)
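A standard textbook illustration of this is the Wald (normal-approximation) confidence interval for a Bernoulli p, whose validity rests on root-N asymptotics. The sketch below computes its exact coverage by summing binomial probabilities, so no simulation is needed. The sample size n = 100 and the nominal 95% level are assumptions chosen for illustration.

```python
import math


def wald_interval(k, n, z=1.96):
    """Normal-approximation (Wald) interval for a binomial proportion."""
    p_hat = k / n
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - half_width, p_hat + half_width


def wald_coverage(p, n, z=1.96):
    """Exact probability that the Wald interval contains the true p."""
    coverage = 0.0
    for k in range(n + 1):
        lo, hi = wald_interval(k, n, z)
        if lo <= p <= hi:
            coverage += math.comb(n, k) * p**k * (1 - p) ** (n - k)
    return coverage


if __name__ == "__main__":
    for p in (0.5, 0.1, 0.01, 0.98):
        print(f"p={p}: coverage of nominal 95% Wald interval = {wald_coverage(p, 100):.3f}")
```

At p = 0.5 the coverage is close to the nominal 95%. At p = 0.01 the interval covers the true value only about 63% of the time. This is largely because, with probability 0.99^100 ≈ 0.37, there are zero events and the interval collapses to the single point 0.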

This is not at all to suggest that the AMIP signals a problem with the classical inference. It need not. There are cases where the classical properties are fine and the AMIP is big (we simulated some).

But rare events - even rare binary events - are cases in which the classical inference will generally have problems even if the AMIP is small.

It is not inherently wrong for results to be based on 1% of the sample. It might be fine. But if so, you need to (A) know it and be absolutely crystal clear with your readers about it, and (B) be careful to use statistical techniques that are equipped to handle that situation.

We do not advocate for any fixed % rule. That said, we made special note of dropping less than 1% of the data in the abstract, which does tell you that we thought of that as a small part of the data for our applications. But there's no one-%-fits-all.

In our R package, our default implementation does NOT report "here's what happens if you remove the most influential 1%". Instead, our default reports the minimal removal proportion needed to change the sign, the significance, or both, of the analysis's result.
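To build intuition for what "minimal removal proportion to change the sign" means, here is a hand-rolled Python sketch for the simplest possible estimator, a sample mean. It is not the R package's implementation, and the function name is hypothetical. The sketch uses the first-order (linear) approximation that dropping observation i shifts the mean by about -(x_i - mean)/N. It removes the most influential points first until the approximate change exceeds the estimate, and then refits exactly on the remaining data to check. It handles only a sign change, not significance.

```python
import statistics


def approx_sign_flip_set(x):
    """Approximate the smallest set of observations whose removal flips the sign
    of the sample mean, using a first-order (linear) approximation.

    Returns (indices_to_drop, proportion_dropped), or (None, None) if dropping
    every observation that pushes toward the current sign is still not enough.
    """
    n = len(x)
    theta = statistics.fmean(x)
    sign = 1.0 if theta >= 0 else -1.0
    # Approximate effect of dropping observation i on the mean: -(x_i - theta) / n.
    # 'score' is how far dropping i moves the estimate toward zero.
    scores = sorted(((sign * (xi - theta) / n, i) for i, xi in enumerate(x)),
                    reverse=True)

    moved, dropped = 0.0, []
    for score, i in scores:
        if score <= 0:  # further removals would push the estimate away from zero
            break
        moved += score
        dropped.append(i)
        if moved >= abs(theta):
            return dropped, len(dropped) / n
    return None, None


if __name__ == "__main__":
    # Hypothetical data: three large positive values and many small negative ones.
    data = [5.0] * 3 + [-0.1] * 97
    drop, prop = approx_sign_flip_set(data)
    drop_set = set(drop)
    kept = [xi for i, xi in enumerate(data) if i not in drop_set]
    print(f"mean={statistics.fmean(data):.3f}, drop {len(drop)} ({prop:.0%}), "
          f"refit mean={statistics.fmean(kept):.3f}")
```

On this toy data, the approximation says dropping 2% of the observations flips the sign of a positive mean. The exact refit on the remaining 98 points confirms that the mean becomes negative.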

I personally don't want to see this become a threshold-crossing rule, which I do not like in general (ask me about test size being 0.05 all the time! hate it!!!). But I do want to generally see the AMIP so I can adjust my understanding if I need to. FIN!
