When I think about threat hunting:

- Proactive search for active / historical threats

- Proactive search for insights

- Insights lead to better understanding of org

- Insights are springboard to action

- Actions improve security, reduce risk, and shrink the attack surface

With these guiding principles in hand, here's a thread of hunting ideas that will lead to insights about your environment - and those insights should be a springboard to action.

Here are my DCs

Do you see evidence of active / historical credential theft?

Can you tell me the last time we reset the krbtgt account?

Recommendations to harden my org against credential theft?

How many do we already have enabled?

Where can we improve?
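A minimal sketch for the krbtgt question, assuming you've already pulled the account's `pwdLastSet` attribute from Active Directory with whatever tooling you prefer. AD stores that value as a Windows FILETIME (100-nanosecond ticks since 1601-01-01 UTC); the function names below are illustrative, not part of any library.

```python
from datetime import datetime, timedelta, timezone

# Windows FILETIME epoch: 1601-01-01 00:00:00 UTC, counted in 100 ns ticks.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_datetime(pwd_last_set):
    """Convert a raw pwdLastSet value (int or numeric string) to a UTC datetime."""
    # 10 ticks of 100 ns make one microsecond.
    return FILETIME_EPOCH + timedelta(microseconds=int(pwd_last_set) // 10)


def krbtgt_password_age_days(pwd_last_set, now=None):
    """Whole days since the krbtgt password was last set."""
    now = now or datetime.now(timezone.utc)
    return (now - filetime_to_datetime(pwd_last_set)).days
```

The age on its own is just an insight; what counts as "too old" is a policy call for your org.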

Here's my #AWS environment

We're worried about credential theft.

Let's query #CloudTrail logs for API calls that returned "Access Denied" from the same IAM / Principal ID in a short time-frame.

At a minimum this will lead to insights about our #AWS env
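Here's one way that CloudTrail hunt could be sketched, assuming the events are already loaded as a list of dicts (for example, the `Records` array from exported CloudTrail JSON files). `eventTime`, `errorCode`, and `userIdentity.principalId` are standard CloudTrail record fields; the set of error codes, the 10-minute window, and the threshold of 5 are assumptions to tune for your environment.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Error codes treated as "access denied" (an assumption; extend for your services).
DENIED_CODES = {"AccessDenied", "Client.UnauthorizedOperation"}


def parse_time(value):
    # CloudTrail eventTime looks like "2021-03-01T12:00:00Z".
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ")


def denied_bursts(records, window=timedelta(minutes=10), threshold=5):
    """Return {principalId: largest number of denied calls inside one window}
    for principals that reach the threshold."""
    by_principal = defaultdict(list)
    for rec in records:
        if rec.get("errorCode") not in DENIED_CODES:
            continue
        principal = rec.get("userIdentity", {}).get("principalId", "unknown")
        by_principal[principal].append(parse_time(rec["eventTime"]))

    flagged = {}
    for principal, times in by_principal.items():
        times.sort()
        start = 0
        best = 0
        for end, t in enumerate(times):
            # Move the window start forward until it is within `window` of t.
            while t - times[start] > window:
                start += 1
            best = max(best, end - start + 1)
        if best >= threshold:
            flagged[principal] = best
    return flagged
```

A hit isn't proof of credential theft. It could just as easily be a misconfigured role or a broken deployment, which is still an insight worth acting on.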

Here's where my critical data is stored.

Do you see any evidence of active or historical threat actor activity?

How can we improve visibility to these systems?

What are we doing well to protect these systems, and any suggestions on how we can improve?

Here's a list of how employees can access the environment remotely - yeah, here are the single-factor methods.

Any sign of active / historical unauthorized access?

How can we improve viz?

New detection opportunities?

Recommendations to secure remote access?

I'm worried about unauthorized access to O365 / Okta

Any sign of unauthorized access?

Can you tell me how many employees access O365 / Okta remotely via VPN?

Which VPN services do they use?

Policy / config recommendations to secure O365 / Okta?
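A rough sketch for the VPN questions, assuming you've exported sign-in events as `(user, source_ip)` pairs and have your own mapping of VPN service names to CIDR ranges. Both inputs, and the function name, are hypothetical; neither O365 nor Okta hands you this exact shape.

```python
import ipaddress
from collections import defaultdict


def users_per_vpn(sign_ins, vpn_ranges):
    """sign_ins: iterable of (user, source_ip) pairs.
    vpn_ranges: {service_name: [CIDR strings]} that you supply (hypothetical).
    Returns {service_name: set of users seen signing in from that service}."""
    networks = [
        (name, ipaddress.ip_network(cidr))
        for name, cidrs in vpn_ranges.items()
        for cidr in cidrs
    ]
    seen = defaultdict(set)
    for user, source_ip in sign_ins:
        addr = ipaddress.ip_address(source_ip)
        for name, net in networks:
            if addr in net:
                seen[name].add(user)
                break  # first matching service wins
    return dict(seen)
```

`len(result[name])` answers "how many employees", and the keys answer "which VPN services". Sign-ins from ranges you don't recognize are where I'd look next.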

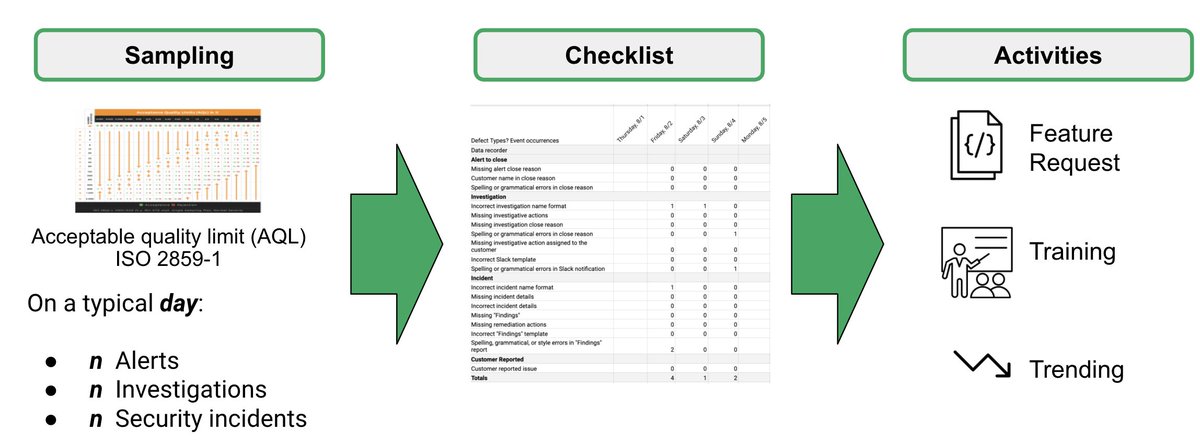

Recap: We started with a question / a worry.

We first asked: is there a problem?

And then we asked: given what we've learned, how can we improve?

Hunting will lead to insights and insights should be a springboard to action.
