BREAKING: @Facebook just took down two foreign influence ops that it discovered going head to head in the Central African Republic, as well as targeting other countries.

More-troll Kombat, you might say.

Report by @Graphika_NYC and @stanfordio: graphika.com/reports/more-t…

There have been other times when multiple foreign ops have targeted the same country.

But this is the first time we’ve had the chance to watch two foreign operations focused on the same country target *each other*.

In the red corner, individuals associated w/ past activity by the Internet Research Agency & previous ops attributed to entities associated w/ Prigozhin.

In the blue corner, individuals associated w/ the French military.

@Facebook report here: about.fb.com/news/2020/12/r…

Two households, both alike in dignity… well ok, two troll ops.

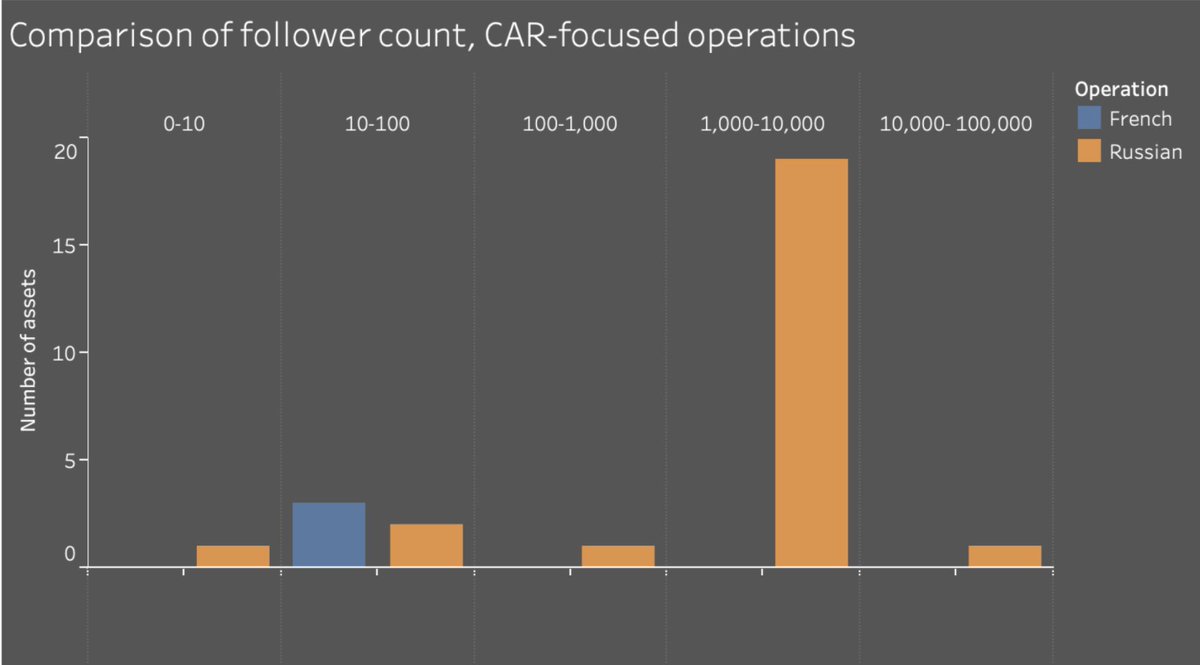

Generally low followings. Russian op mainly targeted CAR, South Africa, Cameroon. French op focused on CAR and West Africa.

And they trolled each other.

Details in our report. Highlights to follow here.

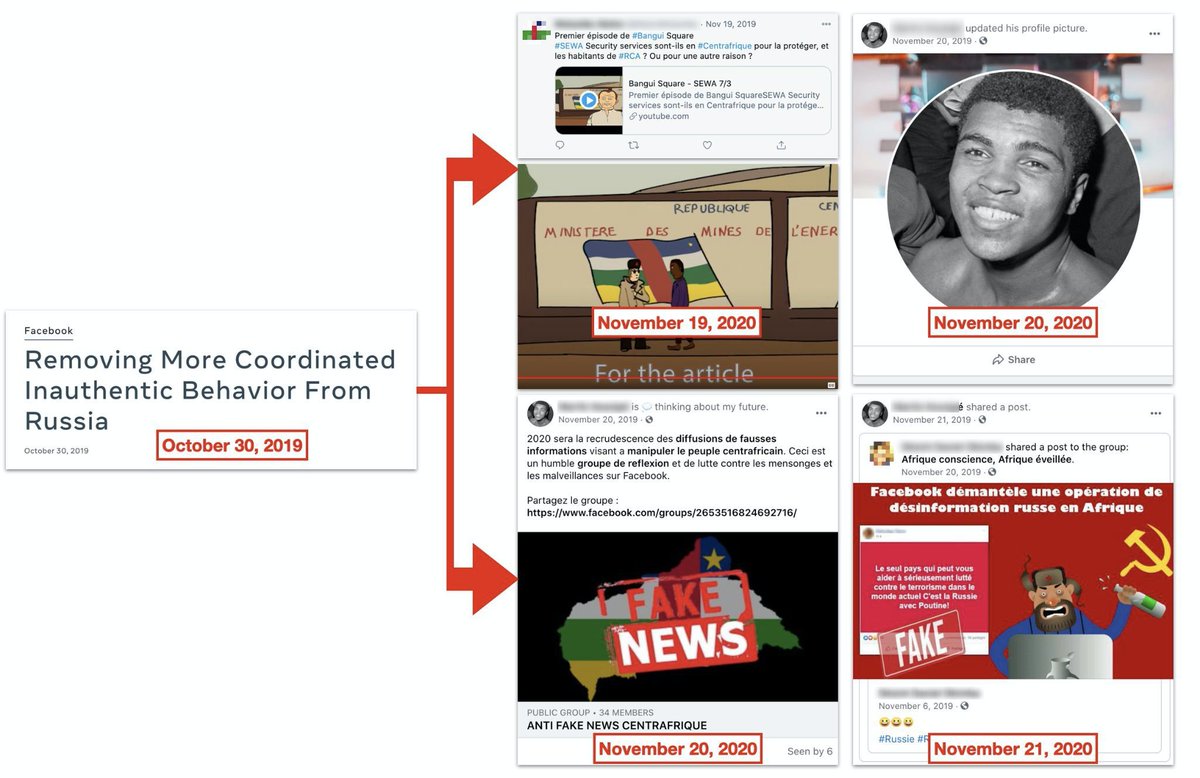

So, background: in October 2019, Facebook took down a bunch of Prigozhin-linked assets across Africa, including CAR.

Our friends at @stanfordio wrote it up at the time.

cyber.fsi.stanford.edu/io/news/prigoz…

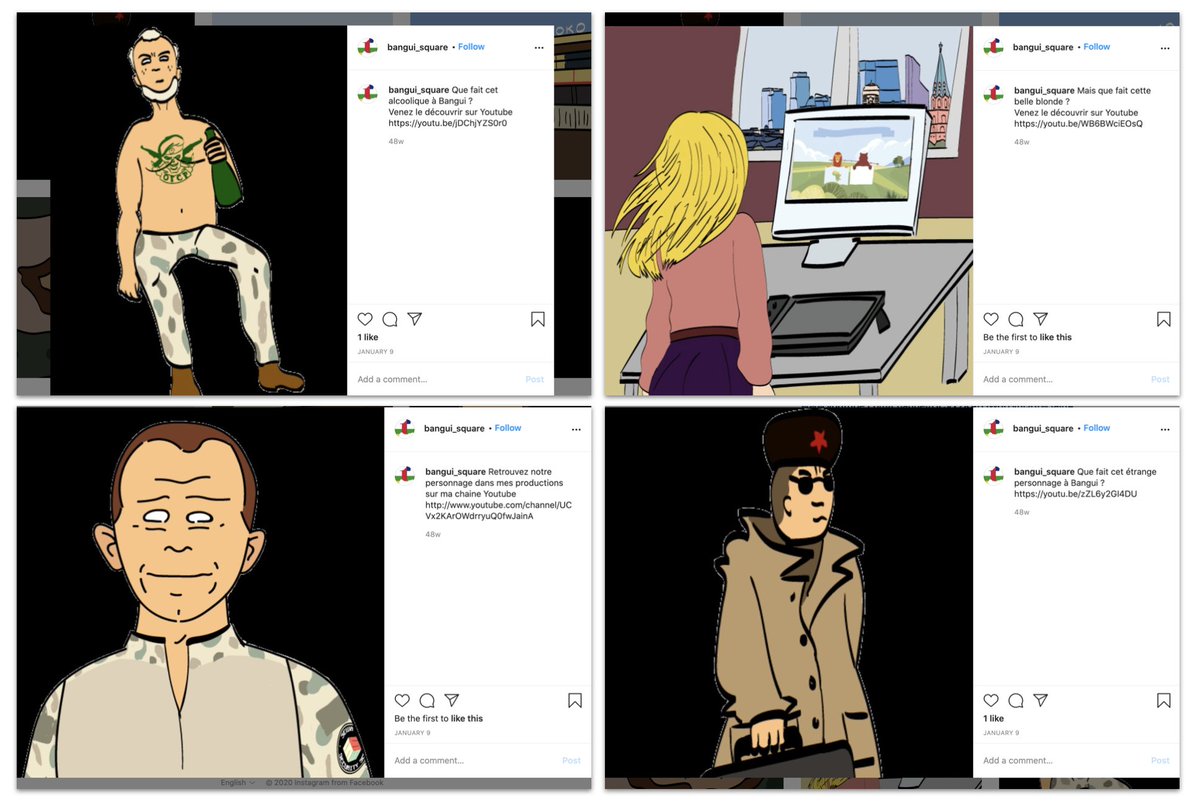

This fabulous cartoon, about a bear saving a lion from French/U.S. hyenas, was sponsored by Prigozhin firm Lobaye Invest at the time. (h/t @dionnesearcey, who wrote it up for @nytimes in September 2019)

Troll assets boosted it.

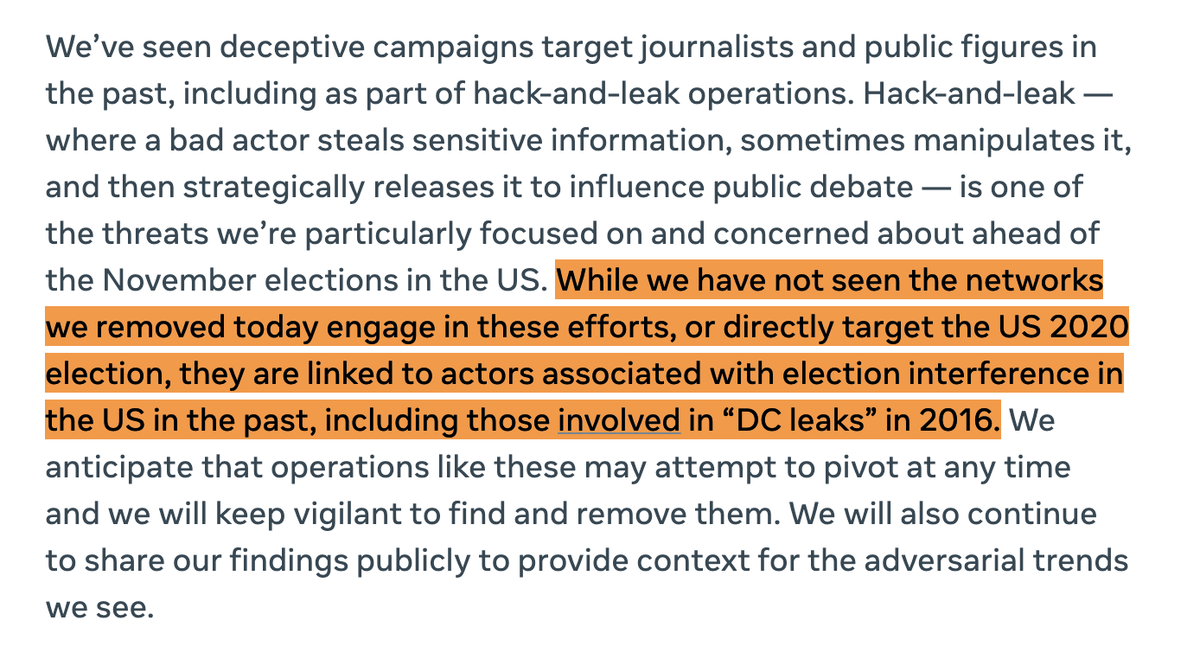

What we didn't know was that there was a recent, fledgling French operation, mostly praising France, sometimes criticising Russia and Prigozhin.

After the FB takedown, they set up a few fake assets to, um, "fight fake news."

This was definitely a "fight fire with fire" effort.

Their portrayals of Russians were, well... troll-y.

It was also a direct and explicit reaction to the earlier Russian operation.

Note the cartoon on "Tatiana"'s screen. Familiar?

It also focused on the Wagner group and Russian mercenaries. But the methods it used were ... unfortunate.

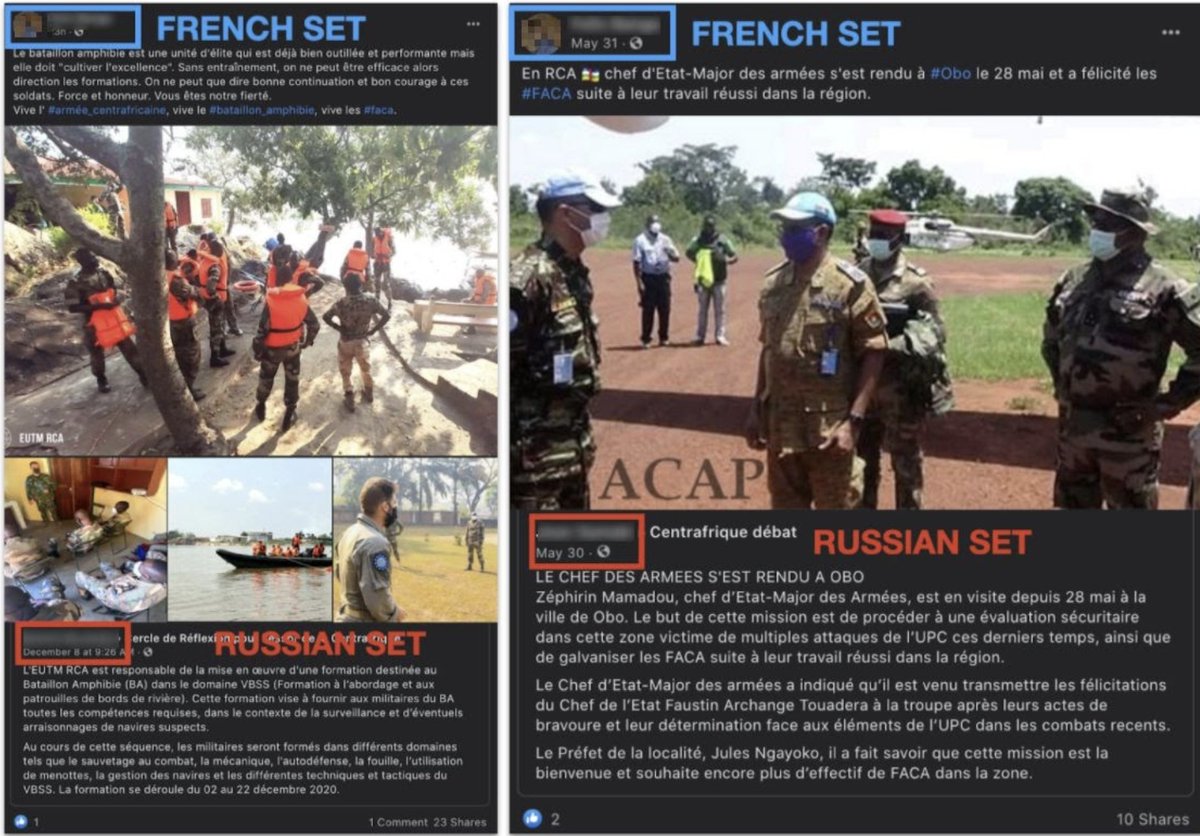

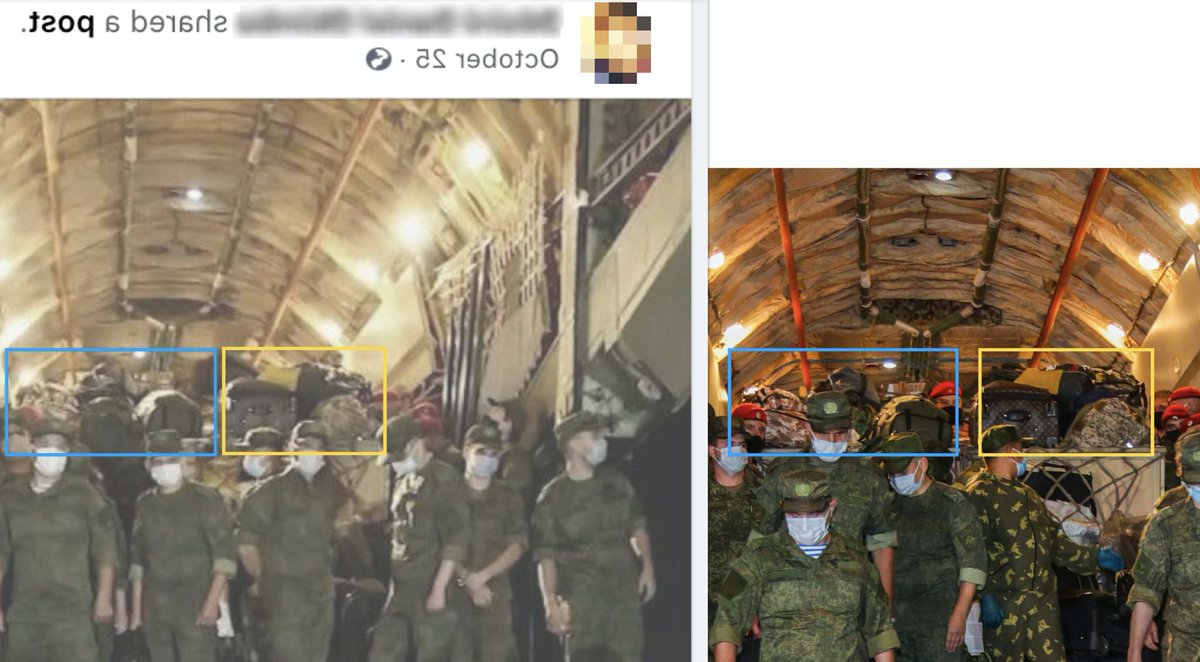

Left, post by a French asset on "Russian mercenaries arriving in CAR," October.

Right, news report on Russian medics landing in Kyrgyzstan, July.

If you reverse the French photo and compare the backpacks in the background... oops.

Same event, shot from a slightly different angle.

That, folks, is disinformation.

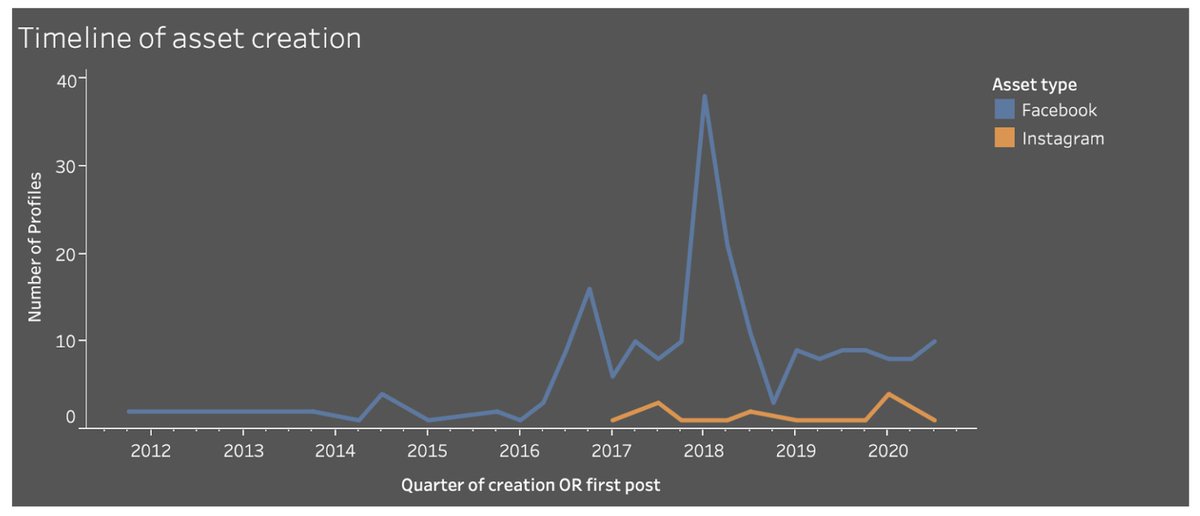

Meanwhile, from January the Russian operation created a bunch of new CAR-focused assets. Trying to rebuild the network.

Unlike the French operation, this one seemed primarily focused on domestic politics, including support for the president.

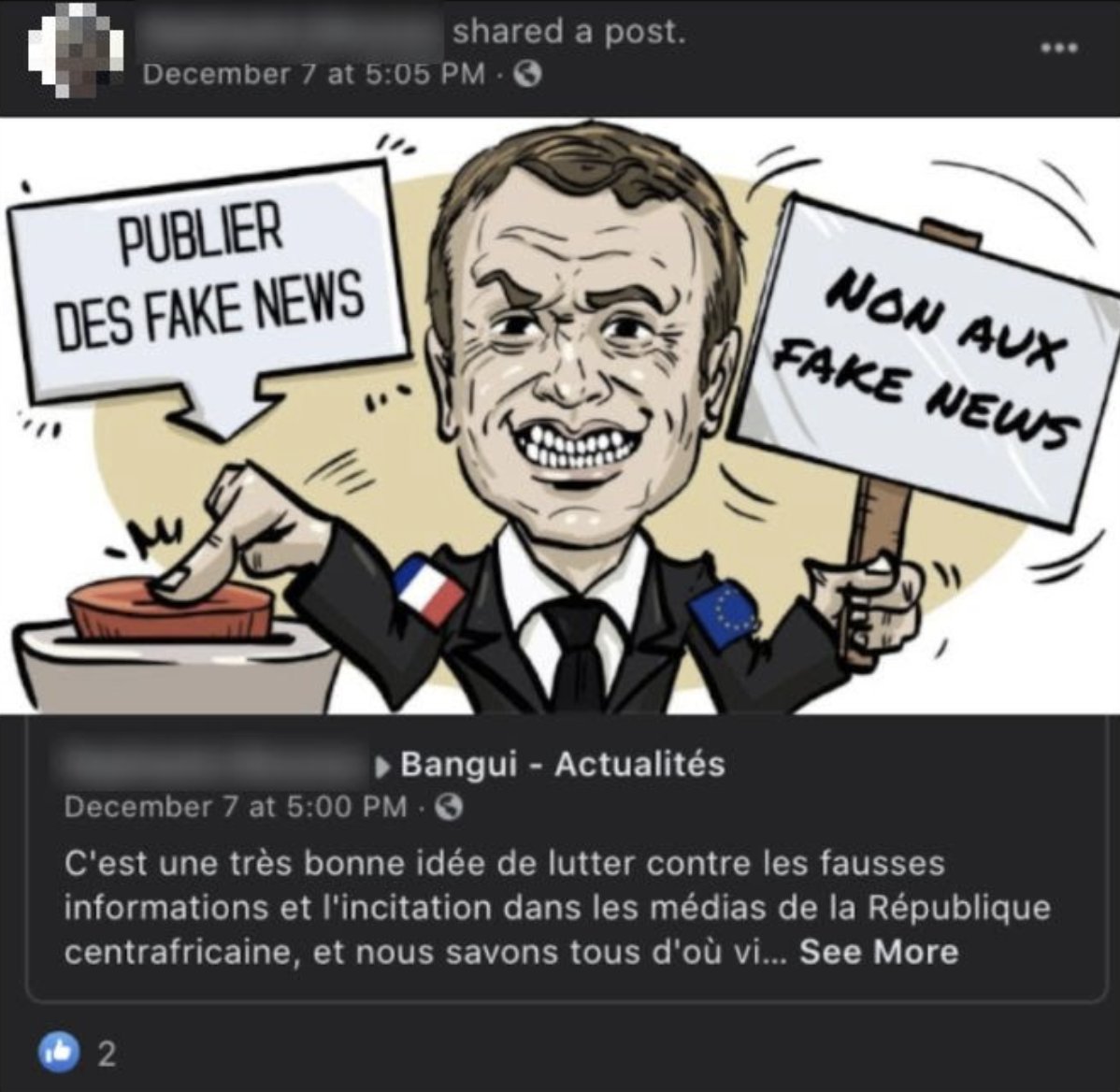

... and spread negative content about the French, including by accusing them of fake news.

Oh the irony.

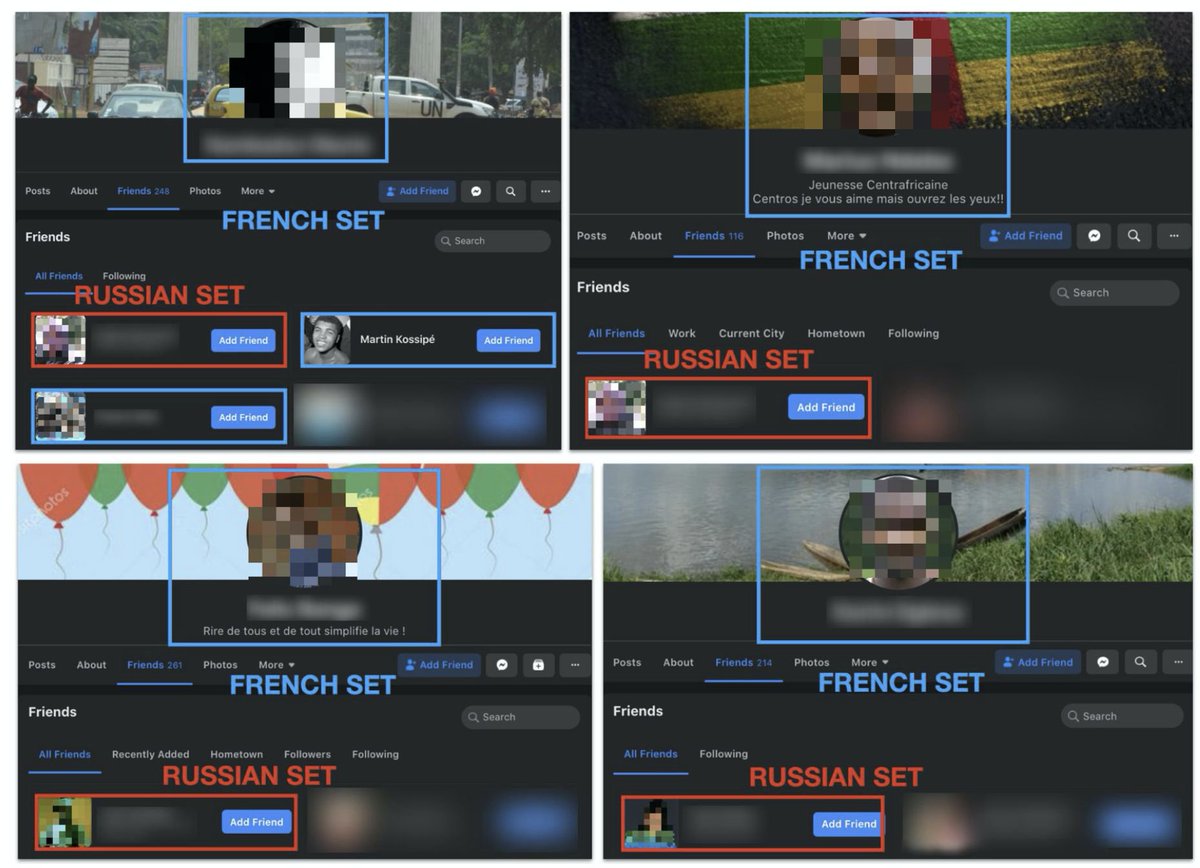

Thing is, the French and Russian ops were posting into the same groups, and liking the same pages.

They even liked *each other*.

Pink = FR accounts.

Green = RU accounts.

Red = RU pages.

Blue = unaffiliated pages.

So it wasn't long before they started noticing each other.

Here's a French account calling out a "fake" post by a Russian account. January this year.

... and then they friended them.

Fishing for clues?

"I'm not really a troll"?

"I can see your mouse from here"?

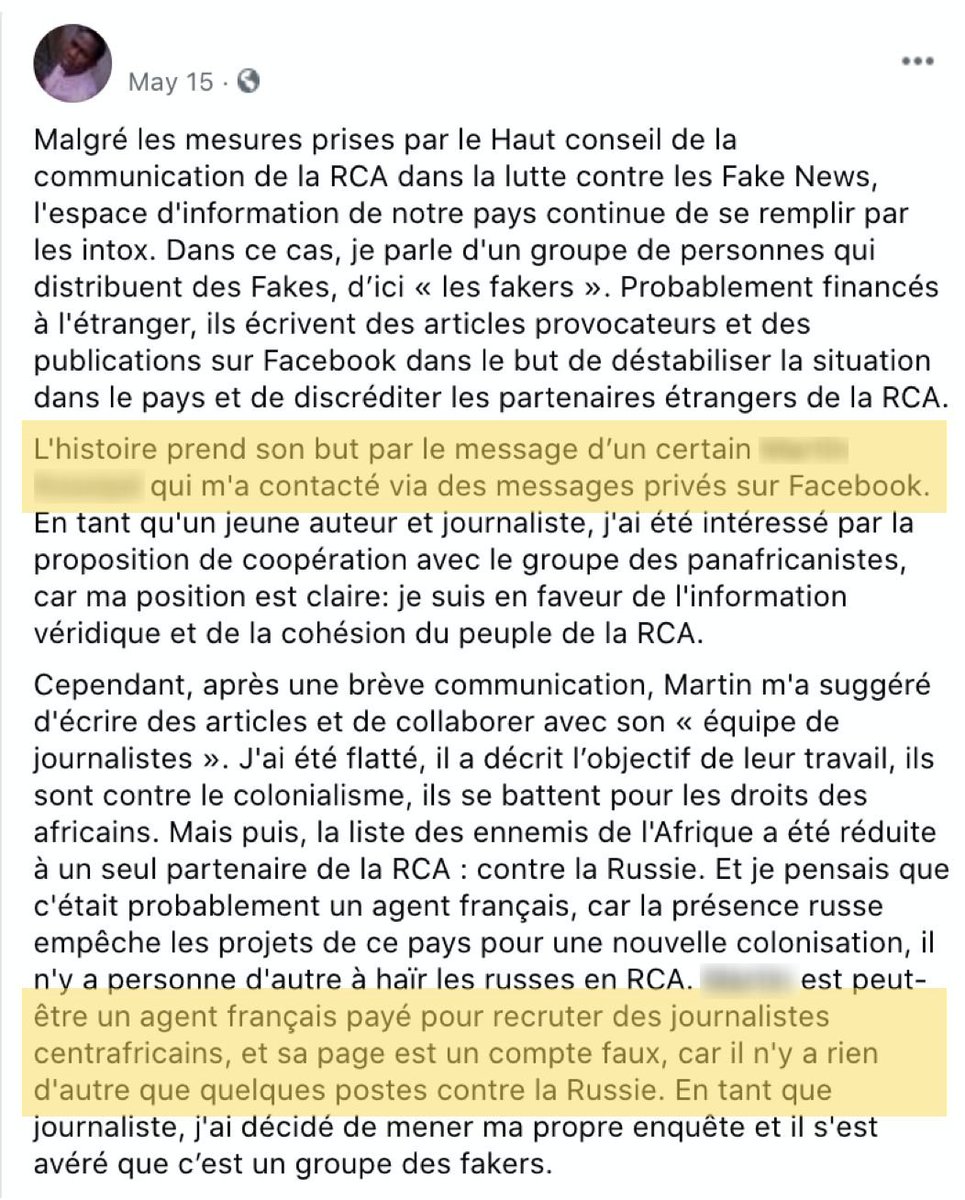

This was the weirdest moment. Russian asset accusing French asset of DM'ing him *to recruit him*.

Never take a troll's word at face value, but if true, this would be fascinating. Who was trolling whom?

This activity didn't generally get much traction. One Russian asset focused on CAR politics had 50k followers, the rest on both sides were in the hundreds or, at best, low thousands. On YouTube, most videos had a few dozen views; on Twitter, small numbers of retweets.

And here's the thing: we're writing about these operations because they got caught and taken down.

Which is what happens when fakes get caught.

Even - perhaps especially - "anti-fake-news" fakes.

This is why fighting fakes with fakes is *not a good idea*.

Expose trolls and fakes, yes. But don't do it with more trolls and fakes. Because then you get things like this.
