I recently have had a number of aspiring ML researchers ask me how to stay on top of the paper onslaught. Here are three concrete tips:

1) Pick a tiny subfield to focus on

2) Skim

3) Rely on your community

Thread to explain ⬇️ (1/5)

1) Pick a tiny subfield to focus on

2) Skim

3) Rely on your community

Thread to explain ⬇️ (1/5)

1) Pick a tiny subfield to focus on

It's impossible to stay on top of "all of ML". It's a gigantic and diverse field. Being an effective researcher requires laser-focusing on a subfield. Pick a problem that is important, excites you, and you feel you could make progress on. (2/5)

It's impossible to stay on top of "all of ML". It's a gigantic and diverse field. Being an effective researcher requires laser-focusing on a subfield. Pick a problem that is important, excites you, and you feel you could make progress on. (2/5)

2) Skim

You'll find that many papers within your subfield of choice have a lot in common - there is often only a small nugget of novelty in each paper. It's incredibly important to develop your ability to find this nugget as quickly as possible. (3/5)

You'll find that many papers within your subfield of choice have a lot in common - there is often only a small nugget of novelty in each paper. It's incredibly important to develop your ability to find this nugget as quickly as possible. (3/5)

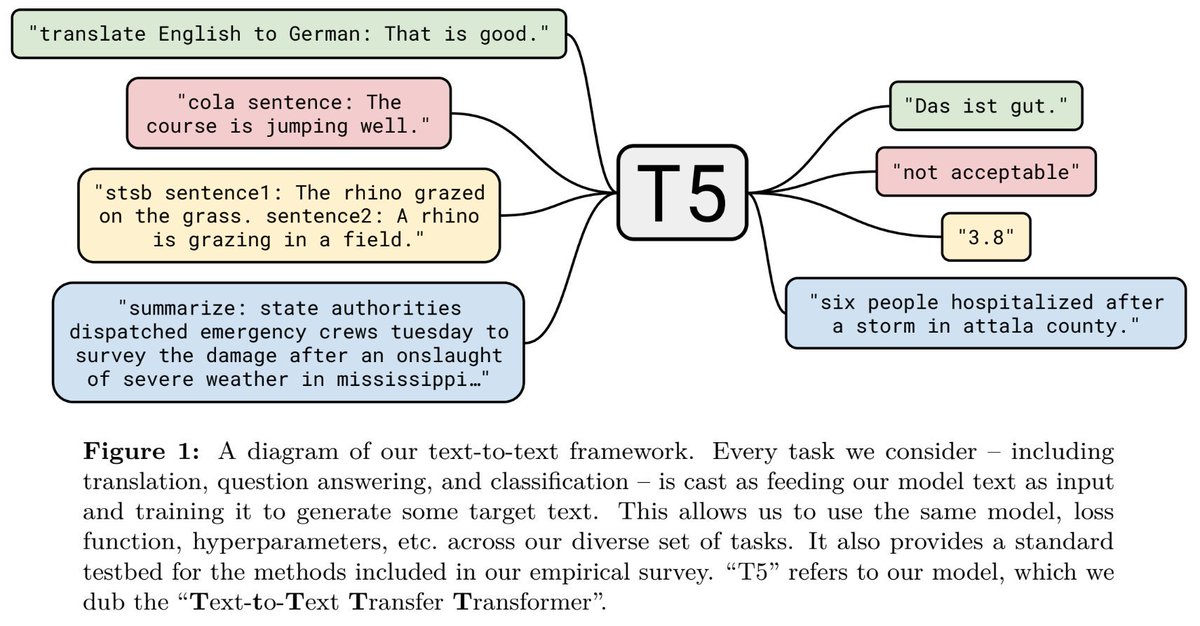

For example, try scrolling down to the algorithm box or explanatory diagram and see if you can figure out the main idea/contribution of the paper in less than a minute. Then decide if you want to read the rest. (4/5)

3) Rely on your community

Find a community of people who care about the same subfield. I created a reading group during my PhD for this. If you aren't already a part of a community, Twitter is a nice stand-in - just follow people who work on stuff you care about. (5/5)

Find a community of people who care about the same subfield. I created a reading group during my PhD for this. If you aren't already a part of a community, Twitter is a nice stand-in - just follow people who work on stuff you care about. (5/5)

• • •

Missing some Tweet in this thread? You can try to

force a refresh