I was a product manager on Samsung #ecommerce, and we were testing a hypothesis that users were ready to buy products via chat, i.e. conversational commerce. I got a $1M budget to validate it. Here’s how a lack of model explainability dooms business decisions. Read on…

We selected the most popular chat app, FB Messenger, to test this hypothesis. To accelerate the test, we ran ads with a promotion that opened a chat window directly into our commerce chatbot.

Now about the ad target. We had a database of millions of users who had previously engaged with the Samsung brand. The target of 300k users for the ad campaign was decided by an #ML model.

The target users were selected based on their propensity to buy. I asked the data scientist how the model selected the target segment. They said, “It's just the model, we can’t tell how it predicts.”

We structured the experiment around variations of message and product price. An immediate learning was that lower price points worked better for purchases, while higher price points did well at getting users to ask for product information.

Key question on everyone’s mind - what is unique about the users who are converting and how can we get more like them?

The answer again? “There is no way to know why the model selected the user.”

The pilot failed to meet our ad conversion goals. The key retrospective question: was the ad audience wrong, and should we have chosen others from the user database? Or was the message wrong?

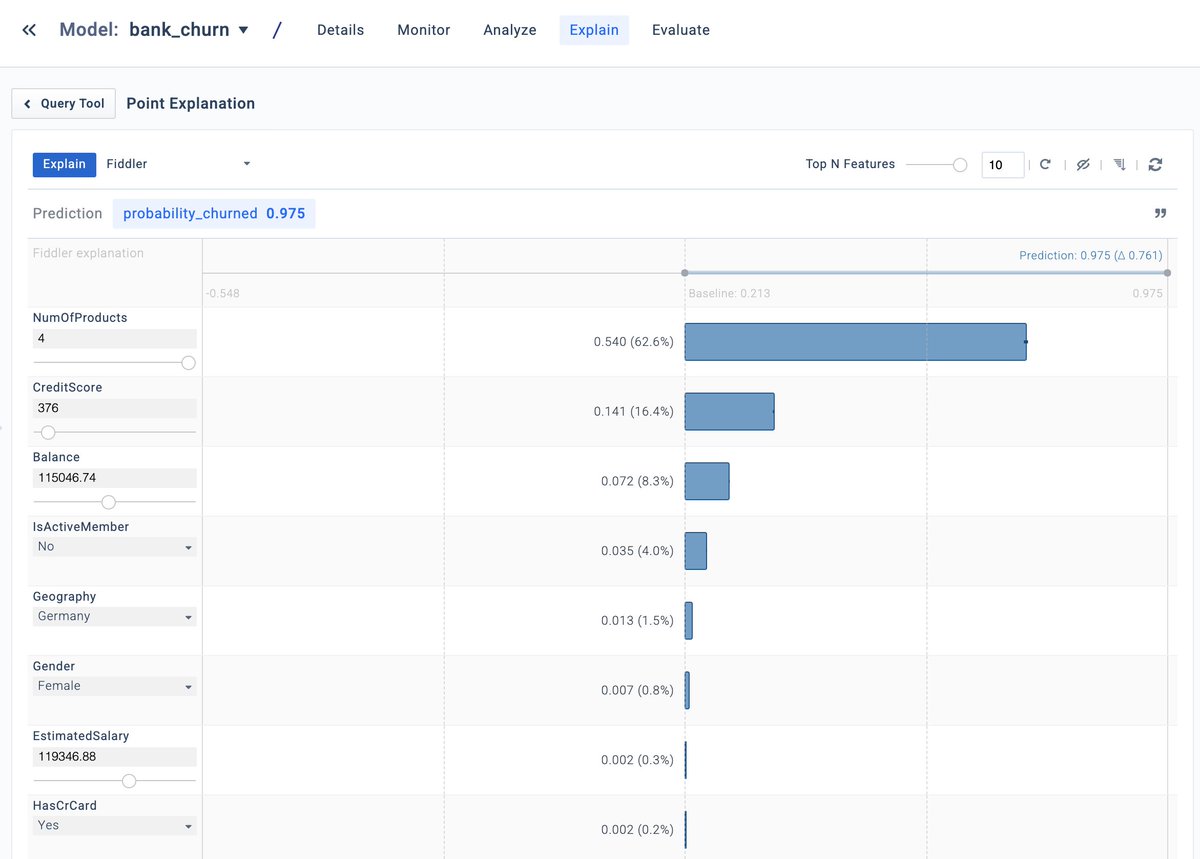

If I could have viewed an explanation of why the model picked a converted user (like this churn prediction explanation from @fiddlerlabs), we could have selected more lookalikes and saved the pilot.

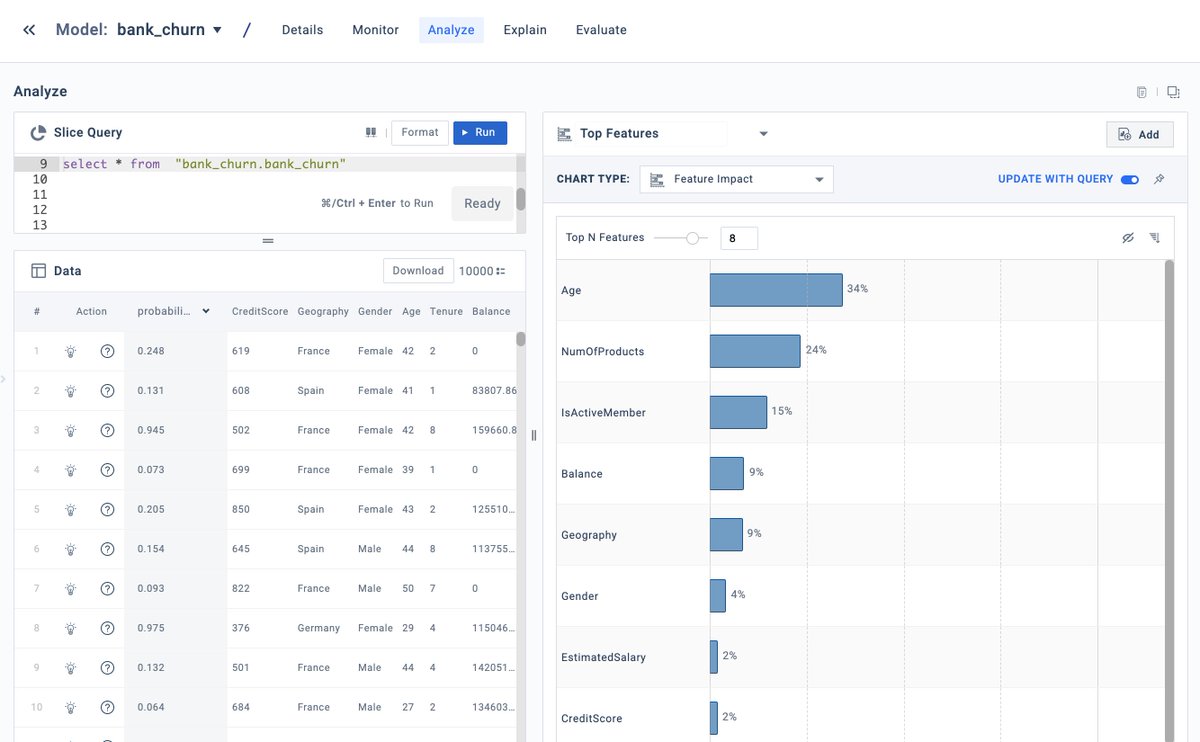

If I could have viewed which features were driving the selection of the audience, or of converted users over others (like this churn prediction one from @fiddlerlabs), we could have iterated towards the right audience and saved the pilot.

Explainability isn’t just critical for high-value use cases - it's THE KEY to ensuring the success of all ML use cases!

#ExplainableAI #Analytics
