Update: Following my tweet on Thursday, Google fixed its autocomplete, which had been promoting "civil war is inevitable".

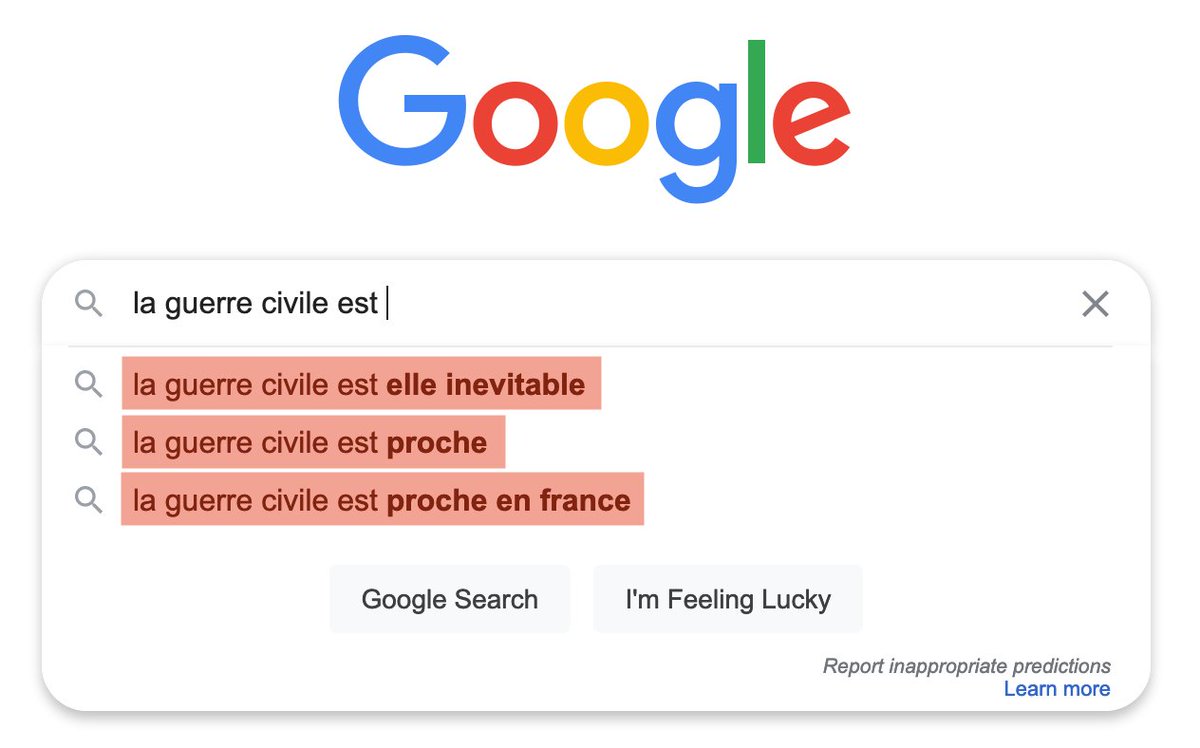

Here's what Google autocomplete for "civil war is " looked like before Thursday:

THREAD

Why does Google suggest that? Does the algorithm know something we don't? Having worked at Google, I don't think so. I think fearmongering is effective at generating clicks, so Google *promotes* fearmongering.

5/

Now, imagine what many people think when they see "civil war is inevitable" coming from Google. Many probably conclude there must be some truth to it. They might think they need to take action.

6/

Conclusion: when dangerous algorithmic bugs are exposed, Google takes action.

Finding them one by one is not enough. So I'll continue working with researchers to create tools to help anyone find those bugs.

7/

PS: Here's the link to my tweet from Thursday:

8/

https://twitter.com/gchaslot/status/1346958038889078785